Introduction

LLMs are powerful, but they’re also forgetful.

Out of the box, they have no knowledge of your PDFs, chat logs, or product catalog. That’s where vector databases come in.

Vector databases are the memory layer of GenAI, especially for Retrieval-Augmented Generation (RAG) applications.

This post explains what vector DBs are, why they matter, and which AWS-native (or AWS-compatible) options to consider.

What Are Vector Databases?

Instead of storing data as rows and columns, vector databases store embeddings: numerical representations of text, images, or other data.

These embeddings live in high-dimensional space, which allows you to:

- Compare semantic meaning, not just keywords

- Retrieve similar context for GenAI prompts

- Power search, recommendations, and memory

Think: “Show me the chunks of data that are most like this question.”
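To make "like this question" concrete, here is a minimal sketch of cosine similarity, one common way to compare embeddings. The three-dimensional vectors and chunk names are invented for illustration; real embeddings have hundreds or thousands of dimensions and come from a model.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical embeddings, made up for this example.
question = [0.9, 0.1, 0.0]
chunks = {
    "refund policy": [0.8, 0.2, 0.1],
    "office hours": [0.1, 0.9, 0.2],
}

# Rank chunk names from most to least similar to the question.
ranked = sorted(
    chunks,
    key=lambda name: cosine_similarity(question, chunks[name]),
    reverse=True,
)
print(ranked)
```

A vector database does the same kind of ranking, but over millions of vectors and usually with an approximate index rather than a full scan.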

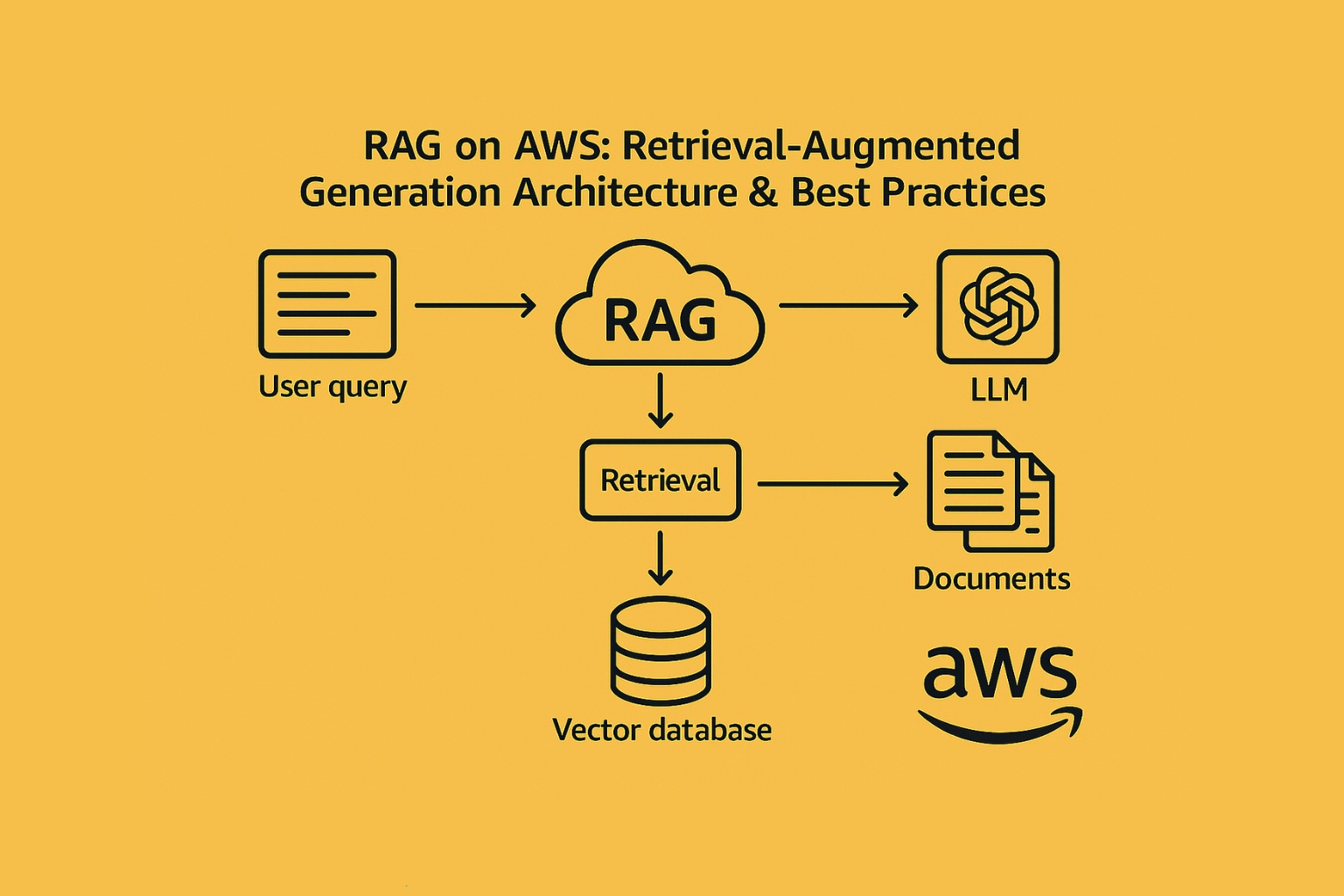

How Vector Databases Enable GenAI Workflows

Use case: A user asks a question.

You:

- Convert their query into a vector (embedding)

- Search your vector DB for the closest-matching chunks

- Feed those chunks into the prompt

- Let the LLM generate a response grounded in that context

Result: The model answers from your data without being retrained.
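The flow above can be sketched end to end in a few lines. This is a toy, not a production setup: `toy_embed` is a hypothetical bag-of-words stand-in for a real embedding model, the "vector DB" is a plain Python list, and the prompt wording is an assumption.

```python
import math
import re
from collections import Counter


def toy_embed(text):
    """Stand-in for an embedding model: a sparse word-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query, store, k=2):
    """Embed the query and return the text of the k most similar chunks."""
    q = toy_embed(query)
    scored = sorted(store, key=lambda item: cosine(q, item["vector"]), reverse=True)
    return [item["text"] for item in scored[:k]]


def build_prompt(question, chunks):
    """Inject retrieved chunks into a simple prompt template."""
    context = "\n".join(f"- {c}" for c in chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


docs = [
    "Refunds are issued within 14 days of purchase.",
    "Our office is open Monday to Friday.",
    "Shipping takes 3 to 5 business days.",
]
# In-memory stand-in for the vector DB: each record keeps text and vector.
store = [{"text": d, "vector": toy_embed(d)} for d in docs]

question = "How many days do refunds take?"
top_chunks = retrieve(question, store, k=1)
prompt = build_prompt(question, top_chunks)
print(prompt)
```

The resulting `prompt` string is what you would send to the LLM. Swapping `toy_embed` for a real model and `store` for a real vector DB changes the quality of retrieval, not the shape of the flow.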

Vector Database Options on AWS

| Option | Description | Best For |
|---|---|---|
| Amazon OpenSearch Service (k-NN plugin) | AWS-native, fully managed, supports hybrid keyword + vector search | SaaS platforms, enterprise apps |
| pgvector on Amazon RDS / Aurora | Postgres extension supporting vector search, ideal for teams already using Postgres | Teams with existing Postgres infrastructure |
| Pinecone / Weaviate | Third-party vector DBs, available as hosted SaaS (Pinecone) or self-hosted containers (Weaviate), offering high-performance similarity search | AI-native startups with scale needs |
| Amazon Neptune ML | Graph database with vector similarity capabilities | Knowledge graphs, entity resolution, linked data use cases |

Use OpenSearch if you want AWS-native integration and scalable retrieval.
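If you go the OpenSearch route, the k-NN plugin expects an index with `knn` enabled and a `knn_vector` field. Below is a minimal sketch of the request bodies as Python dicts. The field names (`embedding`, `text`, `doc_id`) and the helper functions are assumptions for illustration; the `dimension` must match whatever your embedding model outputs.

```python
import json


def knn_index_body(dimension, field="embedding"):
    """Index settings and mapping for a k-NN enabled OpenSearch index."""
    return {
        "settings": {"index": {"knn": True}},
        "mappings": {
            "properties": {
                field: {"type": "knn_vector", "dimension": dimension},
                "text": {"type": "text"},       # raw chunk, usable for keyword search
                "doc_id": {"type": "keyword"},  # metadata for filtering and tracing
            }
        },
    }


def knn_query_body(query_vector, k=3, field="embedding"):
    """Search body that returns the k nearest neighbours of query_vector."""
    return {
        "size": k,
        "query": {"knn": {field: {"vector": list(query_vector), "k": k}}},
    }


# Toy 4-dimensional example; real models produce far larger vectors.
index_body = knn_index_body(dimension=4)
query_body = knn_query_body([0.1, 0.2, 0.3, 0.4], k=2)
print(json.dumps(query_body, indent=2))
```

With the `opensearch-py` client, these bodies would typically go to `client.indices.create(index=..., body=index_body)` and `client.search(index=..., body=query_body)`. Treat this as a starting point and check the k-NN documentation for engine and distance settings.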

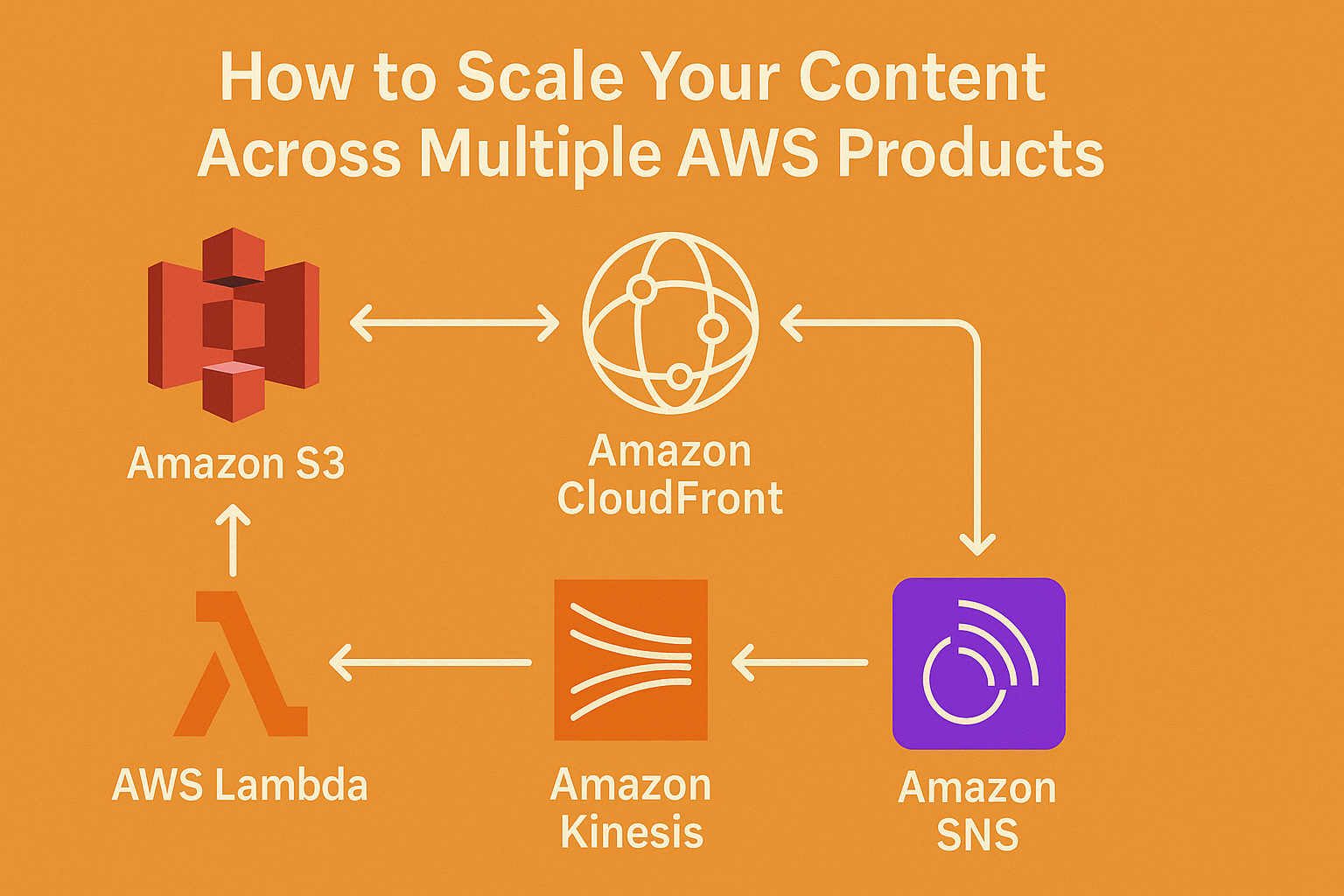

How to Build a Vector Pipeline in AWS

- Generate Embeddings: Use Titan Embeddings via Bedrock, or OpenAI/Instructor if external

- Store Embeddings: Push into OpenSearch or pgvector with associated metadata (doc ID, chunk #, tags)

- Query on User Prompt: Embed the prompt → run similarity search → return top-k chunks

- Inject into Prompt Template: Format context + question → send to Claude / Titan / Llama 2

- Track Performance: Use metrics like retrieval precision, token usage, hallucination rate
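Here is a rough sketch of the ingest side of that pipeline plus one metric. It assumes the Titan text embedding model ID `amazon.titan-embed-text-v1` and its `inputText` / `embedding` JSON shape; verify both against the Bedrock documentation for your region. The helper names, the word-based chunker, and the record layout are all hypothetical.

```python
import json

# Assumed model ID; confirm it is enabled in your account and region.
TITAN_MODEL_ID = "amazon.titan-embed-text-v1"


def embed_with_titan(client, text, model_id=TITAN_MODEL_ID):
    """Call Bedrock InvokeModel and return the embedding as a list of floats."""
    response = client.invoke_model(
        modelId=model_id,
        contentType="application/json",
        accept="application/json",
        body=json.dumps({"inputText": text}),
    )
    return json.loads(response["body"].read())["embedding"]


def chunk_text(text, max_words=50):
    """Naive chunker: split on whitespace into groups of max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def build_records(client, doc_id, text, tags=(), max_words=50):
    """Produce one storable record per chunk: metadata, text, and embedding."""
    records = []
    for n, chunk in enumerate(chunk_text(text, max_words)):
        records.append({
            "doc_id": doc_id,
            "chunk": n,
            "tags": list(tags),
            "text": chunk,
            "embedding": embed_with_titan(client, chunk),
        })
    return records


def precision_at_k(retrieved_ids, relevant_ids, k):
    """Fraction of the top-k retrieved IDs that are actually relevant."""
    top = retrieved_ids[:k]
    if not top:
        return 0.0
    return sum(1 for r in top if r in relevant_ids) / len(top)
```

In a real run, `client` would be `boto3.client("bedrock-runtime")`, and each record would be indexed into OpenSearch or inserted into a pgvector table. Passing the client in as an argument also makes it easy to substitute a fake one in tests.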

Security & Scaling Tips

- Use IAM roles + VPC access policies for OpenSearch

- Normalize vectors to unit L2 norm before storage, and compress them if index size becomes a concern

- Batch embedding requests to cut round trips and API cost

- Add a cache layer (e.g., Redis) for high-traffic queries
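Three of these tips are easy to sketch in plain Python. The example below is illustrative only: `fake_embed` is a hypothetical stand-in for a paid embedding API, and `functools.lru_cache` is an in-process substitute for a shared cache such as Redis.

```python
import math
from functools import lru_cache


def l2_normalize(vector):
    """Scale a vector to unit length; zero vectors are returned unchanged."""
    norm = math.sqrt(sum(x * x for x in vector))
    if norm == 0:
        return list(vector)
    return [x / norm for x in vector]


def batched(items, batch_size):
    """Yield consecutive slices of items, each at most batch_size long."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def fake_embed(text):
    """Hypothetical embedding call; counts invocations to show cache hits."""
    fake_embed.calls += 1
    return (float(len(text)), 1.0)


fake_embed.calls = 0


@lru_cache(maxsize=1024)
def cached_embedding(text):
    """Embed and normalize a query once; repeated queries skip the API call."""
    return tuple(l2_normalize(fake_embed(text)))


unit = l2_normalize([3.0, 4.0])
batches = list(batched(["a", "b", "c", "d", "e"], 2))

cached_embedding("what is rag?")
cached_embedding("what is rag?")  # served from the cache
print(unit, batches, fake_embed.calls)
```

Once vectors are unit length, cosine similarity and dot product produce the same ranking, which is one reason normalizing at write time is convenient.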

Conclusion

If your GenAI app relies on your data, you need a vector store.

Vector databases aren’t just infrastructure; they’re how your LLM gets context and delivers useful, non-hallucinated answers.

And with AWS-native tools like OpenSearch and pgvector on RDS or Aurora, you can build this memory layer without reinventing your stack.