Introduction

Large language models are brilliant, but they only know what was in their training data.

They can’t answer questions about your private docs or industry-specific data unless you fine-tune them or… use RAG.

RAG (Retrieval-Augmented Generation) is often the fastest, lowest-risk way to make GenAI models useful for your data, without retraining anything.

In this post, we’ll explain how to build a RAG pipeline using AWS-native tools and how to avoid the most common mistakes.

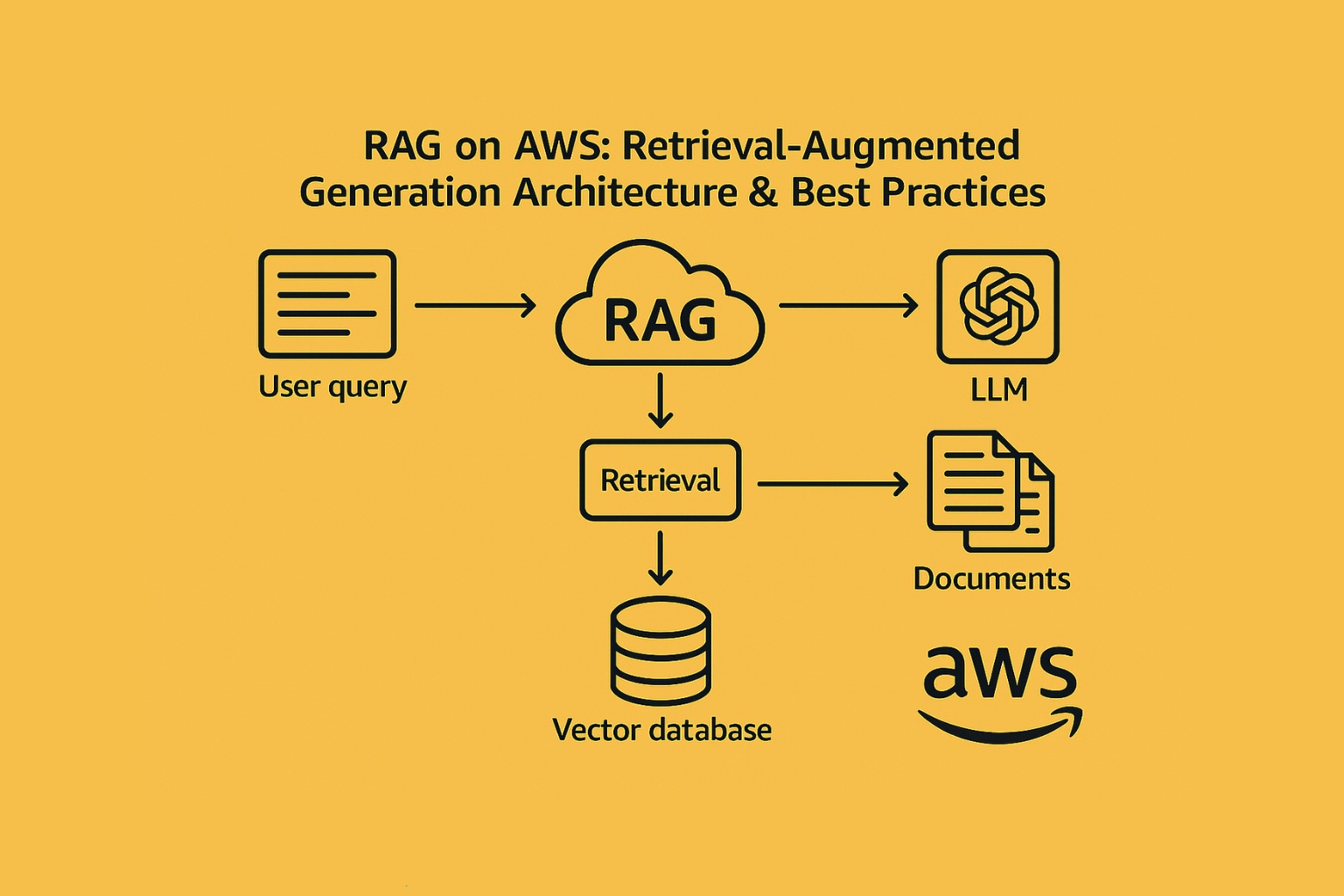

What Is RAG?

Retrieval-Augmented Generation = Search + Generation

Instead of relying on the model’s memory, you retrieve relevant chunks of your data and feed them into the prompt at runtime.
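To make "retrieve relevant chunks and feed them into the prompt" concrete, here is a minimal sketch. It assumes the chunks have already been embedded; the three-dimensional vectors, the sample sentences, and the helper names `cosine_similarity` and `top_k` are made up for illustration. A real embedding model returns vectors with hundreds or thousands of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def top_k(query_vec, indexed_chunks, k=2):
    """Return the k chunk texts whose vectors are most similar to the query."""
    scored = [(cosine_similarity(query_vec, vec), text) for text, vec in indexed_chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]

# Hypothetical pre-embedded chunks (toy vectors, not real model output).
chunks = [
    ("Employees accrue 1.5 vacation days per month.", [0.9, 0.1, 0.0]),
    ("Expense reports are due within 30 days.", [0.1, 0.9, 0.0]),
    ("Unused vacation days roll over once.", [0.8, 0.2, 0.1]),
]
question_vec = [1.0, 0.0, 0.0]  # pretend embedding of the user's question

context = top_k(question_vec, chunks, k=2)
prompt = "Context:\n" + "\n".join(context) + "\n\nQuestion: How does vacation accrue?"
print(prompt)
```

The model never sees the expense-report chunk, because it scores far lower than the two vacation chunks.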

RAG allows you to:

- Use smaller, cheaper models

- Update your “knowledge base” without retraining

- Reduce hallucinations by grounding answers in real data

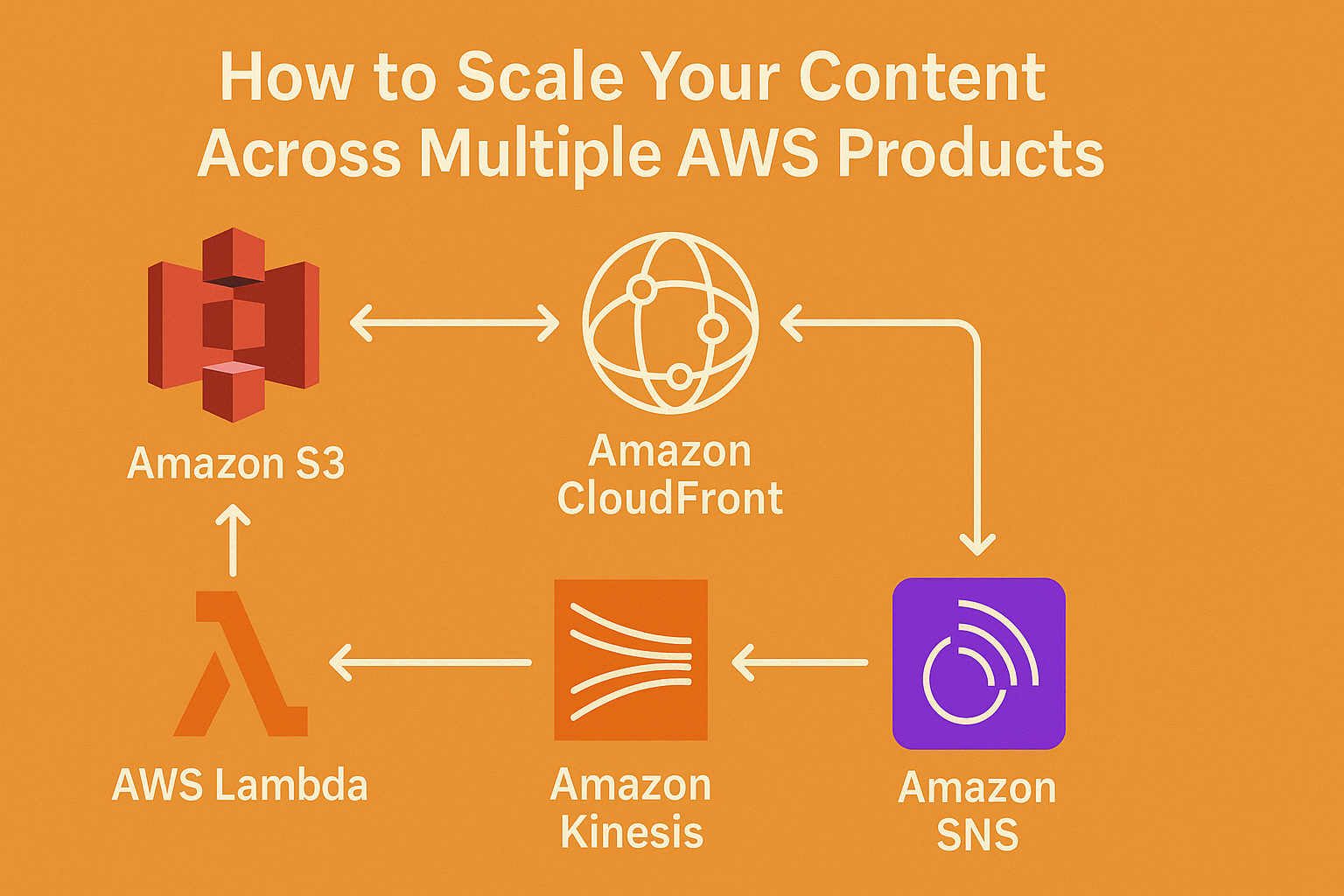

Core Components of a RAG System on AWS

| Step | AWS Service |
|---|---|
| 1. Store Documents | Amazon S3 |
| 2. Chunk & Embed | Titan Embeddings (via Bedrock) or SageMaker |
| 3. Vector DB | Amazon OpenSearch Service (k-NN) or RDS for PostgreSQL + pgvector |
| 4. Query + Retrieve | Lambda, optionally with LangChain |
| 5. Generate Answer | Amazon Bedrock (Claude, Titan, etc.) |
| 6. Output to UI | API Gateway, AppSync, or Lex |
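As a rough illustration of the embedding step in the table, the sketch below wraps a Bedrock `invoke_model` call for a Titan text embedding model. The model ID `amazon.titan-embed-text-v1` and the `inputText` / `embedding` field names reflect our understanding of that model's request and response format, so check them against the current Bedrock documentation for the model version you use. `FakeBedrockRuntime` is a made-up stand-in so the example runs offline.

```python
import io
import json

# Assumption: Titan text embeddings model ID; other versions use different IDs.
TITAN_EMBED_MODEL_ID = "amazon.titan-embed-text-v1"

def embed_text(bedrock_runtime, text, model_id=TITAN_EMBED_MODEL_ID):
    """Ask an embedding model on Bedrock for the vector of one piece of text."""
    response = bedrock_runtime.invoke_model(
        modelId=model_id,
        contentType="application/json",
        accept="application/json",
        body=json.dumps({"inputText": text}),
    )
    payload = json.loads(response["body"].read())
    return payload["embedding"]

class FakeBedrockRuntime:
    """Offline stand-in that mimics the shape of the real response (hypothetical)."""

    def invoke_model(self, modelId, contentType, accept, body):
        text = json.loads(body)["inputText"]
        fake = {"embedding": [float(len(text)), 0.0, 1.0]}
        return {"body": io.BytesIO(json.dumps(fake).encode("utf-8"))}

vector = embed_text(FakeBedrockRuntime(), "parental leave policy")
print(vector)
```

With real credentials you would pass `boto3.client("bedrock-runtime")` instead of the fake client. Injecting the client also keeps the function easy to unit test.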

RAG Pipeline Example

Let’s say you want to build a policy assistant that can summarize and answer questions about internal HR policies.

Architecture:

- Upload docs → S3

- Extract + chunk → Lambda

- Embed chunks → Titan Embeddings

- Store in OpenSearch

- User sends question → Lambda embeds it → retrieves top 5 similar chunks

- Chunks injected into prompt → Bedrock (Claude/Titan) generates answer

- Answer returned via REST or chatbot

No model training. Just smart prompt augmentation.
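The flow above can be sketched end to end without any AWS dependency. Everything here is a stand-in: `toy_embed` replaces Titan Embeddings with a bag-of-words count, the in-memory `index` list replaces OpenSearch, and `echo_generate` replaces the Bedrock model call. The class and function names are ours, not part of any AWS SDK.

```python
from collections import Counter

def toy_embed(text):
    """Stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(word.strip(".,?!").lower() for word in text.split())

def similarity(a, b):
    """Dot product of two bag-of-words vectors (shared-word overlap)."""
    return sum(a[word] * b[word] for word in a if word in b)

class PolicyAssistant:
    def __init__(self, embed, generate, top_k=5):
        self.embed = embed        # text -> vector
        self.generate = generate  # prompt -> answer
        self.top_k = top_k
        self.index = []           # stand-in vector store: (chunk, vector) pairs

    def ingest(self, chunks):
        """Embed each chunk and store it alongside its vector."""
        for chunk in chunks:
            self.index.append((chunk, self.embed(chunk)))

    def ask(self, question):
        """Embed the question, retrieve the closest chunks, and generate an answer."""
        query_vec = self.embed(question)
        ranked = sorted(
            self.index, key=lambda item: similarity(query_vec, item[1]), reverse=True
        )
        context = [chunk for chunk, _ in ranked[: self.top_k]]
        prompt = "Context:\n" + "\n".join(context) + f"\n\nQuestion: {question}"
        return self.generate(prompt), context

def echo_generate(prompt):
    """Stand-in for the model call: returns the top-ranked context line."""
    return prompt.splitlines()[1]

assistant = PolicyAssistant(toy_embed, echo_generate, top_k=2)
assistant.ingest([
    "Remote work requires manager approval.",
    "Vacation requests need two weeks notice.",
    "Expense reports are due monthly.",
])
answer, context = assistant.ask("How much notice do vacation requests need?")
print(answer)
```

Swapping the two injected functions for real embedding and generation calls leaves the control flow unchanged, which is the point of the pattern.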

Best Practices for Building RAG on AWS

1. Use Metadata in Vector DB

- Add tags like doc_id, section, source

- Helps with filtering and audit trails
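A small sketch of how metadata might travel with each chunk follows. It filters in memory for simplicity; with OpenSearch or pgvector you would normally push the filter into the query itself. The `Chunk` class, the helper names, and the sample documents are all hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class Chunk:
    text: str
    score: float
    metadata: dict = field(default_factory=dict)

def filter_by_metadata(chunks, **required):
    """Keep only chunks whose metadata matches every required key/value pair."""
    return [
        chunk for chunk in chunks
        if all(chunk.metadata.get(key) == value for key, value in required.items())
    ]

def format_citation(chunk):
    """Build a human-readable source line for audit trails or UI display."""
    meta = chunk.metadata
    return (
        f"{meta.get('source', 'unknown source')}, "
        f"{meta.get('doc_id', 'unknown doc')}, "
        f"section {meta.get('section', 'n/a')}"
    )

results = [
    Chunk("Parental leave is 16 weeks.", 0.91,
          {"doc_id": "hr-007", "section": "4.2", "source": "leave-policy.pdf"}),
    Chunk("Travel must be booked 14 days ahead.", 0.88,
          {"doc_id": "fin-003", "section": "2.1", "source": "travel-policy.pdf"}),
]

hr_only = filter_by_metadata(results, source="leave-policy.pdf")
citation = format_citation(hr_only[0])
print(citation)
```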

2. Keep Chunks ~200–500 tokens

- Too long = wasted tokens

- Too short = no context

- Aim for chunks that each cover one complete idea, such as a section or a few related paragraphs
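Here is one hedged way to enforce a chunk size. It counts whitespace-separated words as a rough proxy for tokens (a real tokenizer will give different counts), and the small overlap between neighbouring chunks is our own addition, a common trick to avoid cutting an idea in half.

```python
def chunk_words(text, max_words=300, overlap=30):
    """Split text into windows of at most max_words, sharing `overlap` words."""
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reached the end of the text
    return chunks

sample = " ".join(f"w{i}" for i in range(10))
pieces = chunk_words(sample, max_words=4, overlap=1)
print(pieces)
```

Each chunk starts with the last word of the previous one, so a sentence that straddles a boundary is still partly visible from both sides.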

3. Preprocess With Purpose

- Remove headers, boilerplate, repeated phrases

- Use tools like LangChain’s RecursiveCharacterTextSplitter
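As an illustration of removing boilerplate before chunking, the sketch below drops any line that repeats across most pages of a document, which is typical of running headers and footers. The 60% threshold and the function name are arbitrary choices for this example, not a recommendation from any library.

```python
from collections import Counter

def strip_repeated_lines(pages, min_fraction=0.6):
    """Remove lines that appear on at least min_fraction of pages (min. 2 pages)."""
    counts = Counter()
    for page in pages:
        # Count each distinct line once per page.
        counts.update({line.strip() for line in page.splitlines() if line.strip()})
    threshold = max(2, int(len(pages) * min_fraction))
    boilerplate = {line for line, seen in counts.items() if seen >= threshold}
    cleaned = []
    for page in pages:
        kept = [
            line for line in page.splitlines()
            if line.strip() and line.strip() not in boilerplate
        ]
        cleaned.append("\n".join(kept))
    return cleaned

pages = [
    "ACME HR Handbook\nVacation accrues monthly.\nConfidential",
    "ACME HR Handbook\nExpenses need receipts.\nConfidential",
    "ACME HR Handbook\nRemote work needs approval.\nConfidential",
]
cleaned = strip_repeated_lines(pages)
print(cleaned)
```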

4. Use Prompt Templates with Guardrails

Add instructions like:

“Only answer using the provided documents. If unsure, say you don’t know.”

Combine this with Guardrails for Amazon Bedrock to filter unwanted topics and control tone and output.
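A prompt template along these lines might look like the sketch below. The wording of the template, the numbered-document layout, and the `build_prompt` helper are illustrative assumptions; tune them for your model and use case.

```python
GROUNDED_TEMPLATE = """You are an HR policy assistant.
Only answer using the provided documents. If unsure, say you don't know.

Documents:
{documents}

Question: {question}
Answer:"""

def build_prompt(question, chunks):
    """Number each retrieved chunk and slot it into the grounded template."""
    documents = "\n".join(
        f"[{number}] {chunk}" for number, chunk in enumerate(chunks, start=1)
    )
    return GROUNDED_TEMPLATE.format(documents=documents, question=question)

prompt = build_prompt(
    "How many weeks of parental leave do we offer?",
    ["Parental leave is 16 weeks.", "Leave requests go through the HR portal."],
)
print(prompt)
```

Numbering the documents also gives the model something to cite, which makes it easier to show sources in the final answer.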

5. Log & Evaluate

Track:

- Retrieval accuracy (was the answer in the context?)

- Response quality (helpful, safe, accurate?)

- Cost per query

Use CloudWatch and Bedrock model invocation logging to monitor these.
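Two of these metrics are simple enough to compute from your own logs. The sketch below assumes each log record stores the retrieved chunks plus a phrase you expect a correct context to contain. The per-1,000-token prices are placeholders, not real Bedrock pricing.

```python
def retrieval_hit_rate(records):
    """Fraction of queries where the expected phrase appears in a retrieved chunk."""
    if not records:
        return 0.0
    hits = sum(
        1 for record in records
        if any(record["expected"].lower() in chunk.lower() for chunk in record["retrieved"])
    )
    return hits / len(records)

def cost_per_query(input_tokens, output_tokens, price_in_per_1k, price_out_per_1k):
    """Estimated model cost for one query, given per-1,000-token prices."""
    return input_tokens / 1000 * price_in_per_1k + output_tokens / 1000 * price_out_per_1k

# Hypothetical evaluation log.
log = [
    {"expected": "16 weeks", "retrieved": ["Parental leave is 16 weeks."]},
    {"expected": "30 days", "retrieved": ["Travel must be booked 14 days ahead."]},
]
hit_rate = retrieval_hit_rate(log)

# Placeholder prices; look up the current rates for the model you use.
estimated_cost = cost_per_query(2000, 500, price_in_per_1k=0.003, price_out_per_1k=0.015)
print(hit_rate, round(estimated_cost, 4))
```

A low hit rate points at chunking or retrieval, not at the generation model, so it is worth tracking separately from answer quality.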

Common RAG Pitfalls to Avoid

- Injecting irrelevant or low-quality chunks

- Not including source metadata in output

- Using vector search without re-ranking

- Writing prompts that are too vague (which invites hallucination)

- Underestimating context window/token limits
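Two of these pitfalls, irrelevant chunks and blown token limits, can be guarded against at the moment you assemble the context. The sketch below keeps the highest-scoring chunks that clear a minimum score and fit a token budget. Word counts stand in for real token counts, and the threshold and budget values are arbitrary examples.

```python
def pack_context(scored_chunks, max_tokens, min_score=0.0,
                 count_tokens=lambda text: len(text.split())):
    """Greedily pick the best-scoring chunks that fit within max_tokens."""
    selected, used = [], 0
    ranked = sorted(scored_chunks, key=lambda pair: pair[0], reverse=True)
    for score, text in ranked:
        if score < min_score:
            break  # everything after this is even less relevant
        cost = count_tokens(text)
        if used + cost > max_tokens:
            continue  # too big for the remaining budget; try a smaller chunk
        selected.append(text)
        used += cost
    return selected, used

scored = [
    (0.90, "a b c d"),
    (0.80, "e f g h i j"),
    (0.75, "k l"),
    (0.20, "m"),
]
selected, used = pack_context(scored, max_tokens=7, min_score=0.5)
print(selected, used)
```

The second chunk is skipped because it would exceed the budget, and the last one is dropped for low relevance even though it would fit.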

Bonus: Tools to Accelerate RAG on AWS

- LangChain + Bedrock

- Agents for Amazon Bedrock

- Haystack for pipeline orchestration

- SageMaker Ground Truth for data labeling

- OpenSearch ML Inference + scoring

Conclusion

RAG is the bridge between foundation models and your data.

And on AWS, it’s easier than ever to build one that is secure, scalable, and cost-efficient.

Want GenAI that actually answers questions?

Build a RAG pipeline and stop hallucinating.