How a multi‑year, multi‑billion‑dollar cloud partnership signals a new era in frontier AI

Setting the scene

In early November 2025, OpenAI and AWS announced a major multi‑year compute‑infrastructure partnership, reportedly worth around US $38 billion.

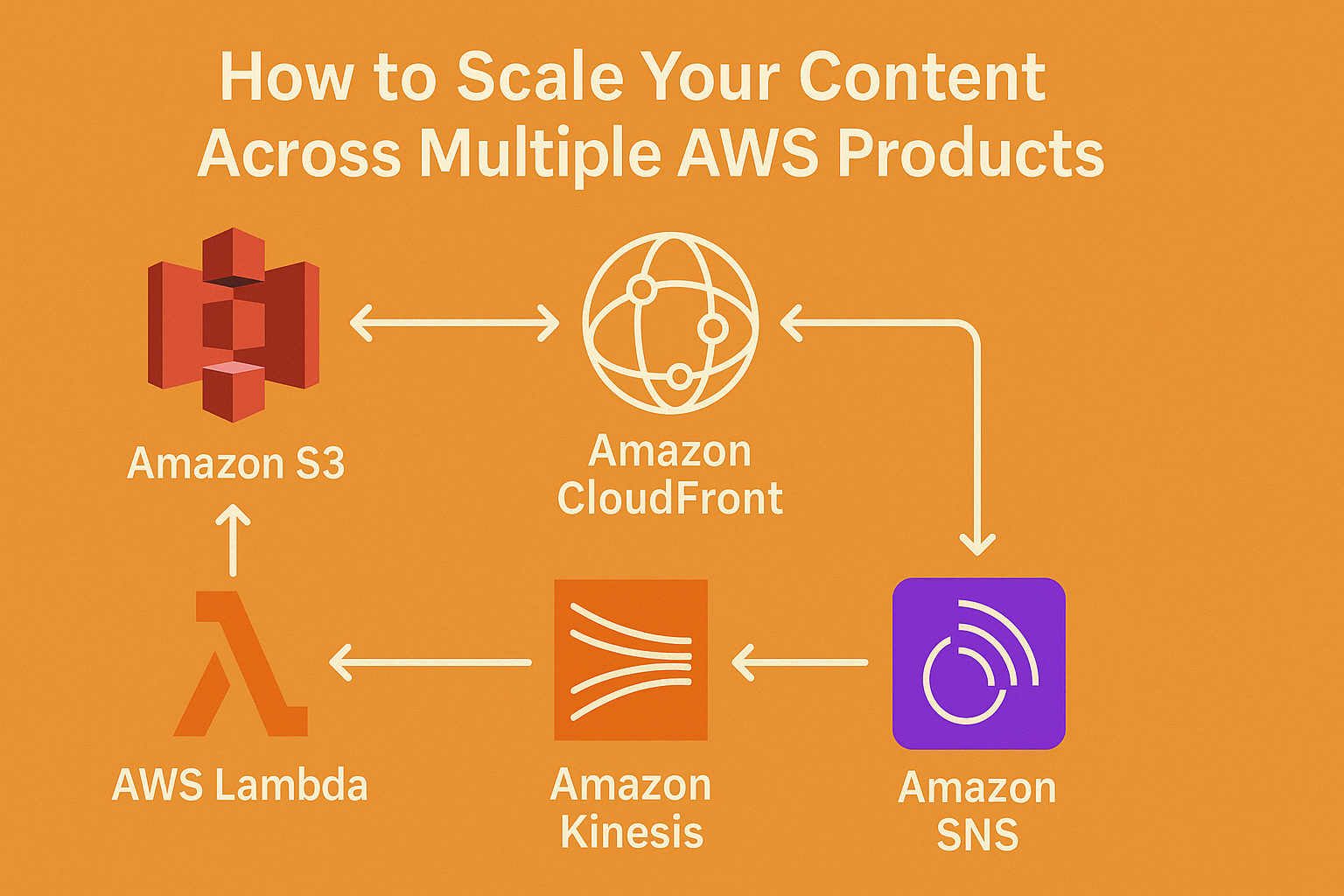

Under the agreement, OpenAI gains immediate access to AWS’s high‑end infrastructure, including hundreds of thousands of NVIDIA GPUs and the ability to scale to tens of millions of CPUs, to support training and inference for its large‑scale AI models.

The collaboration is also OpenAI’s first major infrastructure deal with AWS, and a further step away from its earlier deep reliance on Microsoft’s Azure cloud platform.

From AWS’s perspective, the partnership strengthens its position in the AI infrastructure arms race against rival cloud and AI providers.

Why this deal matters

1. Compute becomes the cornerstone for frontier AI

As OpenAI CEO Sam Altman put it: “Scaling frontier AI requires massive, reliable compute.”

In practical terms:

- OpenAI needs enormous clusters of GPUs/CPUs to train ever‑larger models, to serve growing user demand, and to deploy inference at scale.

- AWS has been investing heavily in infrastructure to support this kind of workload: hybrid CPU/GPU clusters, networking at scale, and specialised chips/architectures.

- The deal therefore eases a key bottleneck for OpenAI and gives AWS a flagship partner that validates its infrastructure for cutting‑edge AI.

2. Strategic shift in cloud vendor relationships

Historically, OpenAI’s compute was heavily tied to Microsoft Azure. The move to AWS indicates that:

- OpenAI is diversifying its infrastructure base, reducing the risk of single‑supplier dependence.

- AWS is being accepted as a top‑tier platform for frontier AI workloads, not just enterprise cloud.

- Competition among cloud providers (AWS, Microsoft Azure, Google Cloud, Oracle and others) to win AI‑model training and deployment business is intensifying.

3. Implications for enterprise AI and ecosystem

For enterprises and developers, this partnership has several ripple effects:

- AWS customers may gain better access, directly or indirectly, to advanced OpenAI models running on AWS infrastructure, with potentially smoother integration into existing AWS services.

- It strengthens AWS’s position in offering ‘AI as a platform’: not just infrastructure, but models, services and deployment together.

- It may accelerate adoption of generative AI, agentic systems (AI that can perform multi‑step tasks) and domain‑specific large language models (LLMs) by lowering infrastructure barriers.

Key details of the deal

- Multi‑year agreement, valued at ~$38 billion.

- Immediate access to AWS’s infrastructure: hundreds of thousands of NVIDIA GPUs, large CPU fleets.

- Deployment ramp‑up targeted for completion by the end of 2026, with room to expand further into 2027.

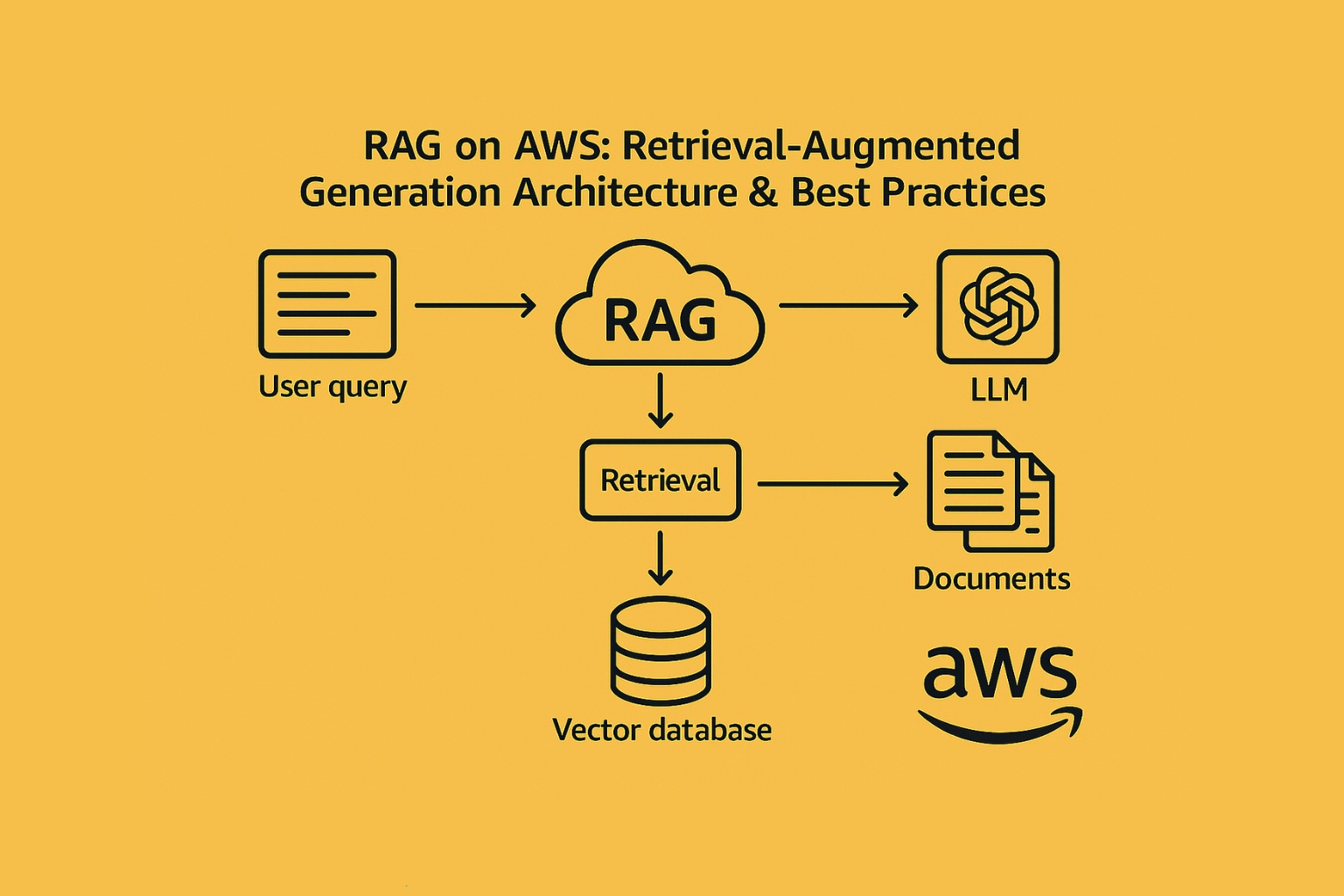

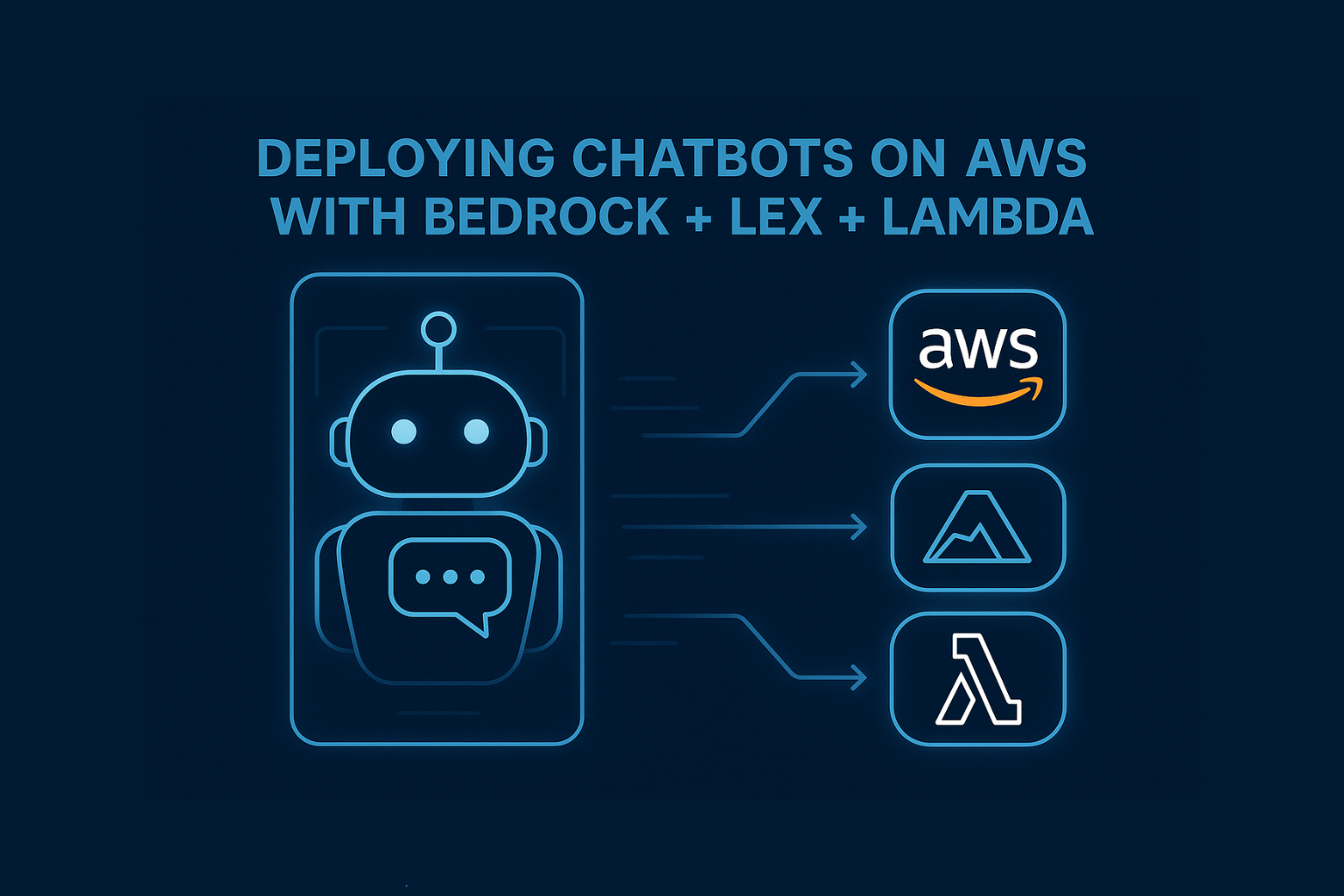

- Model availability for enterprises through AWS services: OpenAI models offered within AWS’s AI platform stack (e.g., via Amazon Bedrock / SageMaker).

- Infrastructure challenges to be addressed: large‑scale clustering, network bandwidth, power and cooling, reliability and security, all areas AWS emphasizes in its AI infrastructure writing.

What this means for different stakeholders

For OpenAI

- Scalability: With AWS’s massive capacity, OpenAI can train larger, more complex models, handle increased inference loads, and deploy globally with improved responsiveness.

- Flexibility: Diversifying compute partners mitigates supply‑chain risk and vendor lock‑in, and may give OpenAI more leverage in future contract negotiations.

- Market positioning: Aligning with AWS strengthens OpenAI’s credibility as a platform provider for enterprises, not only as a model maker.

For AWS

- Validation of AI leadership: Landing a high‑profile partner like OpenAI showcases AWS’s infrastructure as competitive for frontier AI.

- New revenue stream: Beyond standard cloud services, AI infrastructure deals of this size generate long‑term revenue and deepen customer commitment.

- Ecosystem strengthening: By integrating OpenAI models into its stack, AWS can further lock in enterprise customers, offer value‑added services, and compete more effectively with Microsoft Azure and Google Cloud.

For enterprises / developers

- Access to advanced models and infrastructure: With OpenAI models available via AWS, companies already using AWS will find integration easier.

- Lower barriers to entry: Access to top‑tier compute and models means smaller firms and teams can build more advanced AI solutions without building infrastructure from scratch.

- Competitive differentiation: Organizations that adopt early may gain advantages via agentic AI workflows, complex LLM‑based automation, and domain‑specific AI.

For the cloud & AI ecosystem

- Cloud providers are being pushed to scale infrastructure, invest in specialised hardware (GPUs, AI accelerators), and optimise networking and power. AWS’s blog post emphasises this infrastructure transformation.

- The compute “arms race” is driving ever‑larger investments in data centres, chips, cooling and energy; this deal is one part of that broader wave of AI infrastructure spending.

- Multi‑cloud or heterogeneous‑cloud strategies become more viable: AI model providers and enterprises may span multiple clouds, increasing flexibility and competition.

Challenges & Considerations

- Cost and economics: $38 billion is a massive commitment. The economics of training, fine‑tuning, and serving large models remain challenging. Return on investment will matter.

- Supply chain constraints: GPUs, accelerators, network fabric, power and cooling are all constrained resources, and scaling to hundreds of thousands of GPUs is non‑trivial.

- Operational complexity: Large‑scale AI clusters involve complex orchestration, fault tolerance, data transfer and security concerns (especially for enterprise and regulated sectors).

- Model risk: Even with infrastructure, success depends on model innovation, usability, data quality, fine‑tuning, and deployment. Infrastructure is necessary but not sufficient.

- Competitive response: Other cloud providers (Microsoft, Google, Oracle) will respond aggressively; enterprises may face a fast‑moving vendor landscape.

- Regulatory & ethical implications: As infrastructure scales, so do issues around model transparency, bias, safety, energy use and geopolitical risk. Partnerships of this scale attract regulatory scrutiny.

Why India / Asia‑Pacific should pay attention

For teams based in India, such as those in Mohali (Punjab), and across the broader Asia‑Pacific technology ecosystem, a few regional angles are worth noting:

- Enterprises in India and Asia that use AWS and are building or adopting generative or agentic AI stand to benefit from this infrastructure wave: cutting‑edge models and services may become more accessible and cost‑efficient.

- Cloud‑driven AI growth may spur local innovation: startups, research labs and government initiatives can leverage global infrastructure partnerships, reducing the need to build everything in‑house.

- Talent and training demand will rise: as infrastructure becomes more available, organisations will need data scientists, ML engineers and AI operations specialists, creating opportunities for the regional workforce.

- Energy and data‑centre footprint: large AI clusters consume significant power and may drive investment in regional data centres, regulatory frameworks and sustainability initiatives. India may need to prepare in terms of policy, infrastructure and skill development.

What to do?

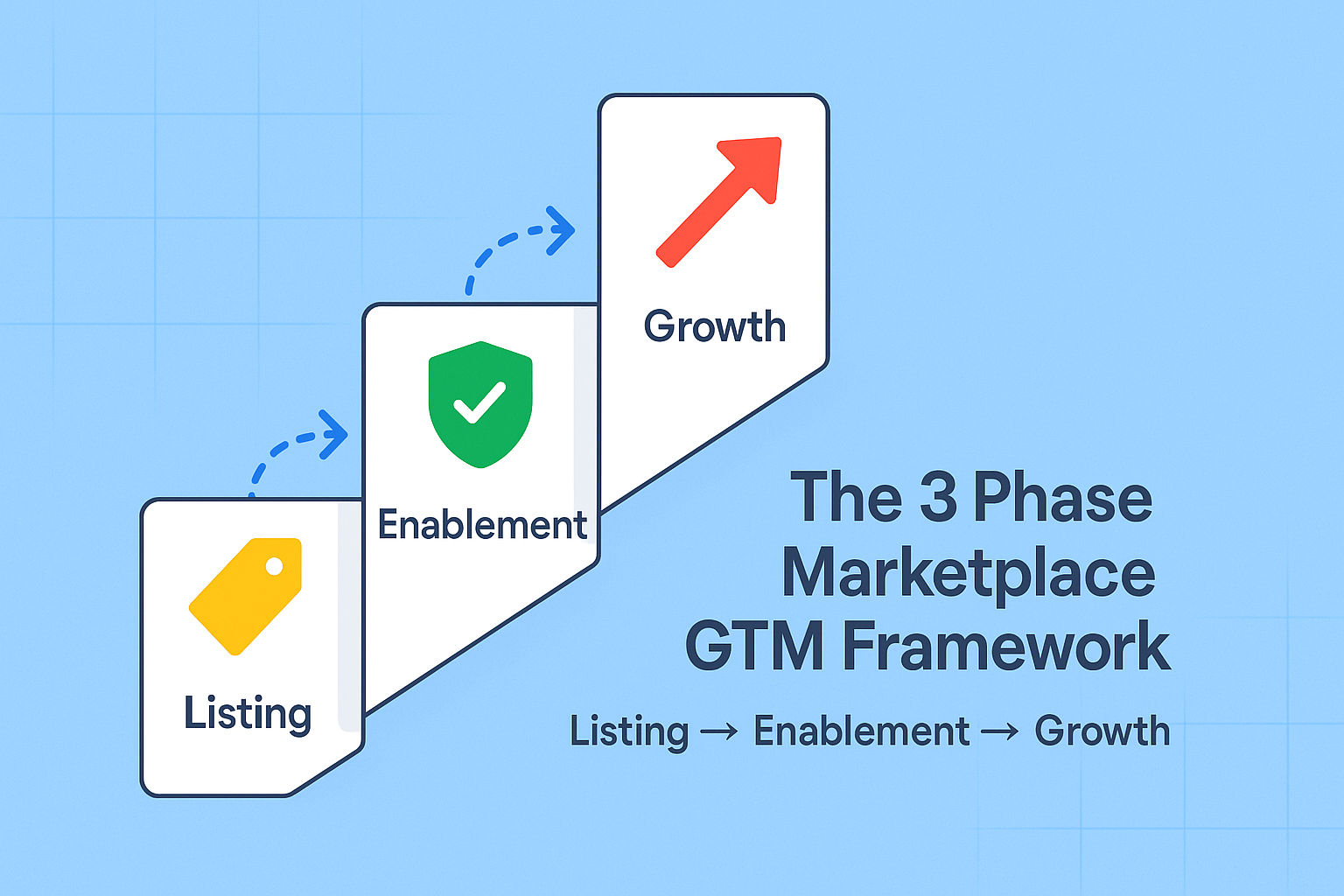

If you’re an enterprise leader, AI practitioner or decision‑maker, here are a few actionable steps:

- Audit your cloud/AI infrastructure strategy: Are you tied to a single vendor? Are you prepared for large‑scale model training & inference?

- Pilot advanced AI models: With OpenAI models becoming accessible via AWS, consider pilot projects that leverage these assets (e.g., agentic workflows, generative use‑cases) to test operational readiness.

- Upskill your team: Invest in training for AI infrastructure, MLOps, cost optimization, deployment, and security. Infrastructure scale requires more than just models.

- Stay vendor‑agnostic where possible: The landscape is evolving fast; prepare for multi‑cloud or hybrid‑cloud options and limit your exposure to vendor lock‑in.

- Monitor regulatory & sustainability trends: Large‑scale AI compute has implications for energy, data localization and governance; incorporate these into your strategy early.
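To make the vendor‑agnostic advice a little more concrete, here is a minimal sketch of one common pattern: application code talks to a shared interface, each cloud or model vendor sits behind its own adapter, and requests fail over to the next provider when one is unavailable. Everything here is hypothetical and for illustration only: `ModelProvider`, `FakeProvider`, `complete` and `complete_with_failover` are not any vendor’s real API, and a production adapter would wrap the relevant vendor SDK instead of returning a canned string.

```python
from abc import ABC, abstractmethod


class ProviderUnavailable(Exception):
    """Raised by an adapter when its backend cannot serve the request."""


class ModelProvider(ABC):
    """Hypothetical common interface that every cloud/model adapter implements."""

    name: str

    @abstractmethod
    def complete(self, prompt: str) -> str:
        """Return a completion for the prompt, or raise ProviderUnavailable."""


class FakeProvider(ModelProvider):
    """Hypothetical stand-in adapter; a real one would call a vendor SDK here."""

    def __init__(self, name: str, healthy: bool = True) -> None:
        self.name = name
        self.healthy = healthy

    def complete(self, prompt: str) -> str:
        if not self.healthy:
            raise ProviderUnavailable(f"{self.name} is unavailable")
        return f"[{self.name}] response to: {prompt}"


def complete_with_failover(providers: list[ModelProvider], prompt: str) -> str:
    """Try providers in priority order and return the first successful answer."""
    errors = []
    for provider in providers:
        try:
            return provider.complete(prompt)
        except ProviderUnavailable as exc:
            # Record the failure and fall through to the next provider in the list.
            errors.append(str(exc))
    raise RuntimeError("all providers failed: " + "; ".join(errors))


if __name__ == "__main__":
    providers = [FakeProvider("cloud-a", healthy=False), FakeProvider("cloud-b")]
    print(complete_with_failover(providers, "Summarise our Q3 usage"))
    # prints: [cloud-b] response to: Summarise our Q3 usage
```

Because vendor‑specific code lives only inside adapters, adding or swapping a cloud means writing one new class rather than touching every call site, which is the kind of flexibility the checklist argues for.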

Takeaway

The AWS–OpenAI partnership is more than a headline; it’s a signal that infrastructure is now the battleground for AI leadership.

As frontier models scale, access to compute becomes the new currency of innovation. For builders, founders, and enterprises, this means one thing:

The future of AI isn’t just about who has the best model; it’s about who can scale it, secure it, and deploy it fast.

If your infrastructure isn’t ready, your roadmap isn’t either.