Introduction

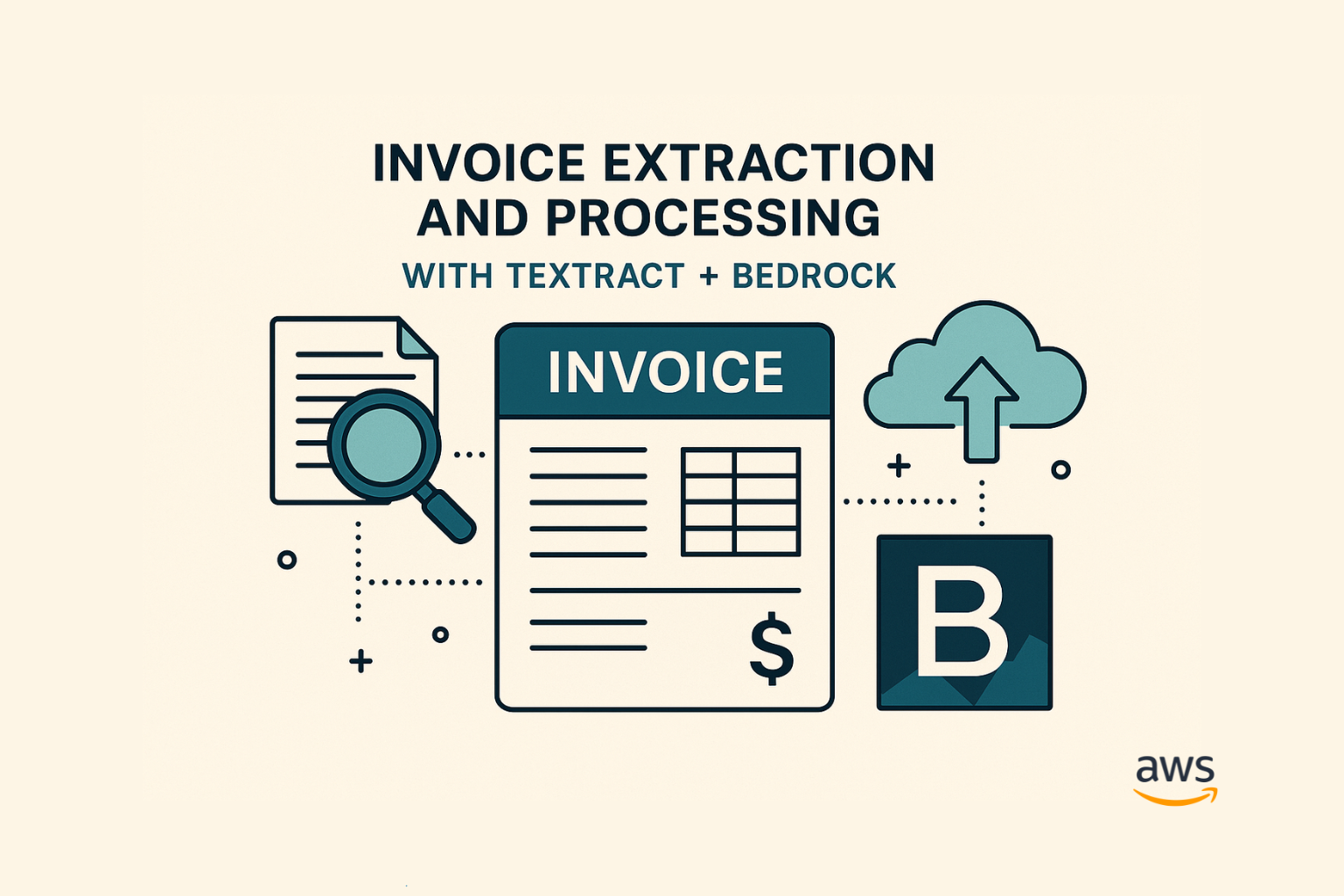

As GenAI moves from demos to production, security and compliance move from afterthought to foundation.

Whether you’re building a chatbot, document processor, or AI agent, trust matters.

And in regulated industries like finance, healthcare, or the public sector, it’s non-negotiable.

In this post, we’ll walk through the key security and compliance controls for building GenAI apps on AWS the right way.

Why GenAI Security Is Different

GenAI apps bring new risk vectors:

- Prompt injection

- Model leakage

- PII or IP embedded in outputs

- Sensitive data in logs

- External model APIs bypassing cloud controls

The fix: Design your AWS architecture with zero trust, least privilege, and observability in mind.

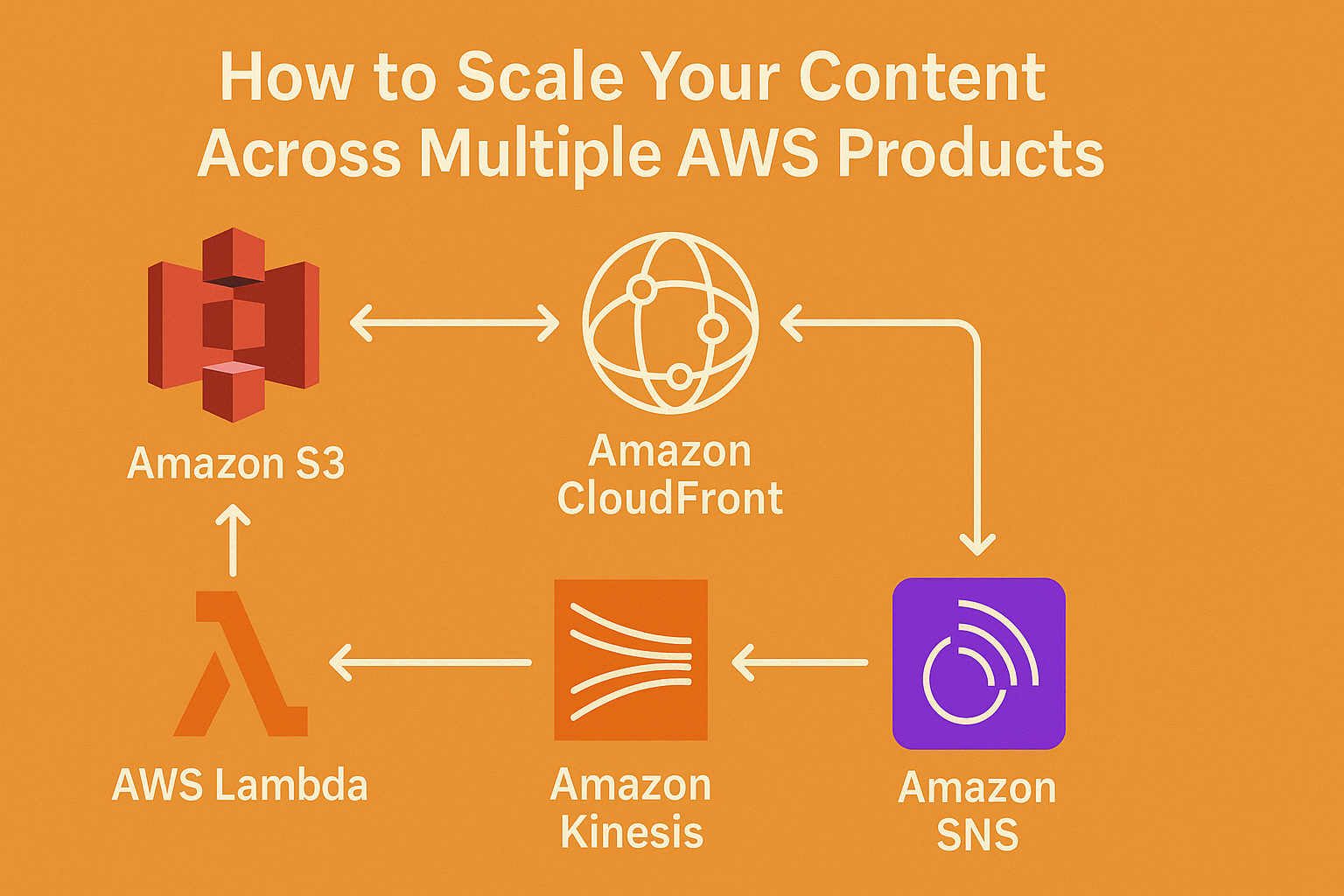

Core Security Components for GenAI Apps on AWS

| Component | Purpose |
|---|---|
| IAM Roles & Policies | Enforce least-privilege access to Bedrock, S3, OpenSearch, etc. |
| VPC Endpoints (PrivateLink) | Keep model invocations on the AWS network instead of the public internet |
| KMS Encryption | Encrypt data at rest with keys you control (TLS protects data in transit) |
| CloudWatch + GuardDuty | Monitor prompt inputs, responses, and unusual activity |
| Bedrock Guardrails | Enforce safety policies on inputs and outputs (content filters, denied topics, sensitive-information filters) |
| Data Loss Prevention (Macie) | Detect PII/PHI in prompt logs or retrieved content stored in S3 |

Compliance-Friendly Bedrock Features

| Feature | Benefit |
|---|---|
| No model training on inputs | Bedrock doesn’t use your prompts or completions to train base models or share them with model providers |
| SOC 2, HIPAA, GDPR alignment | Bedrock is hosted within the AWS compliance boundary; confirm which programs cover it in your Region |
| Model invocation logs | Can be routed to CloudWatch Logs or S3 for full auditing |
| Fine-grained IAM controls | Decide who can invoke which model (e.g., Titan only, not Claude) |
| VPC support | Reach Bedrock through PrivateLink interface endpoints, isolated from the public internet |
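
To make the "Titan only, not Claude" idea concrete, here is a minimal sketch of an IAM policy document built in Python. The Region and model ID are example values chosen for illustration, not a recommendation; the policy allows invocation of that single foundation model and nothing else.

```python
import json

# Example values for illustration only; substitute your own Region and model ID.
REGION = "us-east-1"
ALLOWED_MODEL_ID = "amazon.titan-text-express-v1"


def build_invoke_policy(region: str, model_id: str) -> dict:
    """Return an IAM policy document that allows invoking one foundation model."""
    # Foundation-model ARNs leave the account field empty.
    model_arn = f"arn:aws:bedrock:{region}::foundation-model/{model_id}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "InvokeApprovedModelOnly",
                "Effect": "Allow",
                "Action": [
                    "bedrock:InvokeModel",
                    "bedrock:InvokeModelWithResponseStream",
                ],
                "Resource": model_arn,
            }
        ],
    }


policy = build_invoke_policy(REGION, ALLOWED_MODEL_ID)
print(json.dumps(policy, indent=2))
```

Because IAM denies by default, any model not listed under `Resource` stays unavailable to the role this policy is attached to.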

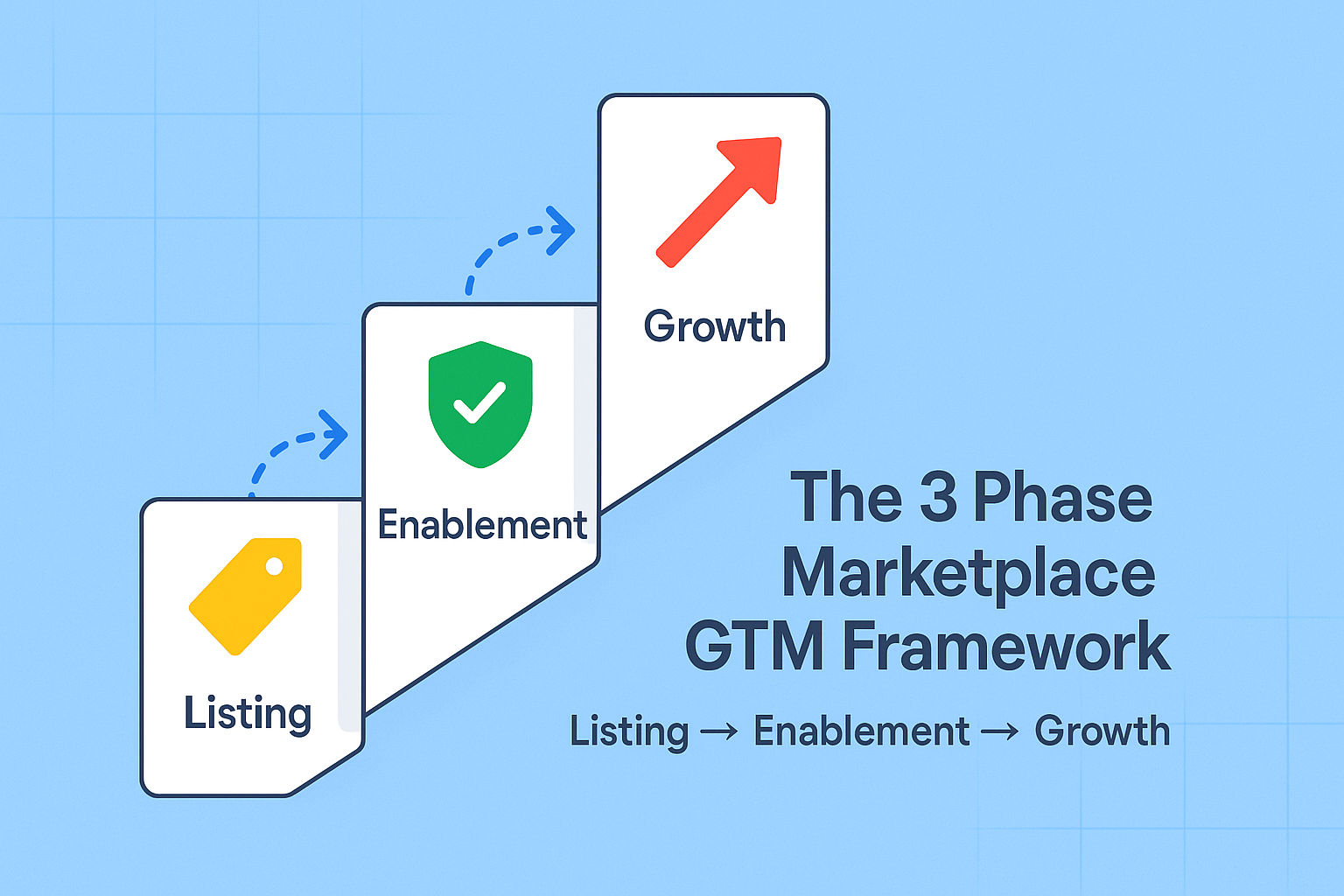

Security Design Patterns for GenAI Workloads

1. Private GenAI API Gateway

- Bedrock only accessible via Lambda in a VPC

- User input validated and logged before model call

- Guardrails and prompt templates enforced

- Outputs scanned before being displayed
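
The four steps in this pattern can be sketched as a single request handler. This is an illustrative outline, not a production gateway: the length limit, the deny-list patterns, the prompt template, and the `invoke_model` callable (which would wrap your Bedrock call inside the VPC-attached Lambda) are all hypothetical placeholders.

```python
import logging
import re
import uuid
from typing import Callable

logger = logging.getLogger("genai_gateway")

# Hypothetical limits and patterns; tune these for your own workload.
MAX_INPUT_CHARS = 4000
BLOCKED_INPUT = re.compile(r"ignore (all )?previous instructions", re.IGNORECASE)
BLOCKED_OUTPUT = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")  # SSN-like strings

PROMPT_TEMPLATE = (
    "You are a support assistant. Answer only from company policy.\n\n"
    "User question: {question}"
)
REJECTED = "Sorry, that request could not be processed."
WITHHELD = "The response was withheld by an output filter."


def handle_request(user_input: str, invoke_model: Callable[[str], str]) -> str:
    """Validate input, apply the template, call the model, then scan the output."""
    request_id = str(uuid.uuid4())
    text = user_input.strip()

    # 1. Validate and log before the model call (metadata only, not the raw prompt).
    if not text or len(text) > MAX_INPUT_CHARS or BLOCKED_INPUT.search(text):
        logger.warning("request=%s rejected at input validation", request_id)
        return REJECTED
    logger.info("request=%s accepted chars=%d", request_id, len(text))

    # 2. Enforce the prompt template so users never control the full prompt.
    prompt = PROMPT_TEMPLATE.format(question=text)

    # 3. Call the model through the injected function.
    output = invoke_model(prompt)

    # 4. Scan the output before it reaches the user.
    if BLOCKED_OUTPUT.search(output):
        logger.warning("request=%s withheld at output scan", request_id)
        return WITHHELD
    return output


# Usage with a stand-in model function.
print(handle_request("What is the refund window?", lambda p: "Refunds are accepted within 30 days."))
```

Injecting the model call as a function keeps the validation and scanning logic testable without network access.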

2. SaaS + GenAI

- Multi-tenancy handled via IAM roles per tenant

- Vector DB (e.g., OpenSearch) scoped with tenant ID

- Prompts tagged with request IDs for traceability
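
One way to scope vector search by tenant is to build the filter into every query on the server side, so a caller can never omit it. The sketch below constructs an OpenSearch k-NN query body; the field names `embedding` and `tenant_id` are assumptions about your index mapping, and filtering inside the `knn` clause assumes an index engine that supports it.

```python
import json
from typing import List


def tenant_scoped_knn_query(tenant_id: str, query_vector: List[float], k: int = 5) -> dict:
    """Build a k-NN query body that only matches documents for one tenant."""
    if not tenant_id:
        # Fail closed: never run an unscoped search.
        raise ValueError("tenant_id is required")
    return {
        "size": k,
        "query": {
            "knn": {
                "embedding": {  # hypothetical vector field name
                    "vector": query_vector,
                    "k": k,
                    # Restrict candidates to this tenant's documents.
                    "filter": {"term": {"tenant_id": tenant_id}},
                }
            }
        },
    }


body = tenant_scoped_knn_query("tenant-a", [0.12, 0.48, 0.33], k=3)
print(json.dumps(body, indent=2))
```

The tenant ID should come from the authenticated session or role, not from user-supplied request fields.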

3. Healthcare/Finance GenAI Assistant

- Run prompt sanitization layer (via Lambda or Step Functions)

- Add inline de-identification via Amazon Comprehend or Comprehend Medical, and use Macie to scan stored logs in S3

- Log all prompts/responses in encrypted S3 with object-level access policies
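
A prompt sanitization layer can start as a small redaction function that runs before the model call and before anything is written to logs. The patterns below are illustrative only and will miss many real-world formats; a managed detection service is the more robust option, and this sketch simply shows where such a step sits.

```python
import re

# Illustrative patterns only; real de-identification needs broader coverage.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace each matched identifier with a bracketed label."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


print(redact("Patient email jane@example.com, SSN 123-45-6789"))
# prints: Patient email [EMAIL], SSN [SSN]
```

Running the same function over model responses before logging keeps the encrypted S3 log free of the identifiers it covers.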

Red Flags to Avoid

- Logging raw prompts with customer names or contracts

- Using public APIs (like OpenAI) in production without a data processing agreement

- Letting unverified users invoke models directly

- Bypassing model output review for user-facing responses

- Hardcoding API keys or role ARNs in Lambda
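
As an alternative to hardcoding, a Lambda function can read its settings from the environment at startup and fail fast when something is missing. The variable names below are hypothetical; actual secrets such as API keys are better kept in a secrets store and fetched at runtime, with the function's execution role supplying AWS credentials.

```python
import os
from typing import Mapping


class ConfigError(RuntimeError):
    """Raised when required configuration is missing."""


# Hypothetical setting names for illustration.
REQUIRED_SETTINGS = ("MODEL_ID", "GUARDRAIL_ID", "LOG_BUCKET")


def load_settings(env: Mapping[str, str] = os.environ) -> dict:
    """Read required settings from the environment instead of source code."""
    missing = [name for name in REQUIRED_SETTINGS if not env.get(name)]
    if missing:
        raise ConfigError("Missing settings: " + ", ".join(missing))
    return {name.lower(): env[name] for name in REQUIRED_SETTINGS}


# Usage with an explicit mapping standing in for the Lambda environment.
settings = load_settings(
    {"MODEL_ID": "example-model", "GUARDRAIL_ID": "example-guardrail", "LOG_BUCKET": "example-bucket"}
)
print(settings["model_id"])
```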

Quick Checklist

- Use Bedrock so prompts and outputs stay inside your AWS environment and are not shared with model providers

- Encrypt everything: at rest (S3, OpenSearch) and in transit (TLS 1.2+)

- Use IAM condition keys to control access per environment

- Sanitize logs and outputs with regex or Comprehend

- Enable Guardrails + output filters on Bedrock

- Set up anomaly detection for usage spikes
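
For the in-transit item on this checklist, one common approach is a bucket policy that denies any request not made over TLS, plus a second statement that rejects older TLS versions. The sketch below builds such a policy for a prompt-log bucket; the bucket name is a placeholder.

```python
import json


def deny_insecure_transport_policy(bucket_name: str) -> dict:
    """Build an S3 bucket policy that rejects plain HTTP and TLS below 1.2."""
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    resources = [bucket_arn, f"{bucket_arn}/*"]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyPlainHttp",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": resources,
                # Matches requests that did not use TLS at all.
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            },
            {
                "Sid": "DenyOldTls",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": resources,
                # Matches requests negotiated with a TLS version below 1.2.
                "Condition": {"NumericLessThan": {"s3:TlsVersion": "1.2"}},
            },
        ],
    }


# "example-prompt-logs" is a placeholder bucket name.
bucket_policy = deny_insecure_transport_policy("example-prompt-logs")
print(json.dumps(bucket_policy, indent=2))
```

Explicit `Deny` statements win over any `Allow`, so the rule holds even if a broader permission is granted elsewhere.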

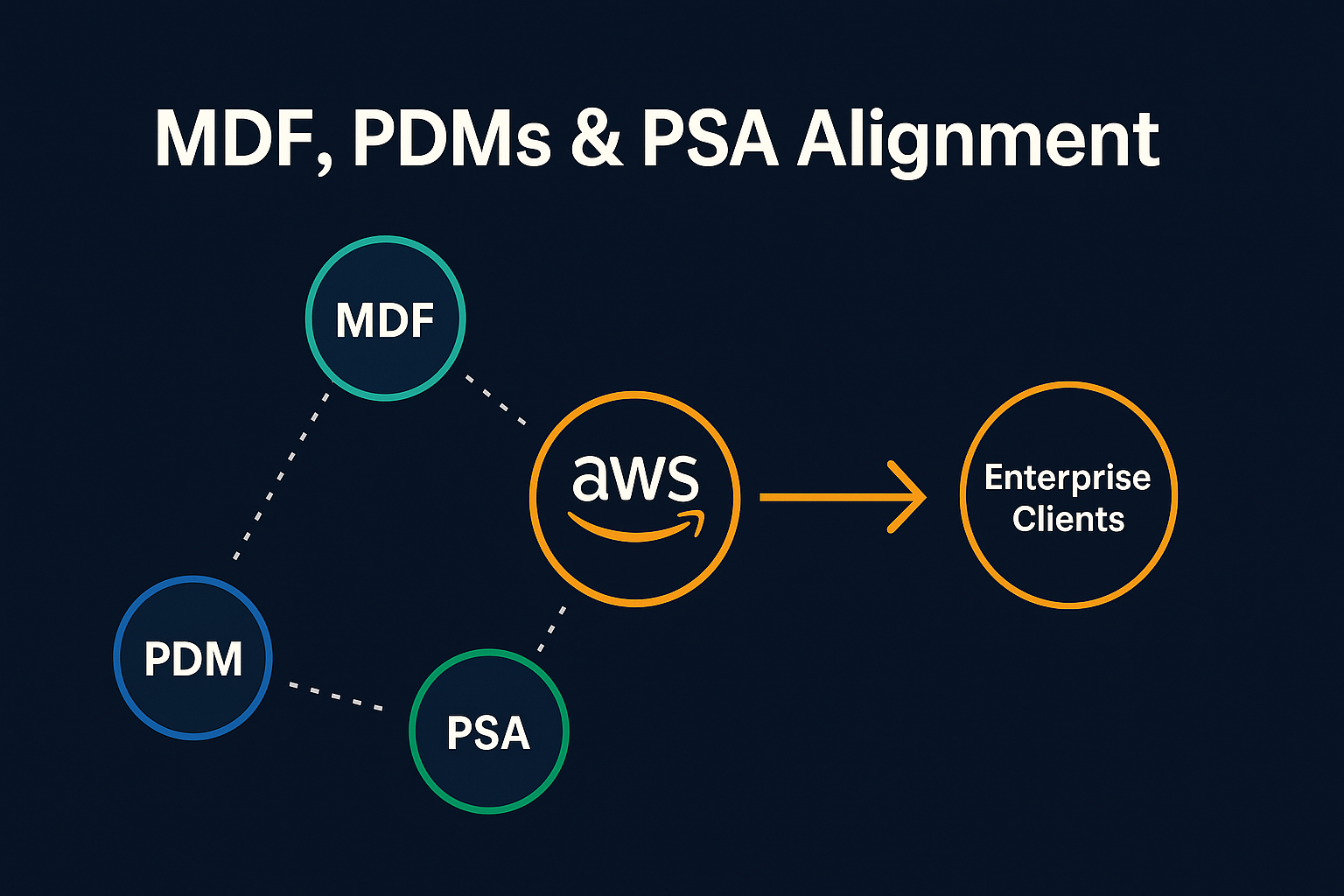

Bonus: Partner With AWS for Compliance Reviews

Work with your AWS Partner Manager or SA to:

- Get your architecture validated

- Align with the AWS Well-Architected Generative AI Lens

- Review the shared responsibility model for Bedrock, SageMaker, and Lex

Conclusion

Security isn’t just a checkbox in GenAI; it’s a core part of your product’s credibility.

And with tools like Bedrock Guardrails, IAM scoping, PrivateLink, and AWS-native encryption, you can build GenAI apps that are not only smart but secure, compliant, and enterprise-ready.