Introduction

So you’ve chosen your model, maybe Claude via Bedrock or Falcon on SageMaker.

Now the next question hits:

Should we fine-tune this model? Or can we just prompt it better?

Choosing between fine-tuning and prompt engineering isn’t just a technical decision; it’s a strategic one.

Let’s explore when each approach makes sense in the AWS ecosystem, how they differ, and how to avoid the wrong investment.

What’s the Difference?

| Approach | What It Means |
|---|---|
| Prompt Engineering | Crafting better instructions, examples, or templates to guide model behavior, without changing the model itself |
| Fine-Tuning | Training the model on domain-specific data to adjust its behavior; requires compute, data, and infrastructure |

When to Use Prompt Engineering

Use when you:

- Have limited data or no label set

- Want to guide model behavior without retraining

- Are using proprietary models such as Claude, Titan, or Jurassic-2, whose weights you can’t download or modify directly

- Need faster time-to-market

Tools to Use:

- Amazon Bedrock (supports Claude, Titan, etc.)

- LangChain or PromptLayer for chaining & tracking

- Prompt templates using f-strings or Jinja

- Bedrock Guardrails for controlling tone/safety
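To make the template idea concrete, here is a minimal sketch of an f-string prompt template. The function name, parameters, and wording are illustrative assumptions, not part of any AWS SDK; the point is that changing the audience or output format means editing a string, not retraining anything.

```python
def build_summary_prompt(text: str, audience: str = "CFO", bullet_count: int = 3) -> str:
    """Fill a reusable prompt template. Hypothetical helper for illustration."""
    return (
        f"Summarize the following text for a {audience} "
        f"in {bullet_count} bullet points.\n\n"
        f"Text:\n{text.strip()}"
    )


if __name__ == "__main__":
    # The same template serves different audiences without touching the model.
    print(build_summary_prompt("Q3 revenue rose while cloud costs grew faster."))
    print(build_summary_prompt("Q3 revenue rose while cloud costs grew faster.",
                               audience="engineering lead", bullet_count=5))
```

The resulting string can be sent to whichever model you have chosen; swapping Jinja in for f-strings would follow the same pattern.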

Examples:

- Rewriting emails in different tones

- Extracting structured info from user input

- Summarizing legal contracts into action points

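For most use cases, prompting is far cheaper, faster, and lower-risk than fine-tuning: there is no training run to pay for and no custom model version to maintain.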
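As one illustration of the structured-extraction example above, the sketch below builds a request for the Bedrock Converse API through boto3. The model ID, the field names ("name", "product", "issue"), and the helper names are assumptions for illustration; use a model ID that is enabled in your own account and Region. The actual call needs boto3, AWS credentials, and model access, so it is kept in a separate function.

```python
import json

# Assumption: example model ID; replace with one enabled in your account.
MODEL_ID = "anthropic.claude-3-haiku-20240307-v1:0"


def build_extraction_request(user_input: str, model_id: str = MODEL_ID) -> dict:
    """Build keyword arguments for bedrock-runtime's converse() call."""
    prompt = (
        "Extract the customer's name, product, and issue from the message below. "
        'Reply with JSON only, using the keys "name", "product", and "issue".\n\n'
        f"Message: {user_input}"
    )
    return {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        # Low temperature keeps the output format as stable as possible.
        "inferenceConfig": {"maxTokens": 300, "temperature": 0.0},
    }


def extract_fields(user_input: str) -> dict:
    """Send the request to Bedrock and parse the JSON reply."""
    import boto3  # imported lazily so the builder above works without AWS set up

    client = boto3.client("bedrock-runtime")
    response = client.converse(**build_extraction_request(user_input))
    reply = response["output"]["message"]["content"][0]["text"]
    return json.loads(reply)  # raises ValueError if the model strays from JSON
```

Keeping the request builder separate from the network call makes the prompt easy to review and unit test before any tokens are spent.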

When to Use Fine-Tuning

Use when you:

- Need your model to speak a domain-specific language

- Want the model to remember formats, terms, or workflows

- Are working with open models (Llama 2, Falcon, Flan-T5)

- Have labeled training data ready

Tools to Use:

- SageMaker Training Jobs or JumpStart

- Hugging Face Transformers + SageMaker SDK

- Amazon S3 for storing datasets, plus SageMaker Pipelines for orchestrating the training workflow

- Managed Spot Training for cost savings
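The tools above can be combined roughly as follows. This is a sketch under stated assumptions, not a reference setup: the training script, source directory, hyperparameter names, instance type, and framework versions are all placeholders that must match your own code and an available Hugging Face container. The one hard rule shown is that with Managed Spot Training, `max_wait` must be at least `max_run`.

```python
def spot_training_settings(max_run_seconds: int = 3600, wait_factor: float = 2.0) -> dict:
    """Managed Spot settings for a SageMaker estimator; max_wait must be >= max_run."""
    return {
        "use_spot_instances": True,
        "max_run": max_run_seconds,
        "max_wait": int(max_run_seconds * wait_factor),
    }


def launch_fine_tuning(role_arn: str, train_s3_uri: str, checkpoint_s3_uri: str):
    """Start a fine-tuning job. Requires the sagemaker SDK and AWS credentials."""
    from sagemaker.huggingface import HuggingFace  # imported lazily

    estimator = HuggingFace(
        entry_point="train.py",        # hypothetical training script
        source_dir="./scripts",        # hypothetical directory containing it
        role=role_arn,
        instance_type="ml.g5.2xlarge", # assumption; pick a GPU type you have quota for
        instance_count=1,
        transformers_version="4.28",   # assumption; must match an available container
        pytorch_version="2.0",
        py_version="py310",
        # Hypothetical names; they are passed to train.py as command-line arguments.
        hyperparameters={"model_name_or_path": "google/flan-t5-base", "epochs": 3},
        checkpoint_s3_uri=checkpoint_s3_uri,  # lets interrupted Spot jobs resume
        **spot_training_settings(),
    )
    estimator.fit({"train": train_s3_uri})
    return estimator
```

Spot capacity can be reclaimed mid-job, so the training script should save checkpoints regularly for the resume to be useful.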

Examples:

- Medical chatbot trained on clinical terminology

- Legal doc generator that mirrors your format

- Support agents fine-tuned on ticket history

Caution:

- Fine-tuning requires GPU compute, evaluation, and versioning

- It can also introduce overfitting or unexpected behaviors if not QA’d properly

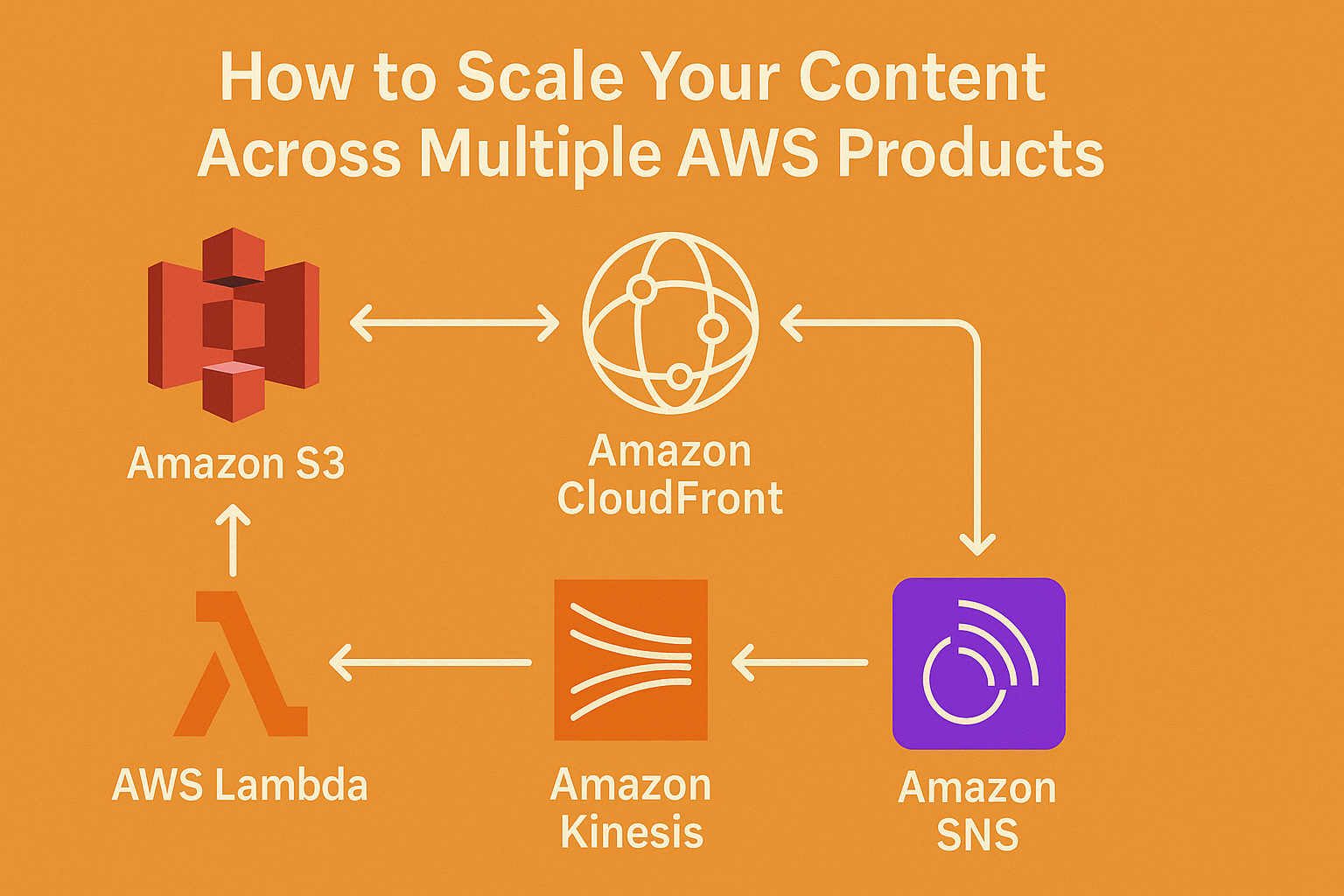

AWS Tip: Use Prompt Engineering First, Then Fine-Tune If Needed

A hybrid path:

- Start with prompt templates using Bedrock

- Log responses + user feedback

- Use that as training data to fine-tune a model later in SageMaker

- Switch to private hosting once cost or accuracy demands it
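The middle steps of this path, turning logged responses and feedback into training data, might look like the sketch below. The log fields ("prompt", "response", "rating"), the rating threshold, and the prompt/completion output shape are assumptions; adapt them to whatever your logging and your training script actually use.

```python
import json


def to_training_records(logs, min_rating=4):
    """Keep only highly rated interactions and reshape them as prompt/completion pairs."""
    records = []
    for entry in logs:
        if entry.get("rating", 0) >= min_rating and entry.get("response"):
            records.append({"prompt": entry["prompt"], "completion": entry["response"]})
    return records


def write_jsonl(records, path):
    """Write one JSON object per line, ready to upload to S3."""
    with open(path, "w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record, ensure_ascii=False) + "\n")
    return len(records)


if __name__ == "__main__":
    sample_logs = [
        {"prompt": "Summarize ticket 1", "response": "Customer cannot log in.", "rating": 5},
        {"prompt": "Summarize ticket 2", "response": "Unclear answer.", "rating": 2},
    ]
    kept = to_training_records(sample_logs)
    print(write_jsonl(kept, "train.jsonl"), "records written")
```

Filtering on user feedback matters here: fine-tuning on every logged response would also teach the model its own mistakes.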

Common Mistakes

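- Assuming you can fine-tune any model you like: proprietary models such as Claude and Titan don’t expose their weights, and Bedrock offers managed customization only for selected models, so check current support before planning a training run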

- Fine-tuning when prompt chaining could’ve done the job

- Prompting too vaguely (“Summarize this” vs “Summarize this for a CFO in 3 bullet points”)
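Prompt chaining, mentioned above as an alternative to fine-tuning, can be sketched in a few lines. Here `call_model` is a hypothetical callable standing in for whatever client you use (for example, a thin wrapper around a Bedrock call); the two-step split and the prompt wording are illustrative assumptions.

```python
from typing import Callable


def run_chain(document: str, call_model: Callable[[str], str]) -> str:
    """Two-step chain: extract facts first, then summarize only those facts."""
    facts = call_model(
        f"List the key facts in this document, one per line:\n\n{document}"
    )
    # The second prompt is specific about audience and format, not just "Summarize this".
    return call_model(
        "Using only the facts below, write a summary for a CFO in 3 bullet points.\n\n"
        f"Facts:\n{facts}"
    )


if __name__ == "__main__":
    # Stand-in model so the sketch runs without any AWS setup.
    def echo_model(prompt: str) -> str:
        return f"[model reply to {len(prompt)} characters of prompt]"

    print(run_chain("Revenue grew. Costs grew faster.", echo_model))
```

Each step gets a narrow, well-specified task, which is often enough to reach the quality people expect to need fine-tuning for.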

Conclusion

Think of prompting as steering the model.

Think of fine-tuning as rewiring the model.

In AWS, both are supported, but the right choice comes down to:

- Time

- Budget

- Use case complexity

- Access to training data

Start lean. Prompt first. Fine-tune when the ROI is clear.