Introduction

LLMs aren’t just for chatbots anymore.

From intelligent agents to contract review to personalized summaries, custom LLM applications are reshaping workflows across every industry.

But building one on AWS doesn’t have to be overwhelming.

In this post, we’ll walk you through a step-by-step architecture to launch your first custom LLM app using AWS-native tools.

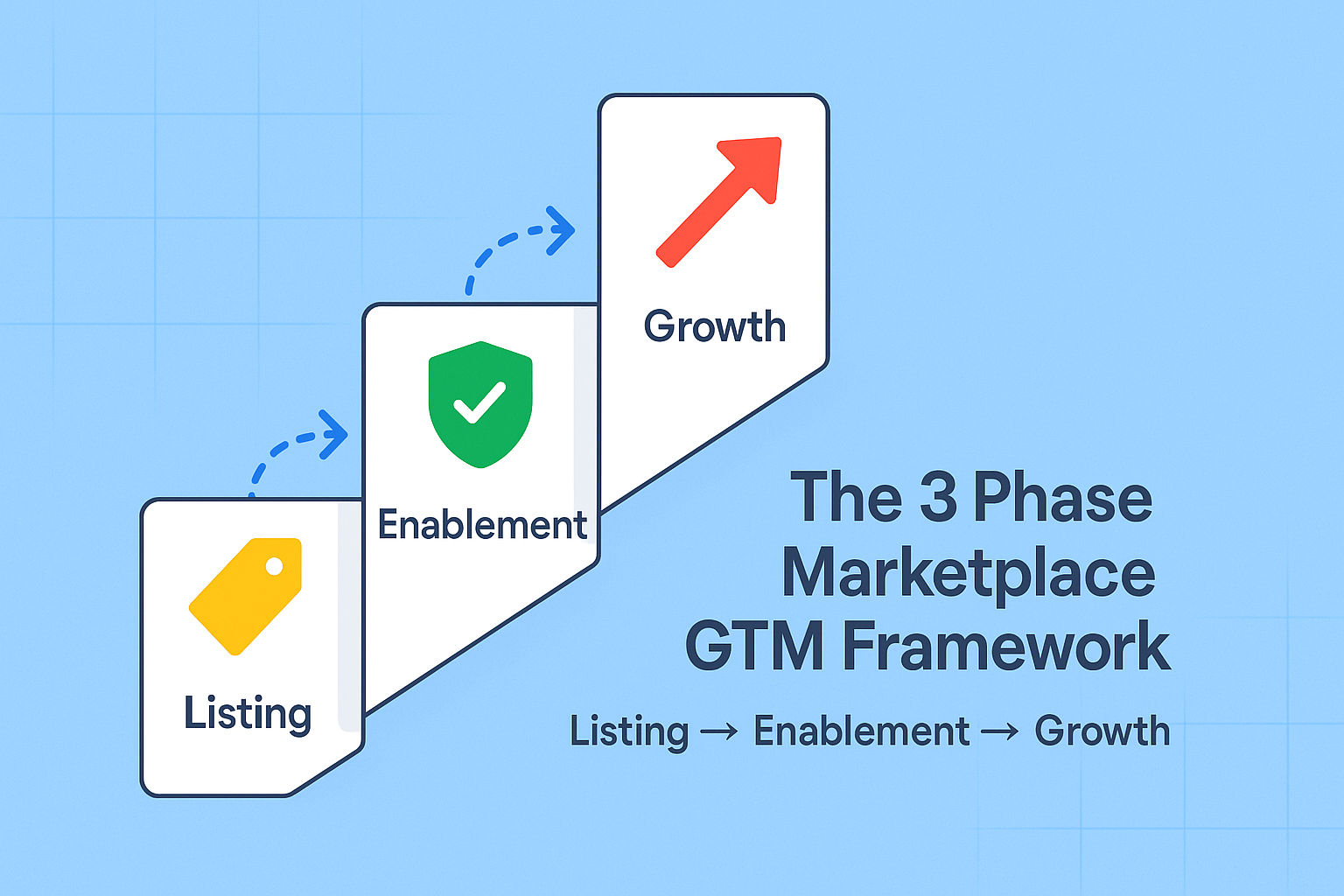

Step 1: Define the Use Case (Business-First, Always)

Before you choose models or services, answer:

What question will the user ask?

What data will the model rely on?

How do you want the output formatted?

Does it require external knowledge (RAG), private data, or fine-tuning?

Example Use Case:

“Summarize uploaded PDFs for legal teams and answer follow-up questions.”

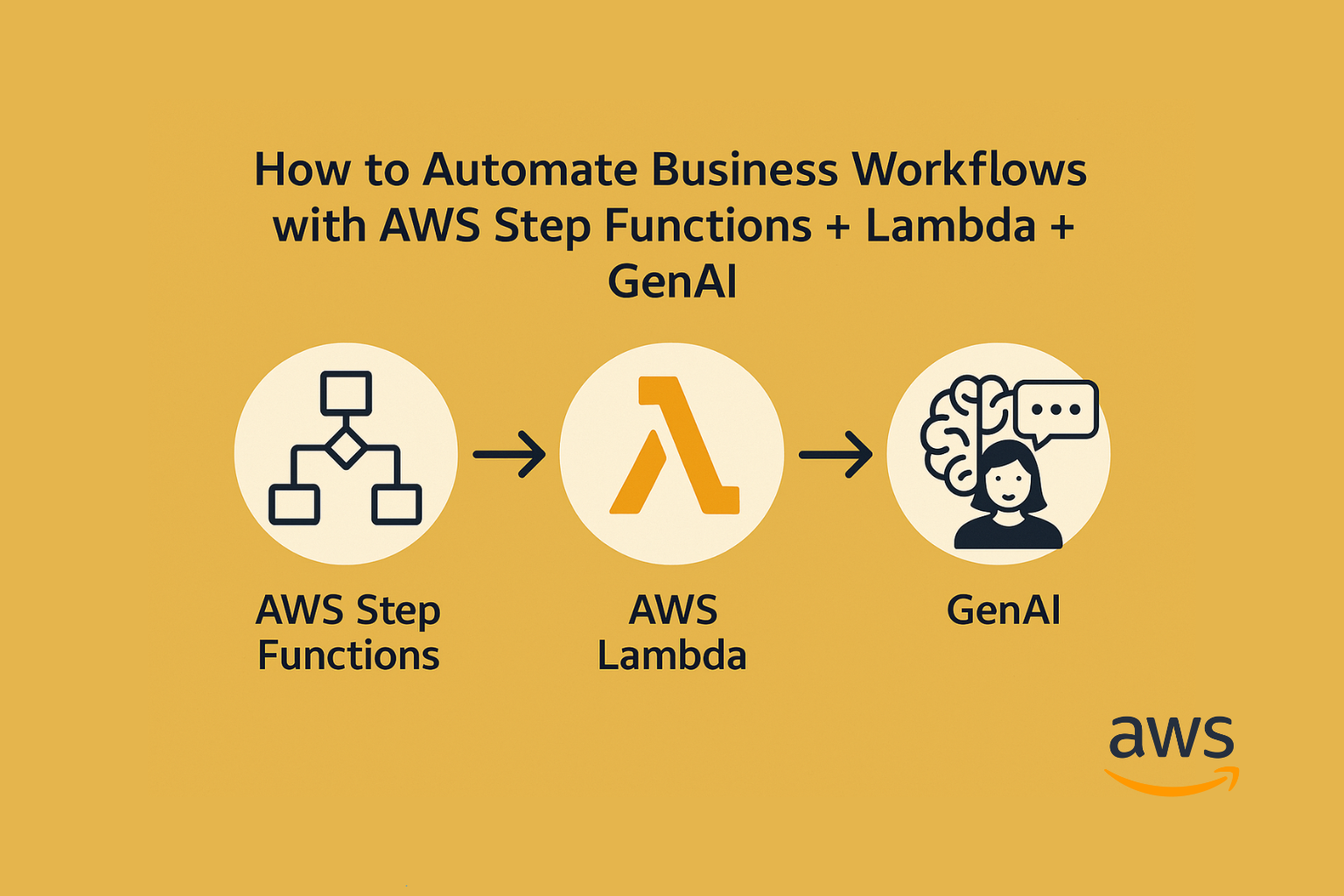

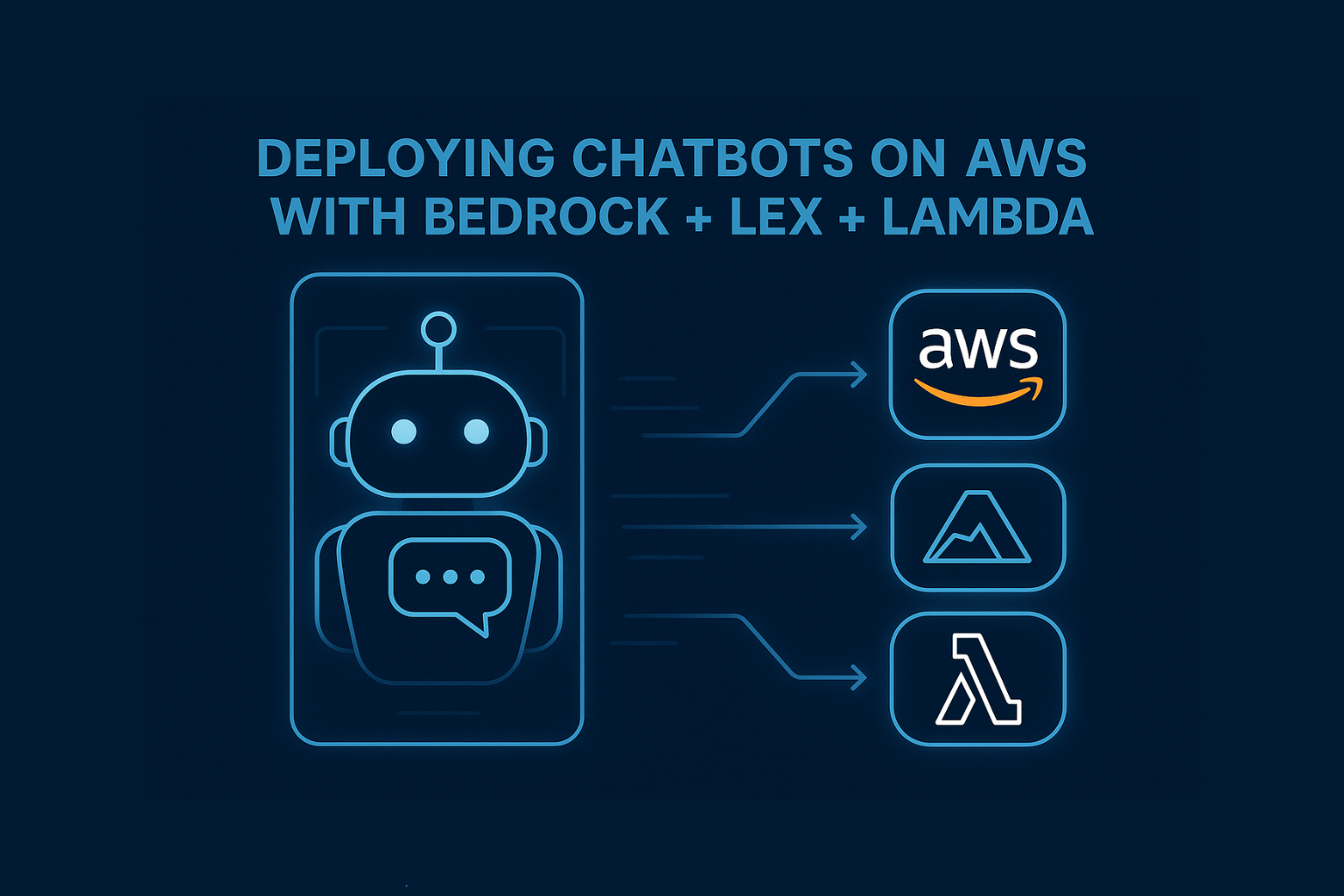

Step 2: Choose the Right AWS GenAI Stack

| Function | Tool |
|---|---|
| Foundation Model | Amazon Bedrock (Claude, Titan) or SageMaker (open-source LLMs) |
| Prompt Orchestration | LangChain + Bedrock SDK |
| Embeddings | Titan Embeddings or SageMaker JumpStart |
| Vector Store | Amazon OpenSearch + KNN Plugin or RDS + pgvector |
| App Frontend/API | Lambda + API Gateway + S3 (or AppSync if GraphQL) |
| Security Layer | IAM, PrivateLink, CloudTrail, KMS |

Start with Bedrock if you want fast iteration. Use SageMaker if you need full control.
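
To give a feel for how little code the Bedrock route needs, here is a minimal sketch of a single Claude call. It assumes the Anthropic Messages request format on Bedrock and a `bedrock-runtime` client that you create yourself and pass in; the model ID and the helper names `build_claude_body` and `ask_claude` are illustrative choices, not something this post prescribes.

```python
import json

# Assumed model ID; check which models are enabled in your account and region.
MODEL_ID = "anthropic.claude-3-haiku-20240307-v1:0"


def build_claude_body(prompt: str, max_tokens: int = 512) -> str:
    """Serialize a single-turn request in the Anthropic Messages format."""
    return json.dumps({
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    })


def ask_claude(client, prompt: str, model_id: str = MODEL_ID) -> str:
    """Call invoke_model on a bedrock-runtime client and return the reply text."""
    response = client.invoke_model(
        modelId=model_id,
        body=build_claude_body(prompt),
        contentType="application/json",
        accept="application/json",
    )
    # The response body is a stream containing a JSON document.
    payload = json.loads(response["body"].read())
    # Claude returns a list of content blocks; keep only the text ones.
    return "".join(
        block["text"] for block in payload["content"] if block.get("type") == "text"
    )
```

In a real app you would create the client with `boto3.client("bedrock-runtime", region_name="us-east-1")` and call `ask_claude(client, "Summarize this clause: ...")`. Passing the client in, rather than creating it inside the function, keeps the function easy to test with a stub.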

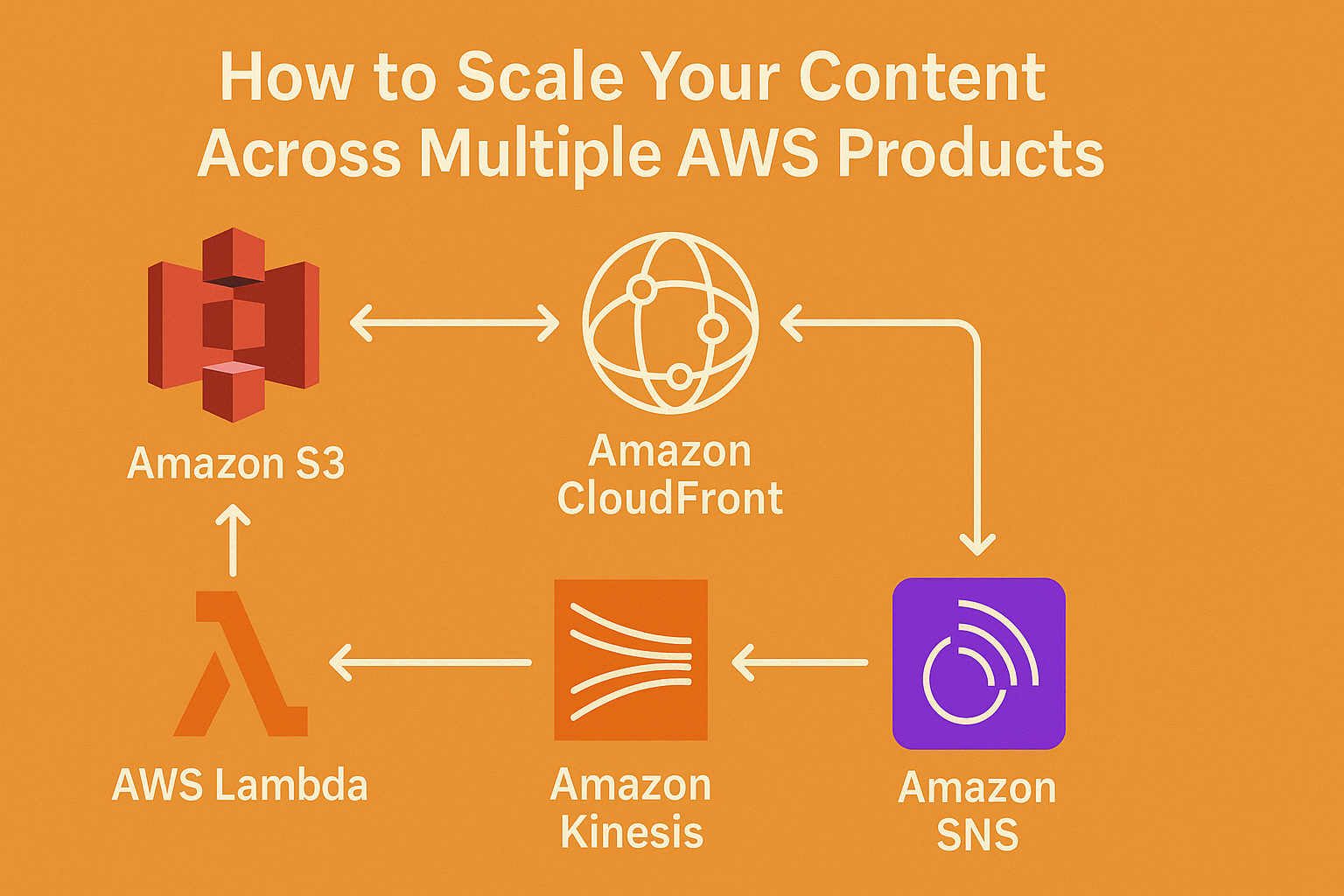

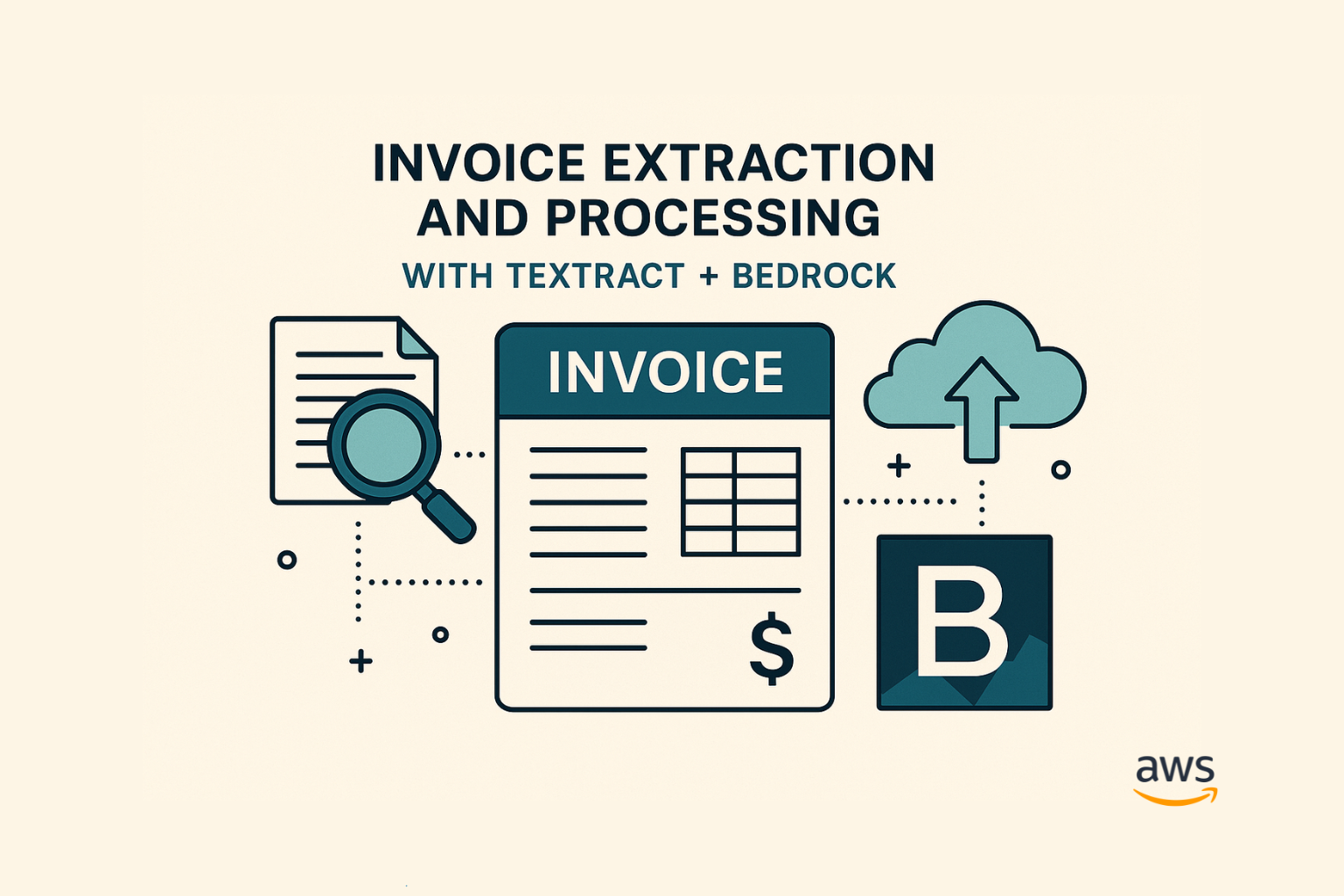

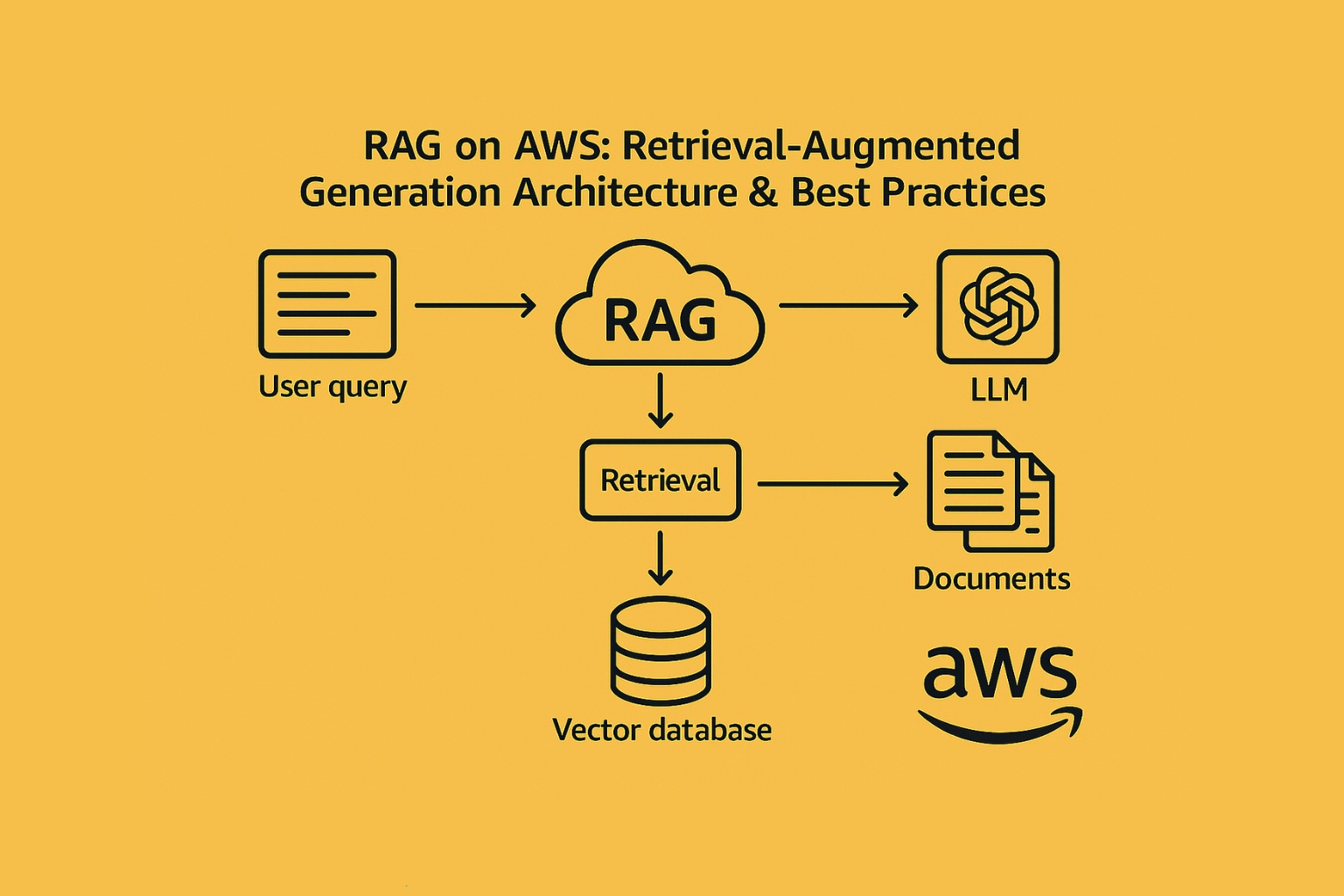

Step 3: Implement the Core Workflow (RAG Pattern)

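Retrieval-Augmented Generation (RAG) retrieves the most relevant passages from your own data at query time and adds them to the prompt, so the model can answer from content it was never trained on. Think of it as a memory boost.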

Architecture Flow:

- Upload a PDF or document to S3

- Extract text via Textract or use an external parser

- Split the text into chunks and generate a vector embedding for each (Titan or an OpenAI-compatible model)

- Store the chunks and their vectors in an OpenSearch index

- User enters query → system finds most relevant chunks

- Prompt is constructed using retrieved chunks → sent to FM (via Bedrock)

- Response is returned to the user via Lambda + API
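
The retrieval and prompt-construction steps in the flow above can be sketched in plain Python. This is an illustration under loud assumptions: the in-memory cosine-similarity search stands in for an OpenSearch k-NN query, `embed` is a hypothetical callable you would back with an embeddings model, and the chunk sizes and prompt wording are placeholders to tune.

```python
import math
from dataclasses import dataclass
from typing import Callable


@dataclass
class Chunk:
    text: str
    vector: list[float]


def chunk_text(text: str, size: int = 500, overlap: int = 50) -> list[str]:
    """Split text into overlapping character windows."""
    if overlap >= size:
        raise ValueError("overlap must be smaller than size")
    if not text:
        return []
    step = size - overlap
    # Stop early enough that the last window is not a redundant tail.
    return [text[i:i + size] for i in range(0, max(len(text) - overlap, 1), step)]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vector: list[float], index: list[Chunk], k: int = 3) -> list[Chunk]:
    """In-memory stand-in for a vector store's nearest-neighbor search."""
    ranked = sorted(index, key=lambda c: cosine(query_vector, c.vector), reverse=True)
    return ranked[:k]


def build_prompt(question: str, chunks: list[Chunk]) -> str:
    """Assemble the retrieved chunks and the user question into one prompt."""
    context = "\n\n".join(f"[{i + 1}] {c.text}" for i, c in enumerate(chunks))
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


def retrieve_and_build(
    question: str,
    index: list[Chunk],
    embed: Callable[[str], list[float]],
    k: int = 3,
) -> str:
    """Embed the question, fetch the k closest chunks, and build the prompt."""
    return build_prompt(question, top_k(embed(question), index, k))
```

The string returned by `retrieve_and_build` is what you would send to the foundation model. Swapping `top_k` for a real vector-store query should not require changes to the other functions.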

Tools That Speed You Up

- LangChain with its Amazon Bedrock integration

- Amazon Bedrock Agents (for managed orchestration)

- Prompt templates via Jinja/JSON for structured chaining

- Streamlit or Next.js for lightweight UI layer

- Scoped IAM roles for model invocation and OpenSearch access
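
As one illustration of the scoped-role idea, the sketch below builds an IAM policy document that allows invoking a single Bedrock model and making HTTP GET/POST requests against a single OpenSearch domain. It assumes a managed OpenSearch Service domain (OpenSearch Serverless uses different actions), and the function name and its arguments are hypothetical; treat the result as a starting point to review, not a finished policy.

```python
import json


def scoped_policy(region: str, account_id: str, model_id: str, domain_name: str) -> dict:
    """Build a least-privilege policy for one model and one search domain."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "InvokeOneModel",
                "Effect": "Allow",
                "Action": "bedrock:InvokeModel",
                # Foundation-model ARNs have an empty account field.
                "Resource": f"arn:aws:bedrock:{region}::foundation-model/{model_id}",
            },
            {
                "Sid": "QueryOneDomain",
                "Effect": "Allow",
                "Action": ["es:ESHttpGet", "es:ESHttpPost"],
                "Resource": f"arn:aws:es:{region}:{account_id}:domain/{domain_name}/*",
            },
        ],
    }


if __name__ == "__main__":
    # Placeholder values; substitute your own region, account, model, and domain.
    policy = scoped_policy(
        "us-east-1", "123456789012",
        "anthropic.claude-3-haiku-20240307-v1:0", "legal-docs",
    )
    print(json.dumps(policy, indent=2))
```

You could attach the printed JSON to the Lambda execution role, so the function can reach only the one model and the one index it needs.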

Dev Tips

- Keep a prompt cache for debugging + optimization

- Log both inputs and model responses (with PII masked)

- Use CloudWatch or X-Ray for tracing full flow

- Add basic retry logic to FM API calls, because throttling errors can spike under load
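
Two of these tips are easy to sketch: retrying throttled calls and masking PII before logging. The code below is a minimal illustration, not a production implementation. `ThrottledError` is a hypothetical stand-in for whatever throttling exception your client raises, and the two regexes catch only simple email and US SSN patterns.

```python
import random
import re
import time


class ThrottledError(Exception):
    """Hypothetical stand-in for a rate-limit error from the model API."""


def with_retries(call, retryable=(ThrottledError,), max_attempts=4,
                 base_delay=0.5, sleep=time.sleep):
    """Run call(), retrying retryable errors with exponential backoff and jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return call()
        except retryable:
            if attempt == max_attempts:
                raise  # Out of attempts: surface the error to the caller.
            # Full jitter: wait a random time up to base_delay * 2^(attempt - 1).
            sleep(random.uniform(0, base_delay * 2 ** (attempt - 1)))


# Deliberately simple patterns; real PII detection needs more than two regexes.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def mask_pii(text: str) -> str:
    """Replace email addresses and SSN-like numbers before the text is logged."""
    return SSN.sub("[SSN]", EMAIL.sub("[EMAIL]", text))
```

A call site might look like `with_retries(lambda: invoke(prompt))`, where `invoke` is your own function, followed by logging `mask_pii(prompt)` and the masked response. If you use boto3, it may be simpler to rely on its built-in retry configuration (`botocore.config.Config(retries={"max_attempts": 5, "mode": "adaptive"})`) and keep a wrapper like this only for errors it does not cover.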

Example Output

User prompt:

“Summarize Clause 7 from the NDA uploaded last week.”

Response from Claude via Bedrock:

“Clause 7 outlines liability limitations, capping damages at $50,000 except in cases of gross negligence or breach of confidentiality.”

Conclusion

You don’t need a PhD or a dozen engineers to build your first GenAI app.

With Bedrock and OpenSearch on AWS, plus an open-source framework like LangChain, you can go from idea to MVP in under a week.

The key?

Don’t start with the model.

Start with the use case. Then build only what supports it.