Introduction

Generative AI isn’t hype anymore; it’s a practical, transformative layer across industries. AWS has rapidly evolved to offer a full-stack suite for teams looking to build, deploy, and scale GenAI applications.

If you’re exploring AWS for GenAI in 2025, this guide is your starting point.

What is the AWS GenAI Stack?

The AWS GenAI Stack is a layered ecosystem designed to support:

- Foundation model access (via Bedrock, SageMaker)

- Model fine-tuning & prompt orchestration

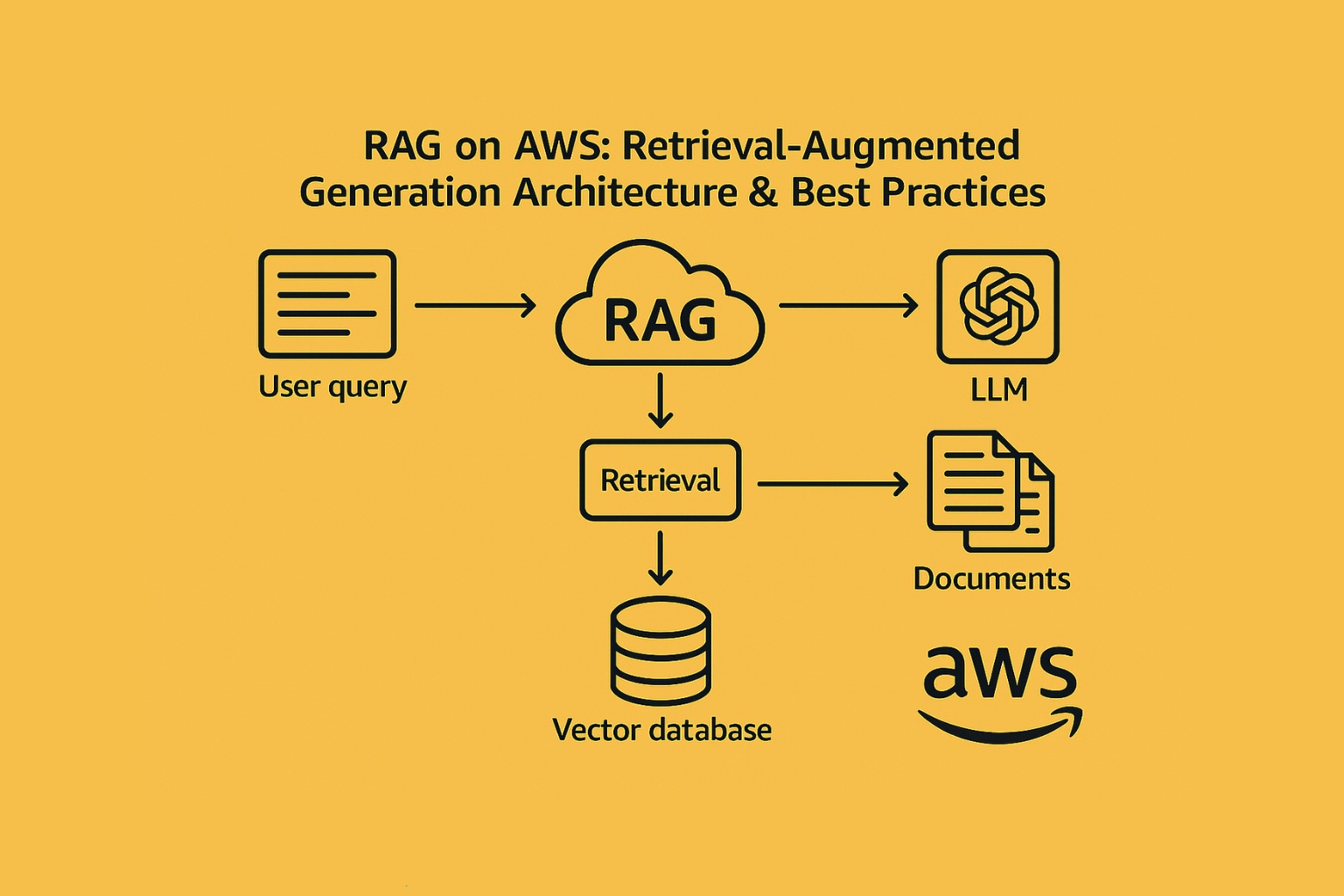

- Vector stores, embeddings, and RAG-based architectures

- Real-time deployment and monitoring

- Enterprise-grade security, scale, and compliance

- It’s modular by design, so you can plug in where you are, and scale as needed.

Core Components of the Stack

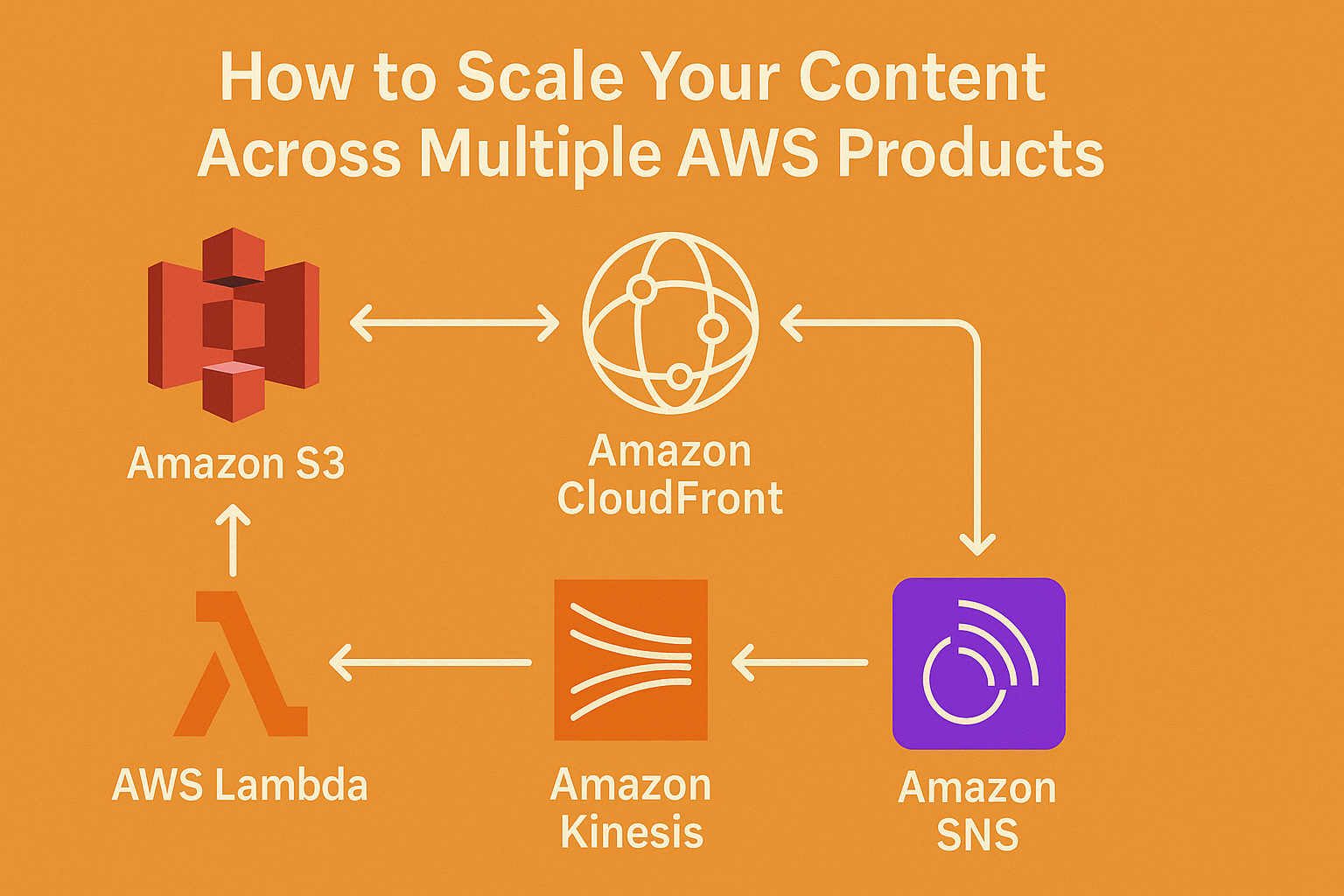

| Layer | AWS Services |

|---|---|

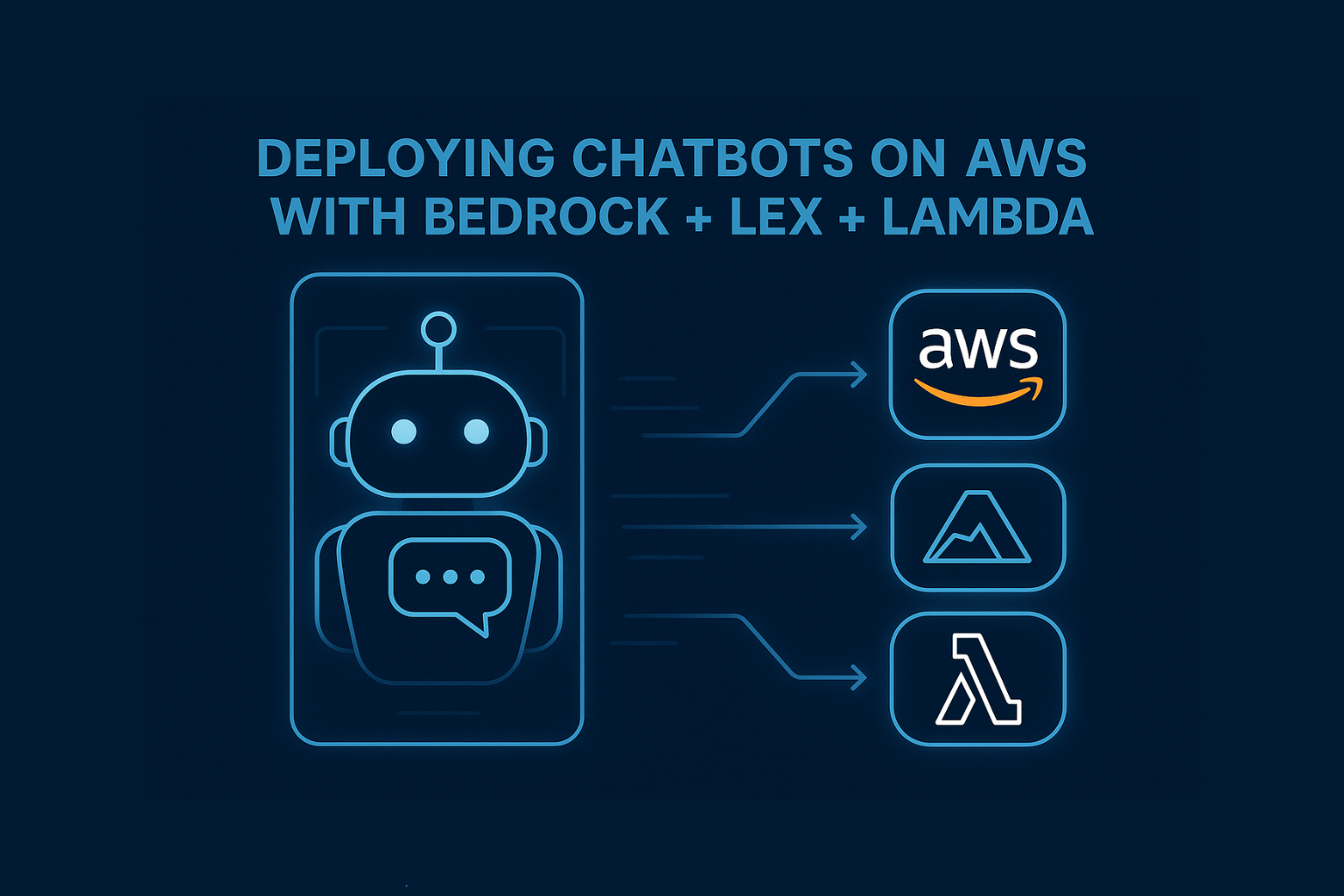

| Model Access | Amazon Bedrock (Titan, Claude, Jurassic-2), SageMaker JumpStart |

| Prompt Engineering / Orchestration | LangChain with Bedrock SDK, SageMaker Pipelines |

| RAG / Knowledge Integration | Amazon Kendra, OpenSearch + Bedrock |

| Embeddings & Vector Stores | Titan Embeddings, OpenSearch, RDS pgvector |

| Security + Governance | IAM, VPC, PrivateLink, Bedrock Guardrails, CloudWatch |

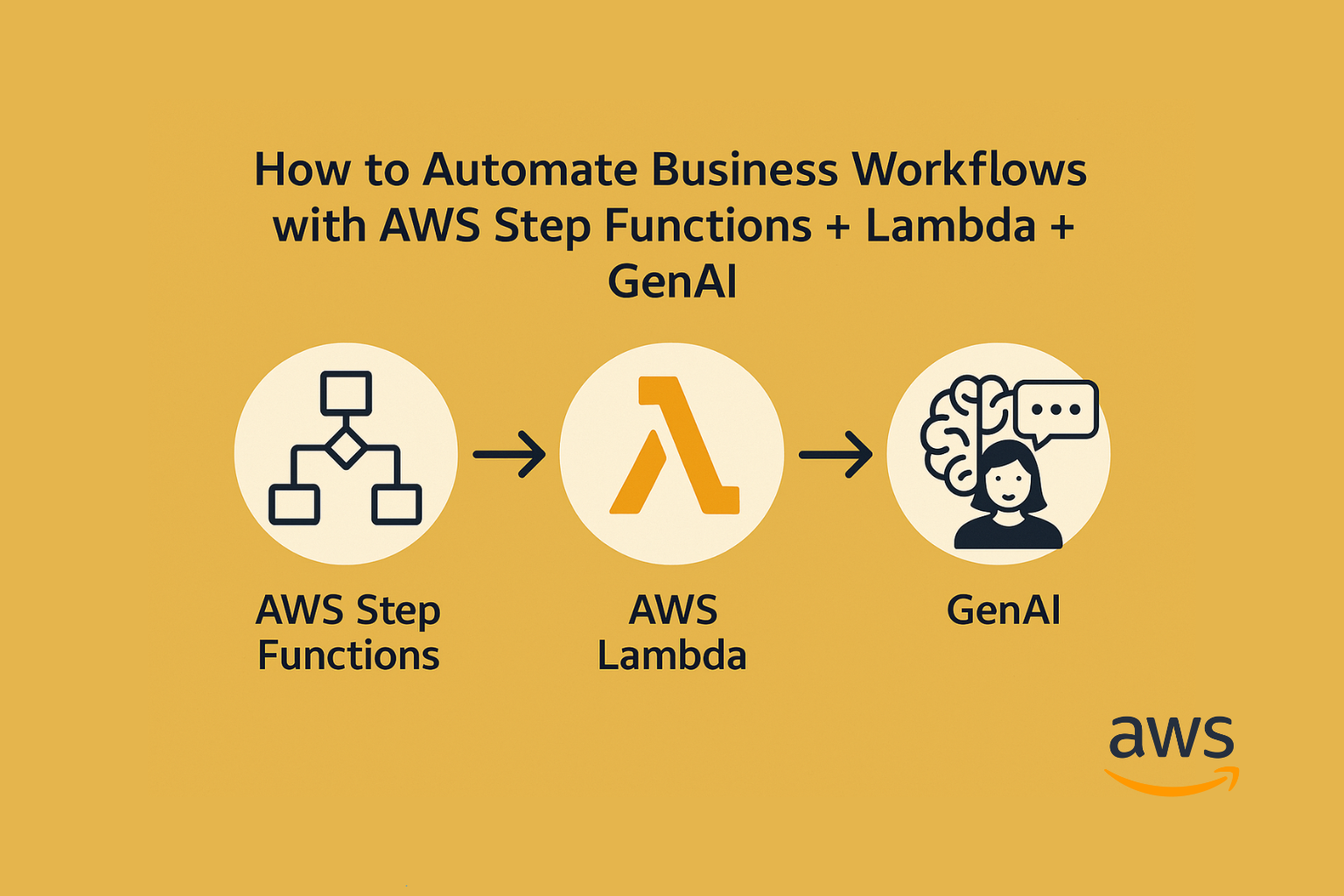

| Application Integration | Lambda, Step Functions, API Gateway, AppSync, Lex, S3 |
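For the Model Access layer, here is a minimal sketch of a single Bedrock call in Python using the Converse API from boto3's `bedrock-runtime` client. The helper name `ask_model` and the default inference settings are illustrative assumptions. The client is passed in rather than created inside the function, so the helper can be tested without AWS credentials.

```python
from typing import Any, Protocol


class ConverseClient(Protocol):
    """Anything exposing a Bedrock Runtime-style converse() method, e.g. a boto3 client."""

    def converse(self, **kwargs: Any) -> dict: ...


def ask_model(client: ConverseClient, model_id: str, question: str,
              max_tokens: int = 512, temperature: float = 0.2) -> str:
    """Send one user turn through the Converse API and return the reply text (hypothetical helper)."""
    response = client.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": question}]}],
        inferenceConfig={"maxTokens": max_tokens, "temperature": temperature},
    )
    # The assistant reply is a list of content blocks; concatenate the text ones.
    blocks = response["output"]["message"]["content"]
    return "".join(block.get("text", "") for block in blocks)
```

With real credentials, you would pass `boto3.client("bedrock-runtime", region_name="us-east-1")` together with a model ID your account has been granted access to, such as `anthropic.claude-3-haiku-20240307-v1:0`. Because Converse uses one request shape across providers, swapping Titan for Claude is mostly a change of `model_id`.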

Tip: You don’t need to use every service. Start with the business goal, then map it to the layers and services that goal actually requires.

What to Consider Before You Build

- Use Case Clarity: Internal automation? Customer-facing? Retrieval-based?

- Latency Needs: Do you need sub-second responses or batch summaries?

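- Data Privacy & Region Locking: Keep Bedrock traffic off the public internet with VPC endpoints (AWS PrivateLink), and call the Region-specific endpoint that matches your data-residency requirements.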

- Customization Strategy: Prompt templates vs. fine-tuning; know when to choose which.
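Prompt templates are usually the cheaper first step, because you change model behavior by editing text rather than by training. Below is a minimal sketch using Python's standard `string.Template`. The template wording, the placeholder names, and the `render_prompt` helper are hypothetical examples for an HR-policy assistant.

```python
from string import Template

# Hypothetical template for an HR-policy assistant; adapt the wording to your domain.
POLICY_QA_TEMPLATE = Template(
    "You are an assistant for the $team team.\n"
    "Answer using only the policy excerpt below. If the excerpt does not "
    "contain the answer, say you don't know.\n\n"
    "<excerpt>\n$excerpt\n</excerpt>\n\n"
    "Question: $question"
)


def render_prompt(team: str, excerpt: str, question: str) -> str:
    """Fill the template; substitute() raises KeyError if a placeholder is left unfilled."""
    return POLICY_QA_TEMPLATE.substitute(
        team=team, excerpt=excerpt.strip(), question=question.strip()
    )
```

A reasonable rule of thumb: if a well-structured template plus retrieved context still consistently misses the required tone, format, or domain vocabulary, that is the signal to evaluate fine-tuning.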

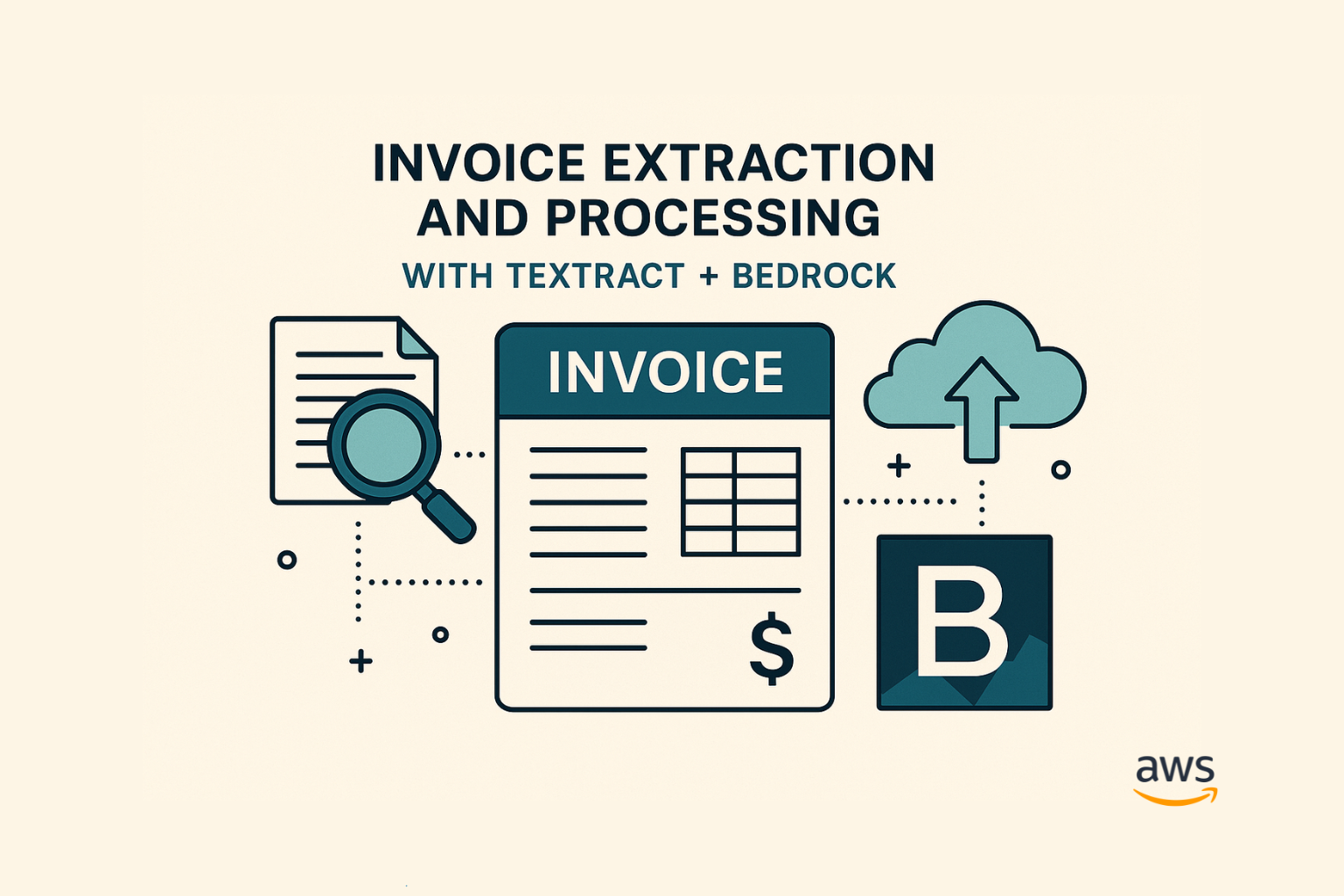

Example Starter Architecture (RAG App)

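An internal chatbot that summarizes policy PDFs for HR teams works like this:

- HR uploads policy PDFs to S3

- Amazon Textract extracts the text from them

- Titan Embeddings converts each text chunk into a vector

- The vectors are stored in an OpenSearch index

- At query time, the most relevant chunks are retrieved from OpenSearch and passed to Claude on Bedrock, which streams the answer back

- Estimated total infrastructure cost: under $150/month for about 10K queries (the real figure depends on model choice, document volume, and how OpenSearch is provisioned)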
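The retrieval half of this pipeline can be sketched in a few functions. The sketch makes three assumptions. First, the text has already been extracted by Textract. Second, embeddings come from Titan Text Embeddings V2 (`amazon.titan-embed-text-v2:0`) through `invoke_model`. Third, a plain in-memory list with cosine similarity stands in for the OpenSearch k-NN index. The function names, the chunk sizes, and the prompt wording are all illustrative.

```python
import json
import math
from typing import Any, List, Tuple

TITAN_EMBED_MODEL = "amazon.titan-embed-text-v2:0"


def chunk_text(text: str, size: int = 800, overlap: int = 100) -> List[str]:
    """Split extracted text into overlapping character windows."""
    if overlap >= size:
        raise ValueError("overlap must be smaller than size")
    chunks: List[str] = []
    start = 0
    while start < len(text):
        end = start + size
        chunks.append(text[start:end])
        if end >= len(text):
            break
        start = end - overlap  # step back so neighbouring chunks share context
    return chunks


def embed(client: Any, text: str, dimensions: int = 512) -> List[float]:
    """Embed text with Titan Text Embeddings V2 via a bedrock-runtime client."""
    response = client.invoke_model(
        modelId=TITAN_EMBED_MODEL,
        body=json.dumps({"inputText": text, "dimensions": dimensions, "normalize": True}),
        contentType="application/json",
        accept="application/json",
    )
    return json.loads(response["body"].read())["embedding"]


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec: List[float], index: List[Tuple[str, List[float]]], k: int = 3) -> List[str]:
    """Return the k chunks most similar to the query (stand-in for an OpenSearch k-NN query)."""
    ranked = sorted(index, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


def build_rag_prompt(question: str, passages: List[str]) -> str:
    """Ground the model in numbered passages so answers can cite their source."""
    context = "\n\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return (
        "Answer the HR policy question using only the numbered passages below.\n\n"
        f"{context}\n\nQuestion: {question}"
    )
```

The resulting prompt would then go to Claude through the Bedrock Runtime `converse` or `converse_stream` operation. In production, the in-memory `index` would be replaced by an OpenSearch vector index so that similarity search scales beyond a handful of documents.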

AWS Tools to Explore

- Amazon Bedrock Studio (zero-code prototyping)

- LangChain + Bedrock SDK integration

- Titan vs. Claude: compare both models on your own prompts before committing to one

- IAM for GenAI applications
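For IAM, a common starting point is a least-privilege policy that lets an application invoke only approved foundation models in one Region. The sketch below builds such a policy document in Python. The helper name and the example model IDs are assumptions. Foundation-model ARNs have an empty account field, and the Converse and ConverseStream operations are authorized by the same `bedrock:InvokeModel` and `bedrock:InvokeModelWithResponseStream` actions. Cross-Region inference profiles use their own ARNs and are not covered here.

```python
import json
from typing import Dict, List


def bedrock_invoke_policy(region: str, model_ids: List[str]) -> Dict:
    """Hypothetical helper: allow invoking (and streaming from) only the listed models in one Region."""
    resources = [f"arn:aws:bedrock:{region}::foundation-model/{model_id}" for model_id in model_ids]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "InvokeApprovedModelsOnly",
                "Effect": "Allow",
                "Action": ["bedrock:InvokeModel", "bedrock:InvokeModelWithResponseStream"],
                "Resource": resources,
            }
        ],
    }


# Example: render the policy JSON for the RAG chatbot's two models.
print(json.dumps(
    bedrock_invoke_policy("us-east-1", [
        "anthropic.claude-3-haiku-20240307-v1:0",
        "amazon.titan-embed-text-v2:0",
    ]),
    indent=2,
))
```

Scoping `Resource` to explicit model ARNs, rather than `"*"`, also enforces the Region locking discussed earlier: calls to other Regions or unapproved models are denied by default.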

Conclusion

Getting started with AWS GenAI isn’t just about choosing a model. It’s about architecting trust, scale, and speed, without building from scratch.

Whether you’re automating internal ops or building the next ChatGPT-for-X, AWS gives you a modular path to go from idea → prototype → enterprise-grade application.