Introduction

For financial services companies, manual review is one of the biggest operational burdens, especially in areas like KYC (Know Your Customer), fraud detection, and compliance reporting.

In this case study, we explore how a mid-sized fintech firm used AWS’s GenAI stack to automate document review, reduce turnaround time, and improve accuracy.

Company Snapshot

- Industry: Fintech

- Size: 120 employees

- Use Case: Automate risk review of customer onboarding documents

- Region: US + APAC

- Pre-GenAI Problem: 45-minute average turnaround per application

- Post-GenAI Outcome: 70% reduction in manual review time, with 85% accuracy at the triage stage

Problem Breakdown

Their onboarding pipeline included:

- Identity docs (IDs, proof of address)

- Income verification (pay slips, bank statements)

- Business incorporation docs (PDFs, scanned images)

Review required:

- OCR extraction

- Entity recognition

- Rule-based matching

- Flagging inconsistencies for manual escalation

This review was handled manually by a six-member operations team until the company decided to explore GenAI automation.
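The rule-based matching and flagging steps listed above can be illustrated with a minimal Python sketch. It assumes OCR has already produced raw amount strings, which usually need cleanup before comparison. The function names, the output field names, and the 2% tolerance are hypothetical and are not taken from the case study.

```python
import re
from decimal import Decimal, InvalidOperation

# Hypothetical tolerance: deposits may differ slightly from net pay
# (rounding, bank fees). Not a figure from the case study.
TOLERANCE = Decimal("0.02")  # 2%


def parse_amount(raw: str) -> Decimal:
    """Normalize an OCR'd currency string such as ' $4,500.00 ' to a Decimal."""
    cleaned = re.sub(r"[^\d.\-]", "", raw)  # drop currency symbols, commas, spaces
    try:
        return Decimal(cleaned)
    except InvalidOperation:
        raise ValueError(f"unparseable amount: {raw!r}") from None


def match_salary(payslip_net: str, bank_deposits: list[str]) -> dict:
    """Flag any deposit that deviates from the pay slip's net pay beyond TOLERANCE."""
    expected = parse_amount(payslip_net)
    mismatches = []
    for raw in bank_deposits:
        actual = parse_amount(raw)
        if abs(actual - expected) > expected * TOLERANCE:
            mismatches.append(raw)  # keep the raw string for the reviewer
    return {"monthly_salary_match": not mismatches, "mismatches": mismatches}
```

For example, `match_salary("$4,500.00", ["4,500.00", "4,480.00", "3,900.00"])` accepts the first two deposits and flags `"3,900.00"` for manual escalation. Rules like this are brittle across document layouts, which is part of why the team looked to an LLM for the reasoning step.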

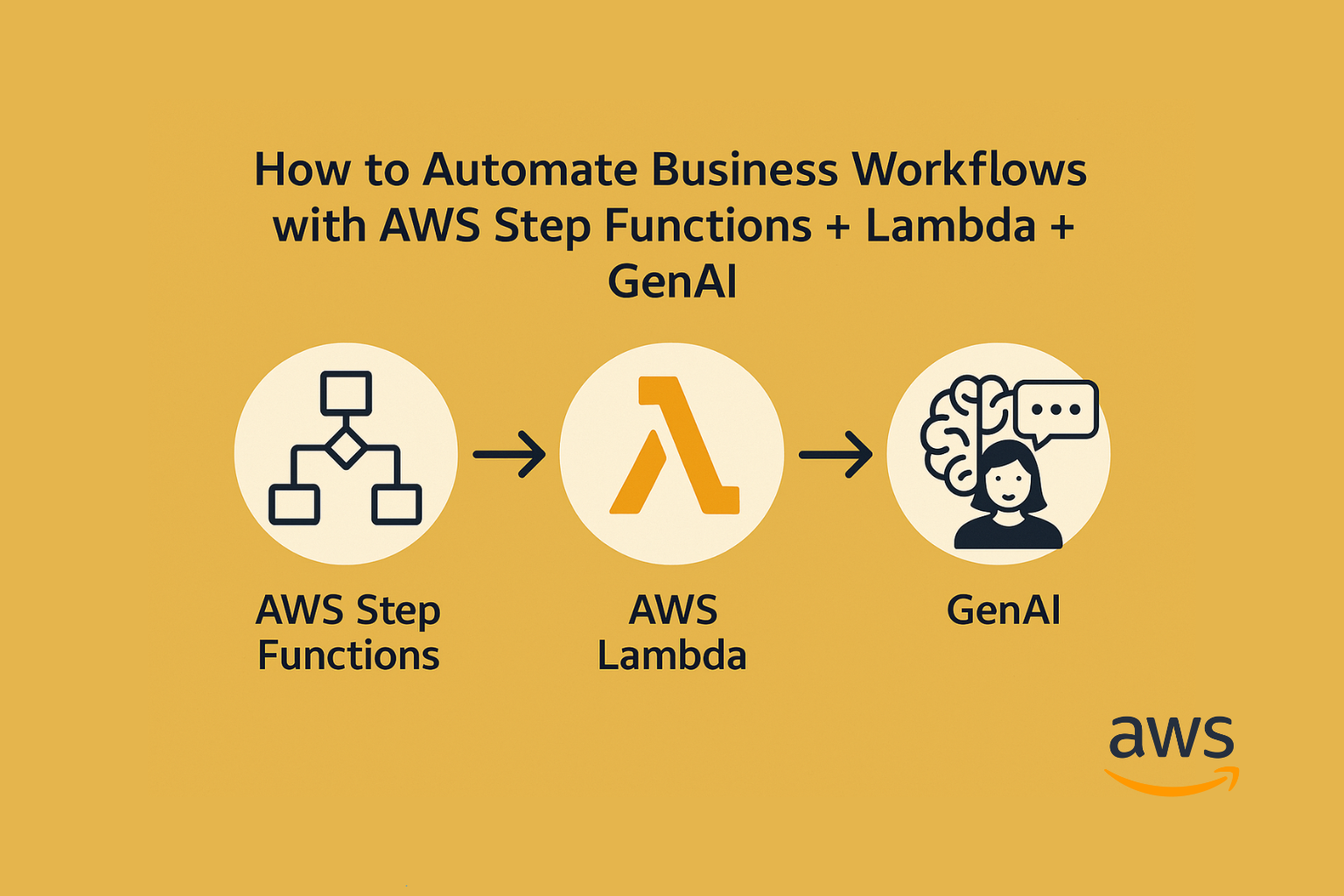

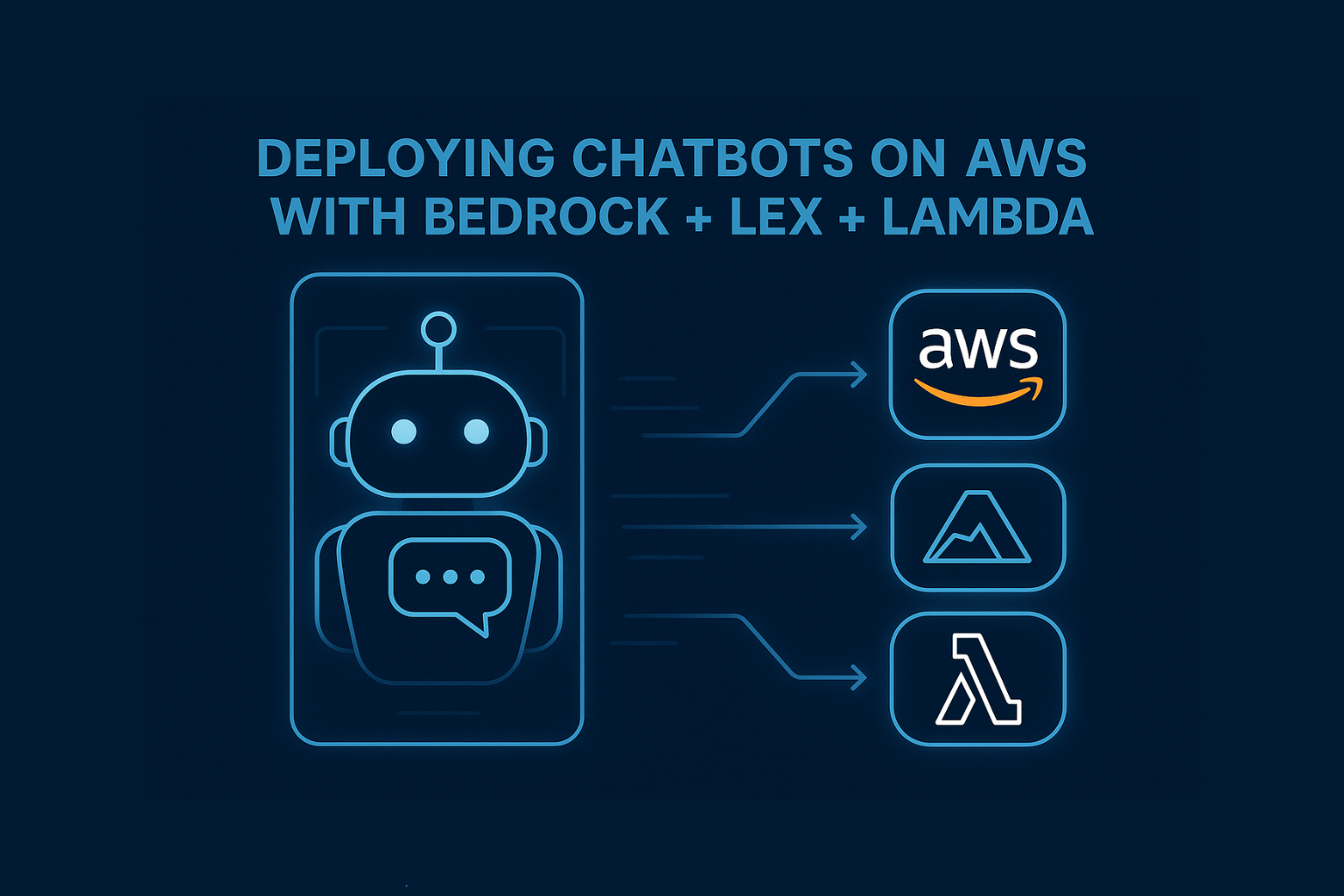

The GenAI Architecture They Built

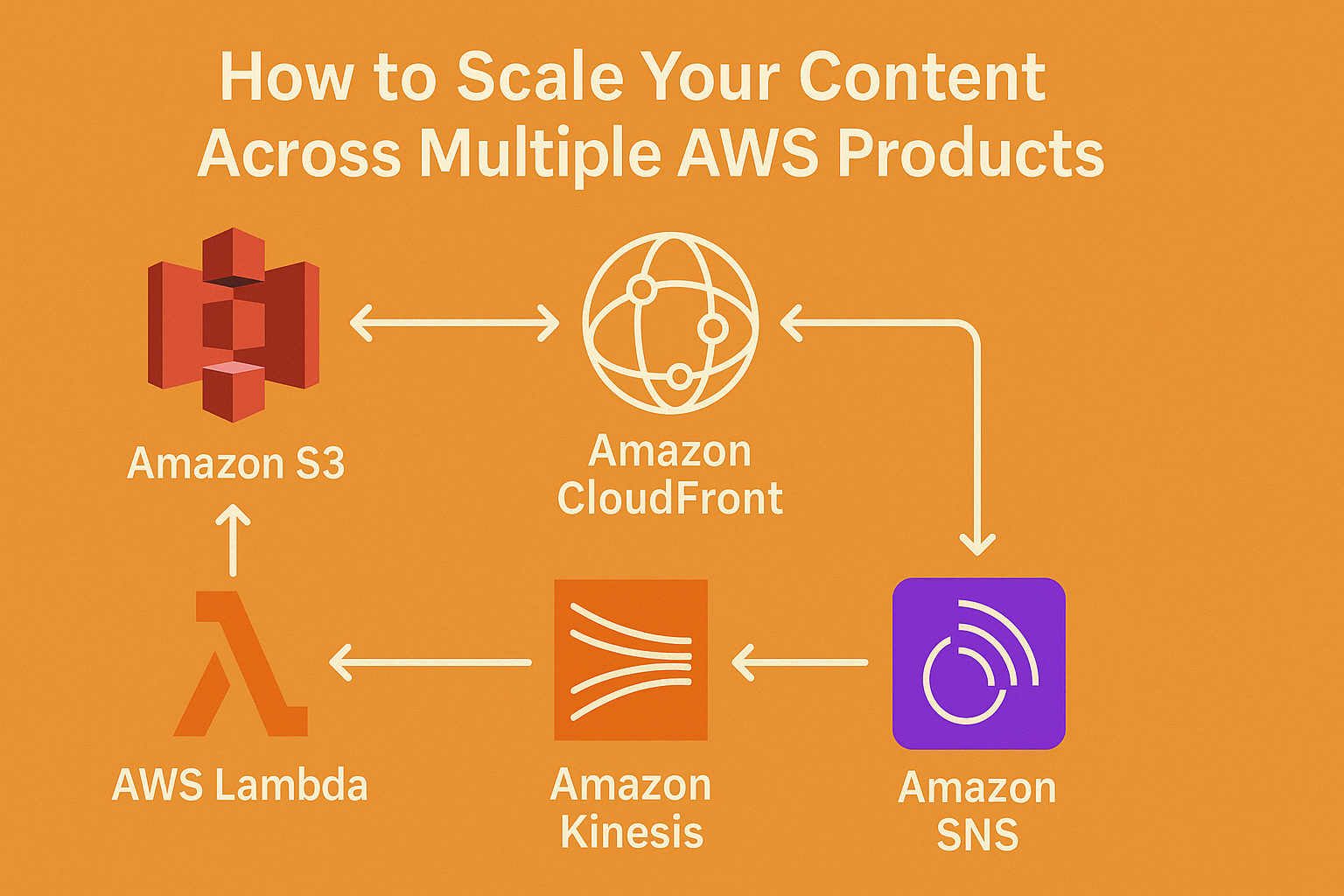

| Layer | AWS Service |

|---|---|

| Document Ingestion | S3 + Lambda |

| OCR Processing | Amazon Textract |

| LLM Reasoning | Amazon Bedrock (Claude via API) |

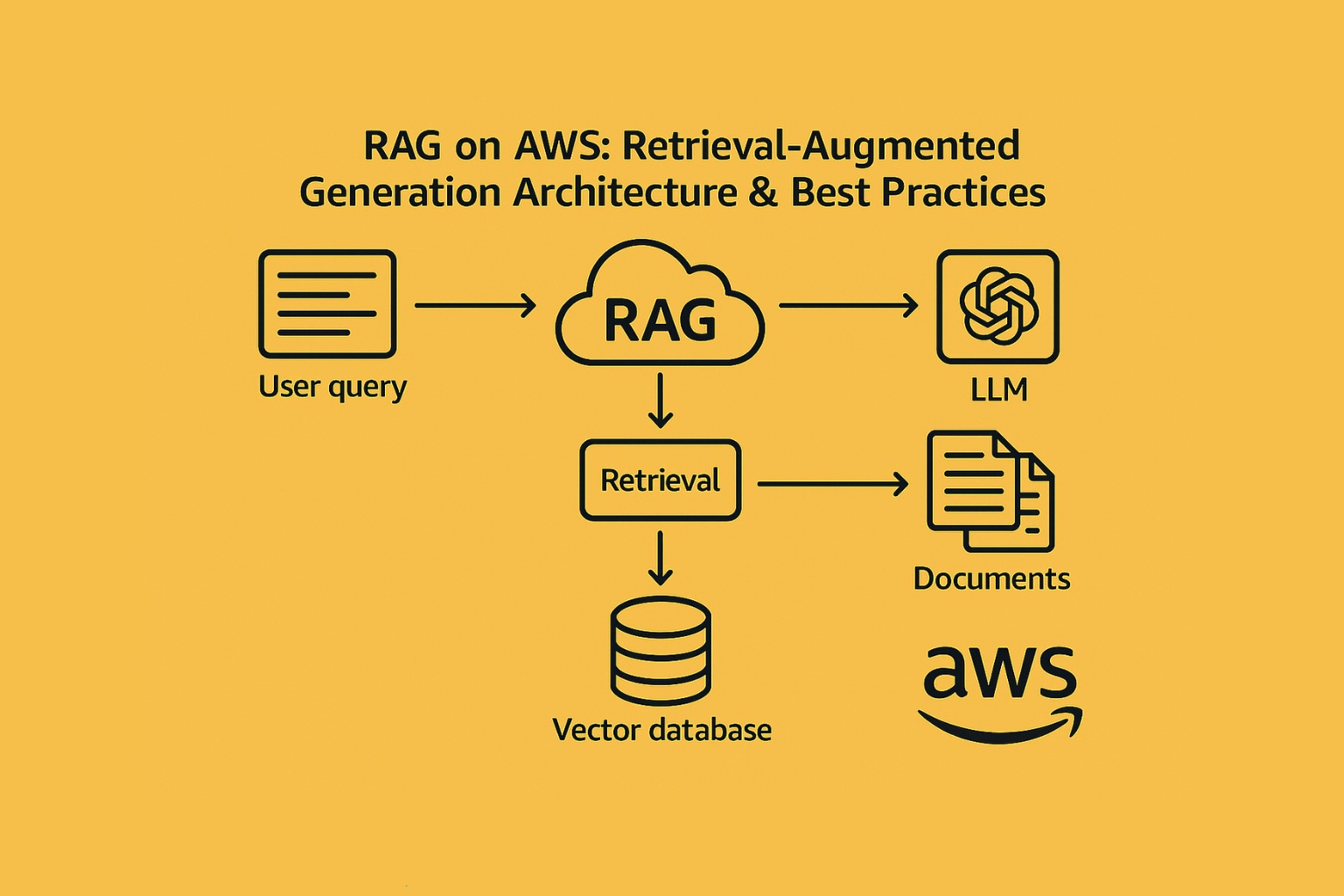

| Retrieval Layer | Titan Embeddings + OpenSearch |

| Workflow Orchestration | Step Functions + EventBridge |

| Compliance & Logs | KMS, CloudWatch, IAM scoped roles |
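To make the orchestration layer more concrete, here is a hypothetical Amazon States Language (ASL) definition for the pipeline, built as a Python dict. It chains one Lambda-backed Task per layer in the architecture table and ends by persisting the result. The account ID, region, function names, and retry settings are placeholders, not the company's actual configuration; the EventBridge trigger that starts the execution is outside this definition.

```python
import json

# Placeholder account and region; replace with real values.
ACCOUNT = "123456789012"
REGION = "us-east-1"


def lambda_arn(name: str) -> str:
    return f"arn:aws:lambda:{REGION}:{ACCOUNT}:function:{name}"


# (state name, hypothetical Lambda function name) in execution order.
STEPS = [
    ("ExtractText", "textract-extract"),       # OCR Processing (Amazon Textract)
    ("EmbedAndStore", "titan-embed-store"),    # Retrieval Layer (Titan + OpenSearch)
    ("ReasonWithLLM", "bedrock-review"),       # LLM Reasoning (Amazon Bedrock)
    ("StoreResult", "dynamodb-store-result"),  # Persist output for the ops team
]


def build_state_machine() -> dict:
    states = {}
    for i, (state_name, fn) in enumerate(STEPS):
        state = {
            "Type": "Task",
            "Resource": lambda_arn(fn),
            # Retry transient failures up to twice with exponential backoff.
            "Retry": [{"ErrorEquals": ["States.ALL"], "MaxAttempts": 2, "BackoffRate": 2.0}],
        }
        if i + 1 < len(STEPS):
            state["Next"] = STEPS[i + 1][0]  # chain to the following layer
        else:
            state["End"] = True  # last state terminates the execution
        states[state_name] = state
    return {
        "Comment": "Hypothetical onboarding document review pipeline",
        "StartAt": STEPS[0][0],
        "States": states,
    }


if __name__ == "__main__":
    print(json.dumps(build_state_machine(), indent=2))
```

Keeping each layer in its own Task means a Textract or Bedrock failure can be retried in isolation without re-running the whole review.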

Sample Flow: Reviewing Proof of Income

1. User uploads a PDF to the portal

2. Lambda stores the file in S3 → triggers a Step Functions workflow

3. Textract extracts salary fields

4. Embeddings are generated and stored for reference

5. Prompt is constructed:

   - “Validate salary consistency across pay slips and bank statements. Highlight discrepancies.”

6. Claude returns structured output:

   ```json
   {
     "monthly_salary_match": true,
     "tax_code_consistency": "flag",
     "risk_level": "low"
   }
   ```

7. Output stored in DynamoDB → ops team alerted only on red flags
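The final step, alerting ops only on red flags, depends on turning the model's JSON into a routing decision. The sketch below assumes the three keys from the sample output; the allowed `risk_level` values and the escalation rule are assumptions, not the company's documented logic.

```python
import json

# Keys from the sample output; the allowed risk levels are an assumption.
EXPECTED_KEYS = {"monthly_salary_match", "tax_code_consistency", "risk_level"}
ALLOWED_RISK = {"low", "medium", "high"}


def needs_escalation(raw_output: str) -> bool:
    """Return True if the model output should alert the ops team.

    Malformed or incomplete output is treated as a red flag, so nothing
    is auto-approved just because parsing failed.
    """
    try:
        result = json.loads(raw_output)
    except json.JSONDecodeError:
        return True
    if not isinstance(result, dict) or not EXPECTED_KEYS.issubset(result):
        return True
    if result["risk_level"] not in ALLOWED_RISK:
        return True
    return (
        result["monthly_salary_match"] is not True
        or result["tax_code_consistency"] == "flag"
        or result["risk_level"] != "low"
    )
```

Under this assumed rule, the sample output above is escalated because of its tax-code flag, even though its overall `risk_level` is `"low"`.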

Measurable Outcomes

| Metric | Before | After |
|---|---|---|
| Avg. review time | 45 mins | 13 mins (32 saved) |
| % auto-triaged | 0% | 72% |
| Escalation to ops | 100% | 28% |
| Accuracy at triage | N/A | 85% |
| Monthly cost | ~$11K (ops team) | ~$4.2K (AWS usage) |
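A quick arithmetic check of the table's headline numbers, using only the figures it reports. Note that the cost row compares operations cost before with AWS usage after, so the cost reduction is only as comparable as those two line items.

```python
# Figures from the Measurable Outcomes table.
before_minutes, after_minutes = 45, 13
ops_cost_before, aws_cost_after = 11_000, 4_200  # approximate monthly USD

minutes_saved = before_minutes - after_minutes
time_reduction = minutes_saved / before_minutes
cost_reduction = (ops_cost_before - aws_cost_after) / ops_cost_before

print(f"Minutes saved per application: {minutes_saved}")
print(f"Review time reduction: {time_reduction:.1%}")
print(f"Monthly cost reduction: {cost_reduction:.1%}")
```

This prints 32 minutes saved, a 71.1% review-time reduction (consistent with the roughly 70% headline), and a 61.8% monthly cost reduction.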

Why It Worked

- Used RAG (Retrieval-Augmented Generation) to match documents by client

- Created a feedback loop in which ops team corrections were used to refine prompt logic

- Kept the entire flow within a VPC using AWS PrivateLink and IAM

- Used OpenSearch to store embeddings and provide fast similarity search

- Applied custom prompt templates per document type
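Matching documents by client with RAG comes down to retrieving only that client's embeddings before ranking by similarity. In this architecture that would be an OpenSearch k-NN query restricted to one client; the in-memory version below is only a stand-in to show the idea. The `StoredDoc` fields and the toy 3-dimensional vectors are assumptions (real embeddings have far more dimensions).

```python
import math
from dataclasses import dataclass


@dataclass
class StoredDoc:
    client_id: str
    doc_type: str
    text: str
    embedding: list[float]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(store: list[StoredDoc], client_id: str,
             query_vec: list[float], k: int = 3) -> list[StoredDoc]:
    """Return the k most similar documents that belong to one client only."""
    # Filter first, so another client's documents can never enter the prompt.
    candidates = [d for d in store if d.client_id == client_id]
    candidates.sort(key=lambda d: cosine(d.embedding, query_vec), reverse=True)
    return candidates[:k]
```

Filtering by client before ranking is the important design choice here: it keeps retrieval from mixing one applicant's pay slips with another's, which matters for both accuracy and compliance.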

Lessons Learned

- Initial prompts were too verbose, which led to token overages

- Textract required post-cleaning for tabular data

- Segmenting templates by document type improved response quality

- Claude 3 (preview) handled multi-column layouts better than Claude v1
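The first and third lessons, short prompts and one template per document type, can be combined when building the Bedrock request. The sketch below builds an Anthropic Messages API body for Bedrock's `InvokeModel`. The income template reuses the case study's prompt; the address template, the `max_tokens` default, and the function name are hypothetical.

```python
import json

# One short template per document type. Only the income prompt comes from
# the case study; the address template is a hypothetical example.
TEMPLATES = {
    "proof_of_income": (
        "Validate salary consistency across pay slips and bank statements. "
        "Highlight discrepancies. Respond with JSON only, using keys: "
        "monthly_salary_match, tax_code_consistency, risk_level."
    ),
    "proof_of_address": (
        "Check that the name and address match the identity document. "
        "Respond with JSON only, using keys: name_match, address_match, risk_level."
    ),
}


def build_request_body(doc_type: str, extracted_text: str, max_tokens: int = 300) -> str:
    """Build a Claude Messages API request body as a JSON string."""
    template = TEMPLATES[doc_type]  # raises KeyError for unsupported document types
    body = {
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,  # caps response length to limit token spend
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": f"{template}\n\nDocuments:\n{extracted_text}"}
                ],
            }
        ],
    }
    return json.dumps(body)
```

The resulting string would be passed as `body` to a boto3 `bedrock-runtime` client's `invoke_model(modelId=..., body=...)`, with the model ID for the chosen Claude version. Failing loudly on an unknown document type keeps a mismatched template from silently producing a misleading review.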

Tools They Recommend

- PromptLayer for prompt logging

- LangChain for chaining and fallback handling

- CloudTrail + Cost Explorer for usage and budget alerts

- Bedrock Guardrails to help enforce a “no hallucination” policy
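LangChain provides its own fallback mechanisms; the plain-Python sketch below is not LangChain's API. It only illustrates the underlying pattern: try a primary model, then fall back to alternatives if a call fails. The model callables are hypothetical stand-ins for whatever client wrappers a team uses.

```python
from typing import Callable, Sequence


def invoke_with_fallback(prompt: str, models: Sequence[Callable[[str], str]]) -> str:
    """Try each model callable in order and return the first successful response."""
    errors = []
    for model in models:
        try:
            return model(prompt)
        except Exception as exc:  # in practice, catch the client's specific errors
            errors.append(exc)
    # Every model failed: surface the last error as the cause.
    raise RuntimeError(f"all {len(models)} models failed") from (errors[-1] if errors else None)
```

For example, `invoke_with_fallback(prompt, [call_primary, call_backup])` would return the backup model's answer if the primary call raises a throttling error, where both callables are hypothetical.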

Conclusion

This fintech team didn’t reinvent their workflow; they enhanced it with GenAI, using AWS-native tools to remove repetitive review work and accelerate compliance decisions.

The result? Less manual effort, faster onboarding, better audit trails, and a more scalable ops model.

Whether you’re in finance, insurance, or healthcare, this case shows that with the right LLM architecture, retrieval strategy, and workflow integration, GenAI is more than hype: it delivers measurable impact.