Introduction

Amazon Titan is AWS’s own family of foundation models, offered via Amazon Bedrock.

Unlike OpenAI or Anthropic models, Titan is designed to be enterprise-first, cost-efficient, and natively integrated with AWS services.

But it’s not a silver bullet for every GenAI problem.

In this post, we’ll break down:

- Where Titan models shine

- Where they fall short

- How to decide if they’re right for your use case

What Is Amazon Titan?

Titan is the suite of foundation models that AWS builds in-house. The models are available only through Amazon Bedrock.

As of 2025, Titan includes:

- Titan Text Express: General-purpose text generation

- Titan Embeddings: Converts text into numerical vectors

- Titan Image Generator (Preview): Text-to-image model

- Titan Text Lite (Coming soon): Lightweight, low-latency LLM

All Titan models are served through Bedrock inside AWS, so you can reach them privately from your VPC and apply the IAM, encryption, and compliance controls you already use.
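To make the model list concrete, here is a minimal sketch of calling Titan Text Express through the Bedrock runtime API. It assumes a `bedrock-runtime` client created with boto3 (for example `boto3.client("bedrock-runtime")`) and that the model is enabled in your account and Region. The helper names `build_request`, `parse_response`, and `generate` are illustrative, not part of any AWS SDK.

```python
import json

# Published model ID for Titan Text Express; confirm it is enabled in
# your account and Region before relying on it.
MODEL_ID = "amazon.titan-text-express-v1"


def build_request(prompt, max_tokens=256, temperature=0.2):
    """Build the JSON request body that Titan Text models expect."""
    return json.dumps({
        "inputText": prompt,
        "textGenerationConfig": {
            "maxTokenCount": max_tokens,
            "temperature": temperature,
            "topP": 0.9,
        },
    })


def parse_response(raw_body):
    """Pull the generated text out of a Titan Text response body."""
    payload = json.loads(raw_body)
    return payload["results"][0]["outputText"]


def generate(client, prompt):
    """Invoke the model using a bedrock-runtime client supplied by the caller."""
    response = client.invoke_model(
        modelId=MODEL_ID,
        body=build_request(prompt),
        contentType="application/json",
        accept="application/json",
    )
    return parse_response(response["body"].read())
```

Passing the client in, rather than creating it inside the function, keeps credentials and Region selection in one place and makes the helper easy to exercise with a stub.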

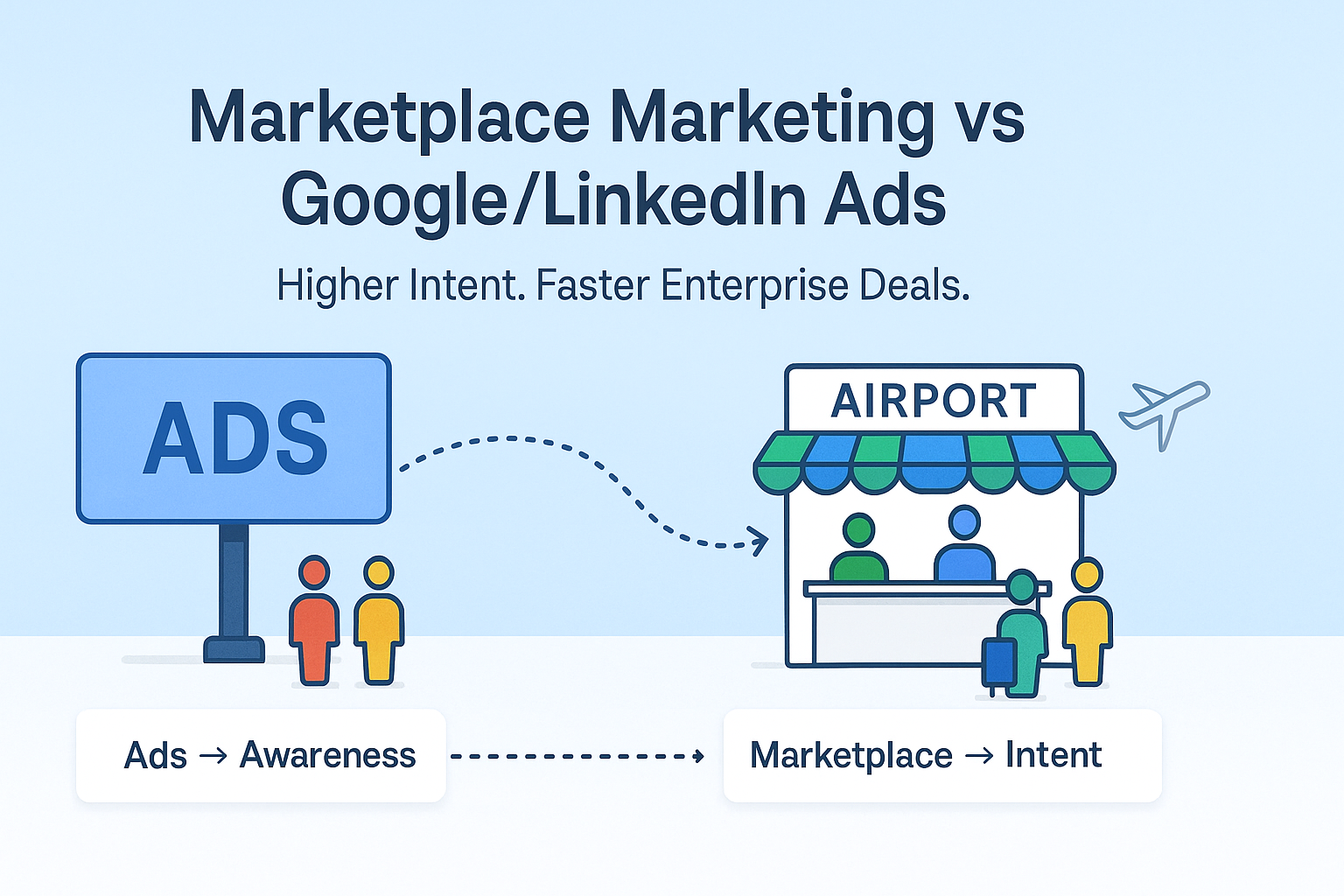

Strengths of Amazon Titan

| Feature | Why It Matters |
|---|---|
| Enterprise Security | Full support for IAM, VPC access, encryption at rest/in-transit |
| No Data Leakage | Prompts and outputs are not used for training |
| Solid Base Performance | Titan Text Express is comparable to GPT-3.5 for many tasks |
| Great Embeddings Quality | Titan Embeddings are AWS-optimized and integrate natively with OpenSearch |
| Lower Cost | More predictable pricing than OpenAI models in many workloads |
| Fast Latency in AWS Regions | Bedrock automatically routes Titan requests regionally |
| Custom Guardrails Support | Easy to integrate tone, topic, and output safety limits via Bedrock’s policy layer |
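As a rough illustration of the guardrails row, the sketch below builds the arguments for an `invoke_model` call that attaches a Bedrock guardrail to a Titan request. It assumes you have already created a guardrail. The ID `gr-example123` in the usage note is a placeholder, and `guarded_invoke_kwargs` is a hypothetical helper name.

```python
import json


def guarded_invoke_kwargs(prompt, guardrail_id, guardrail_version="DRAFT"):
    """Return keyword arguments for invoke_model with a guardrail attached.

    guardrail_id and guardrail_version must refer to a guardrail that
    already exists in your account; the values used here are assumptions.
    """
    return {
        "modelId": "amazon.titan-text-express-v1",
        "body": json.dumps({"inputText": prompt}),
        "contentType": "application/json",
        "accept": "application/json",
        "guardrailIdentifier": guardrail_id,
        "guardrailVersion": guardrail_version,
    }
```

With a boto3 `bedrock-runtime` client, usage would look like `client.invoke_model(**guarded_invoke_kwargs("Summarize this ticket", "gr-example123"))`.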

Limitations of Titan (as of mid-2025)

| Limitation | Impact |
|---|---|
| Fewer model variants | No fine-tuned specialist versions like GPT-4 Turbo or Claude 3.5 yet |
| Smaller community support | Fewer prompt libraries, eval benchmarks, or tutorials |
| Weaker on creative generation | Titan is precise but less creative in storytelling or ideation |
| Model weights not open | You can’t self-host Titan outside of Bedrock |
| Limited multi-modal support | Image and speech support is still preview-only |

Ideal Use Cases for Titan Models

Use Titan when you need:

- Reliable summarization, classification, and rewriting

- High-speed inference with AWS-native routing

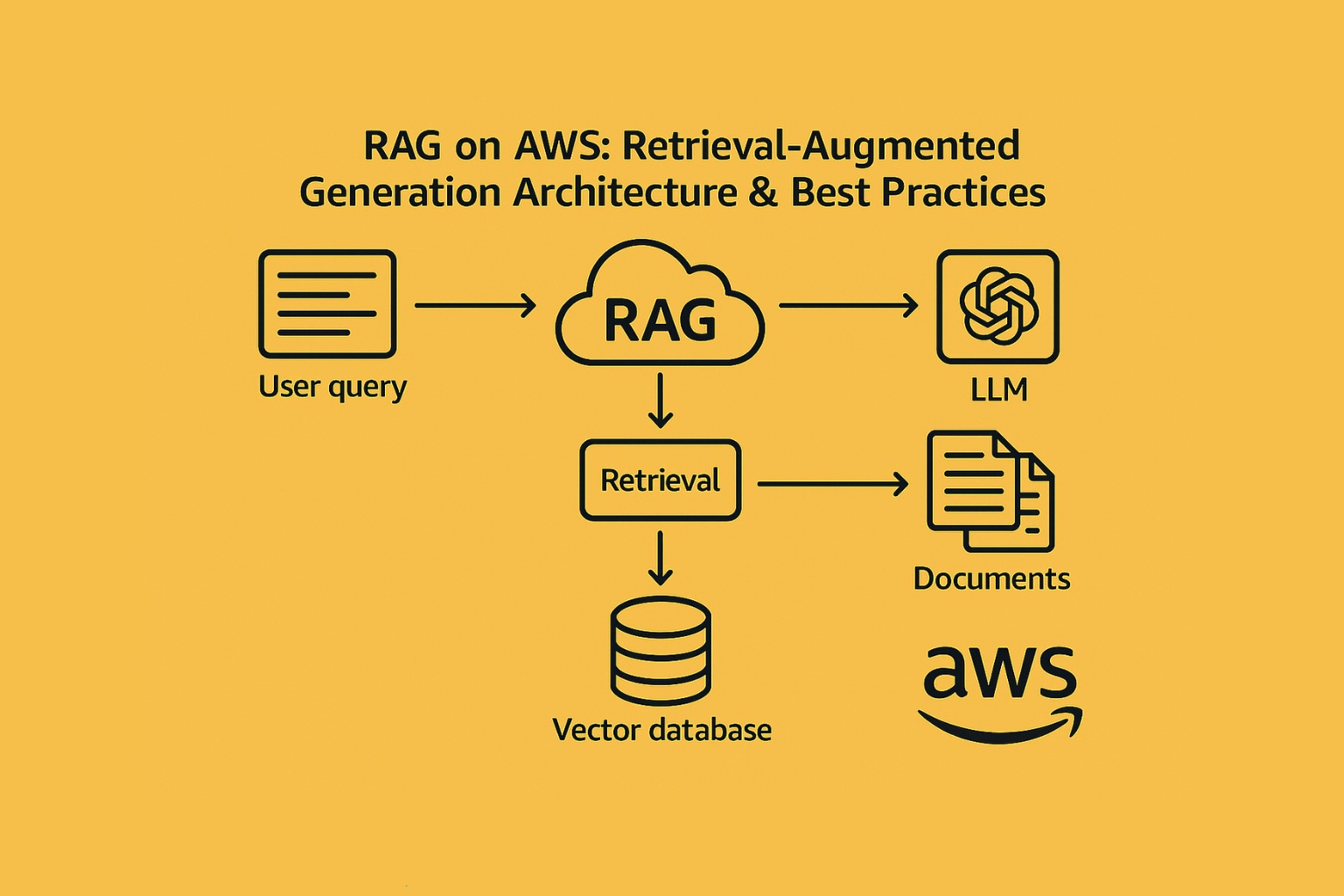

- Secure embedding generation for RAG pipelines

- Industry-safe tone control via guardrails

- Predictable cost for scale-sensitive GenAI apps

Examples:

- B2B SaaS summarizers

- Knowledge base Q&A bots

- Contract clause extraction

- Support ticket routing

- Vector search in regulated data
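The embedding and vector search use cases above can be sketched in a few lines. This is a simplified, in-memory illustration, assuming a boto3 `bedrock-runtime` client and the Titan Embeddings model ID `amazon.titan-embed-text-v1`. A production RAG pipeline would store vectors in a vector index instead of a Python dict, and the helper names here are illustrative.

```python
import json
import math

# Published model ID for Titan Embeddings (text, v1); confirm availability
# in your Region.
EMBED_MODEL_ID = "amazon.titan-embed-text-v1"


def embed(client, text):
    """Return the embedding vector for text using a bedrock-runtime client."""
    response = client.invoke_model(
        modelId=EMBED_MODEL_ID,
        body=json.dumps({"inputText": text}),
        contentType="application/json",
        accept="application/json",
    )
    return json.loads(response["body"].read())["embedding"]


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec, doc_vecs, k=3):
    """Return the IDs of the k documents most similar to the query.

    doc_vecs maps a document ID to its embedding vector.
    """
    ranked = sorted(
        doc_vecs.items(),
        key=lambda item: cosine_similarity(query_vec, item[1]),
        reverse=True,
    )
    return [doc_id for doc_id, _ in ranked[:k]]
```

The retrieved documents would then be placed into the prompt of a text model to answer the user's question.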

When NOT to Use Titan

Avoid Titan when you need:

- Cutting-edge reasoning (e.g., multi-step chain-of-thought)

- Ultra-creative storytelling or marketing copy

- Multi-modal generation (text + image/audio)

- Open-weight models for offline use

- Real-time agents that need flexible function calling + tools

In those cases, consider:

- Anthropic Claude 3 (better for multi-turn conversations)

- Meta Llama 2/3 via SageMaker (open weights)

- GPT-4 via Azure if multi-modal creativity is key
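One way to make this checklist repeatable is to encode it as a small helper. The sketch below is purely illustrative: the `Requirements` fields mirror the five "avoid" criteria listed above, and the names and return strings are invented for this example.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    """Flags for the needs that this post lists as reasons to look beyond Titan."""
    needs_advanced_reasoning: bool = False
    needs_creative_writing: bool = False
    needs_multimodal_output: bool = False
    needs_open_weights: bool = False
    needs_tool_calling: bool = False


# Human-readable label for each flag, in the order the post lists them.
BLOCKER_LABELS = {
    "needs_advanced_reasoning": "cutting-edge reasoning",
    "needs_creative_writing": "ultra-creative storytelling or marketing copy",
    "needs_multimodal_output": "multi-modal generation",
    "needs_open_weights": "open weights for offline use",
    "needs_tool_calling": "flexible function calling and tools",
}


def titan_blockers(req):
    """Return the labels of every requirement that argues against Titan."""
    return [label for field, label in BLOCKER_LABELS.items() if getattr(req, field)]


def recommend(req):
    """Summarize the decision as a short string."""
    blockers = titan_blockers(req)
    if not blockers:
        return "Titan is a reasonable default"
    return "Consider alternatives because you need: " + ", ".join(blockers)
```

For example, `recommend(Requirements(needs_open_weights=True))` points you toward an alternative, while a default `Requirements()` keeps Titan on the shortlist.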

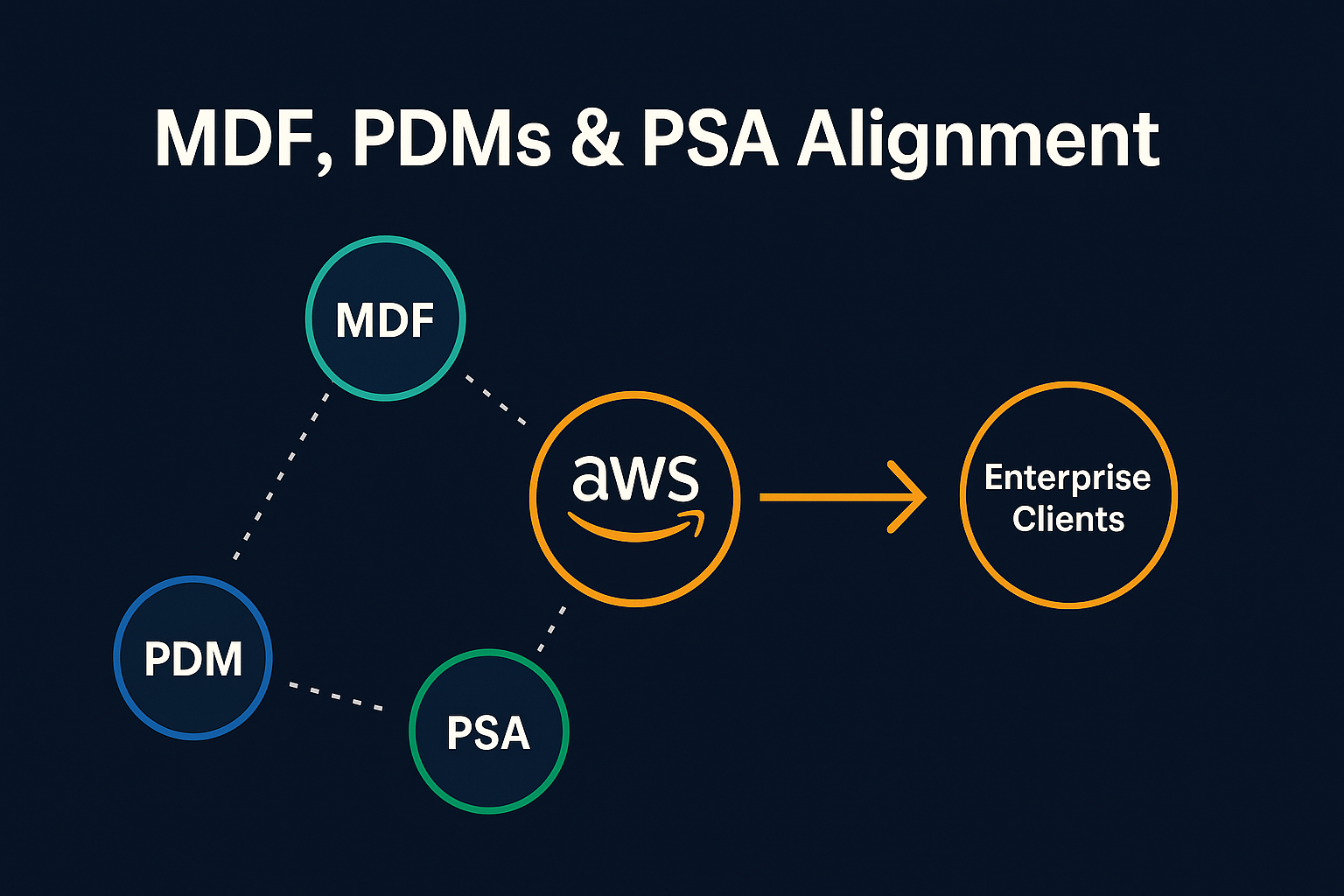

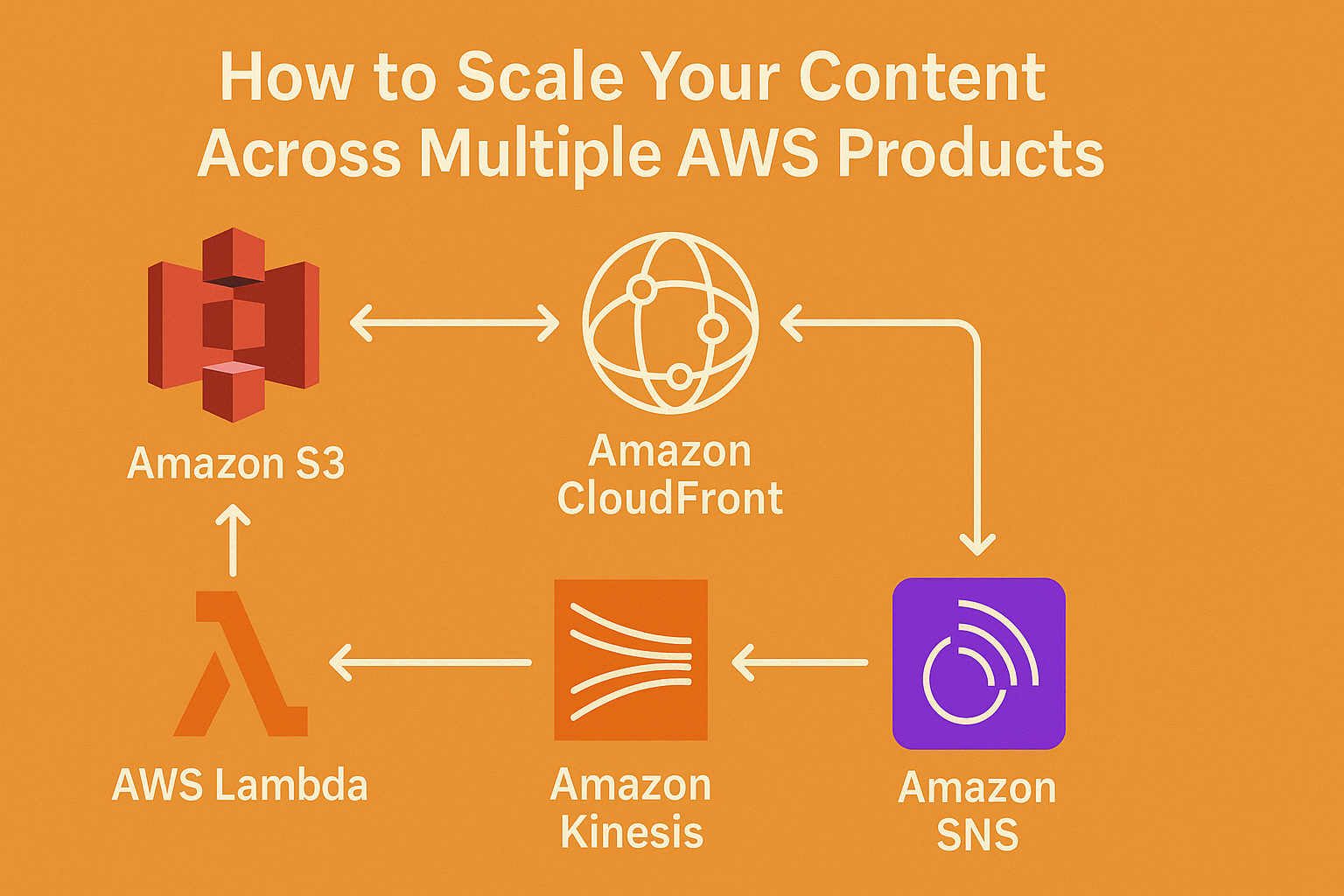

Titan in the Bigger AWS GenAI Stack

Titan pairs naturally with:

- OpenSearch (for vector search via Titan Embeddings)

- Kendra (for hybrid search + Q&A)

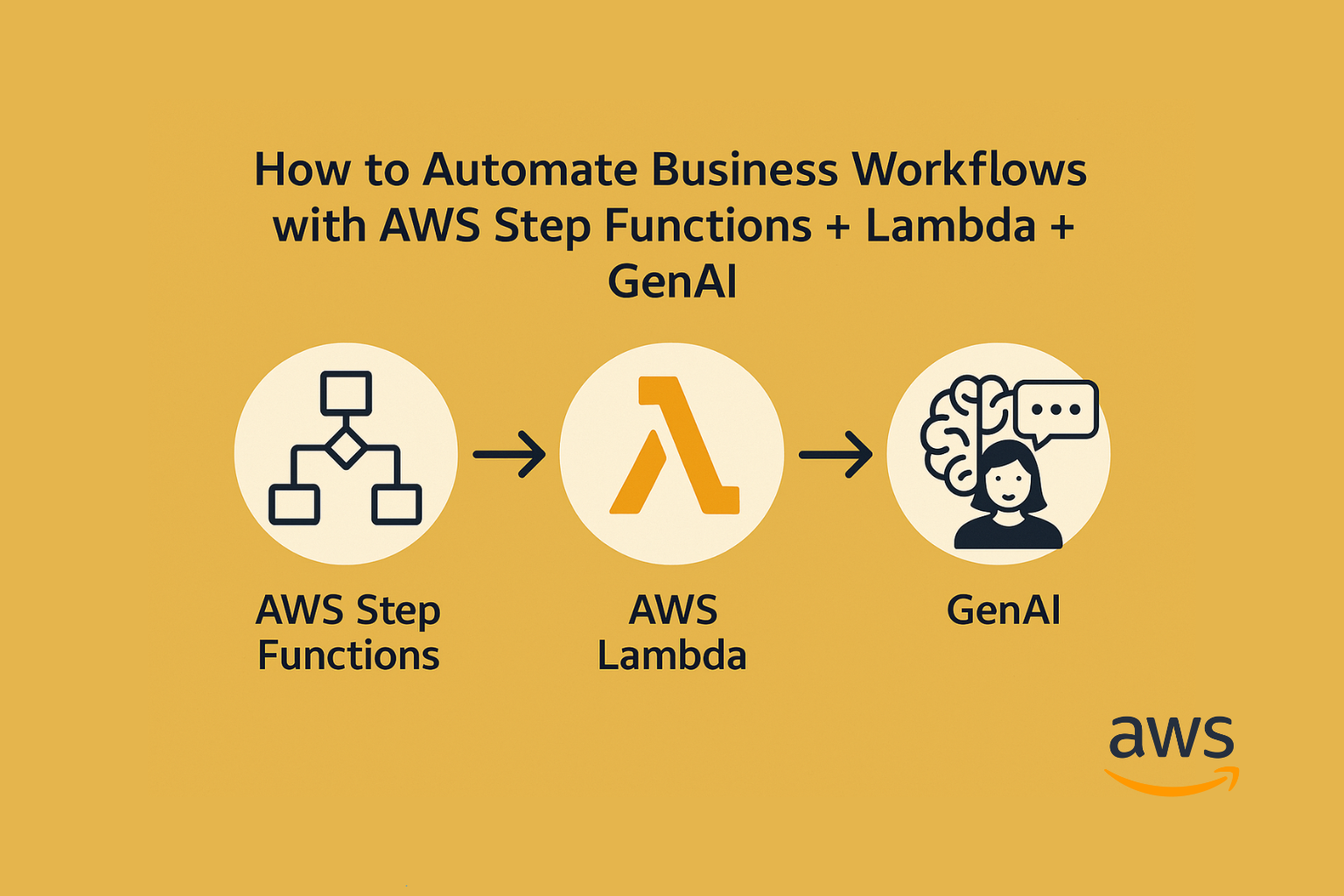

- Step Functions (for chain-of-prompts)

- Amazon Lex (for chatbot use cases)

- Guardrails for Bedrock (for safe content control)
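To show how the OpenSearch pairing fits together, here is a hedged sketch of the index definition and query body for k-NN search over Titan embeddings. It assumes the OpenSearch k-NN plugin and the 1536-dimension vectors returned by `amazon.titan-embed-text-v1`. The field names `text` and `embedding` are arbitrary choices for this example.

```python
# Titan Embeddings v1 (amazon.titan-embed-text-v1) returns 1536-dimension
# vectors; change this if you use a different embeddings model.
EMBEDDING_DIMENSION = 1536


def knn_index_body(dimension=EMBEDDING_DIMENSION):
    """Index settings and mappings for storing text alongside its embedding."""
    return {
        "settings": {"index": {"knn": True}},
        "mappings": {
            "properties": {
                "text": {"type": "text"},
                "embedding": {"type": "knn_vector", "dimension": dimension},
            }
        },
    }


def knn_query_body(query_vector, k=5):
    """Search body that returns the k nearest documents to query_vector."""
    return {
        "size": k,
        "query": {"knn": {"embedding": {"vector": list(query_vector), "k": k}}},
    }
```

These dictionaries are the JSON bodies you would send when creating the index and running a search. The query vector comes from embedding the user's question with the same Titan model used at indexing time.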

Conclusion

Titan isn’t the loudest GenAI model out there, but it’s one of the most enterprise-friendly, efficient, and secure.

Use it when you need:

- Reliability over flair

- Security over virality

- Simplicity over complexity

In short: Titan is what GenAI looks like when AWS builds it for AWS customers.