Introduction

DevOps thrives on automation. But as environments grow more complex, static automation rules can create just as much friction as they remove.

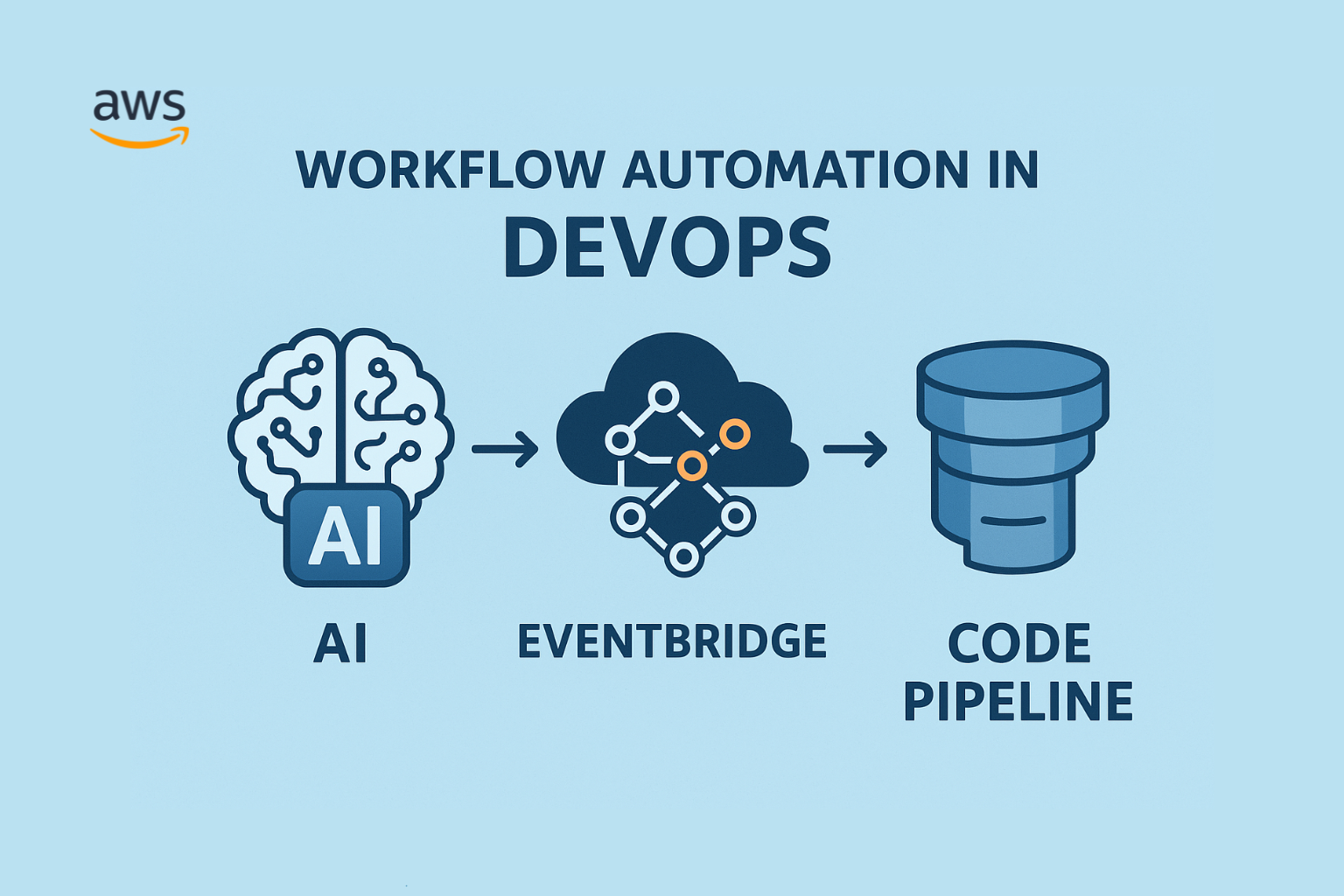

AWS already gives us powerful native automation tools like EventBridge and CodePipeline.

Now, by integrating AI into that workflow, you can build adaptive pipelines: pipelines that change behavior based on context, risk level, and past outcomes.

This post explores how to combine AI with EventBridge and CodePipeline to create pipelines that aren’t just automated… they’re intelligent.

The Challenge with Traditional Pipelines

Standard CI/CD workflows trigger the same build-test-deploy cycle every time:

Commit code → Run all tests → Deploy → Notify team

It’s predictable, but not efficient.

Problems you’ve likely seen:

- Wasted builds on low-risk changes (e.g., typos, docs updates).

- Delayed deployments for urgent fixes that get stuck in long approval queues.

- Reactive rollbacks after a production failure instead of proactive prevention.

Adding AI Into the DevOps Flow

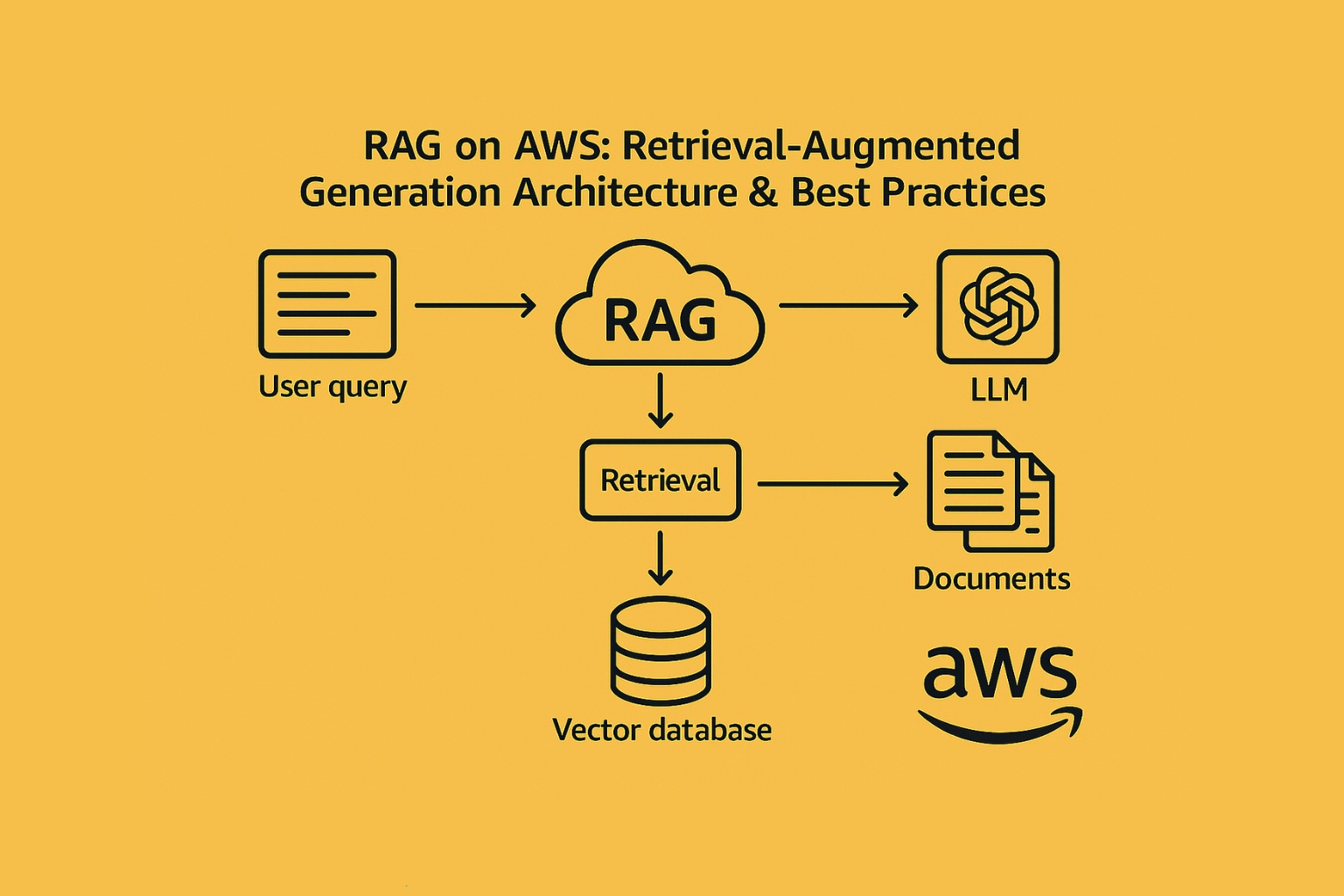

By inserting AI decision points between EventBridge triggers and CodePipeline actions, you can create a dynamic automation loop.

Example: AI-Driven Pipeline Flow

- Event Trigger:
  - EventBridge catches a commit or PR merge event (for example, from CodeCommit) or a CloudWatch alarm.
- AI Risk Scoring:
  - A Lambda function sends commit metadata, code diffs, and test history to an AI model (hosted on Amazon Bedrock or SageMaker).
  - The model returns a risk level: low, medium, or high.
- Conditional Pipeline Execution:
  - Low risk: Skip the full regression suite and deploy straight to staging.
  - Medium risk: Run targeted tests, then deploy with automated approvals.
  - High risk: Run the full CI/CD pipeline with mandatory human review.
- Post-Deployment AI Monitoring:
  - AI-based anomaly detection on logs and metrics raises alerts, which EventBridge routes to a rollback handler.
  - If anomalies are detected, the handler triggers a CodePipeline rollback automatically.
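To make the three tiers concrete, here is a minimal Python sketch of how a routing Lambda might turn the model's risk level into an execution plan. The `ExecutionPlan` type and its field values are hypothetical, not part of any CodePipeline API. The one deliberate design choice: any unexpected model output falls back to the high-risk plan, so a confused model can never skip human review.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExecutionPlan:
    """Hypothetical description of how one pipeline run should behave."""
    test_scope: str  # "skip_regression", "targeted", or "full"
    approval: str    # "none", "automated", or "human"


# One plan per risk tier, mirroring the flow described above.
PLANS = {
    "low": ExecutionPlan(test_scope="skip_regression", approval="none"),
    "medium": ExecutionPlan(test_scope="targeted", approval="automated"),
    "high": ExecutionPlan(test_scope="full", approval="human"),
}


def plan_for(risk_level: str) -> ExecutionPlan:
    """Map a model's risk level to a plan, failing safe to the high-risk plan."""
    return PLANS.get(risk_level.strip().lower(), PLANS["high"])
```

For example, `plan_for(" Medium ")` yields the targeted-tests, automated-approval plan, while a garbled reply such as `plan_for("banana")` yields the full run with human review.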

Benefits of AI + EventBridge + CodePipeline

| Benefit | Traditional Pipeline | AI-Enhanced Pipeline |
|---|---|---|
| Build Time | Same for all commits | Shorter for low-risk changes |
| Deployment Speed | Static approvals | Dynamic, context-based approvals |
| Compute Cost | Higher due to redundant tests | Lower, with less compute wasted on redundant tests |
| Incident Response | Reactive | Proactive rollback triggers |
| Team Focus | Manual decision-making | AI handles routing logic |

Implementation Blueprint

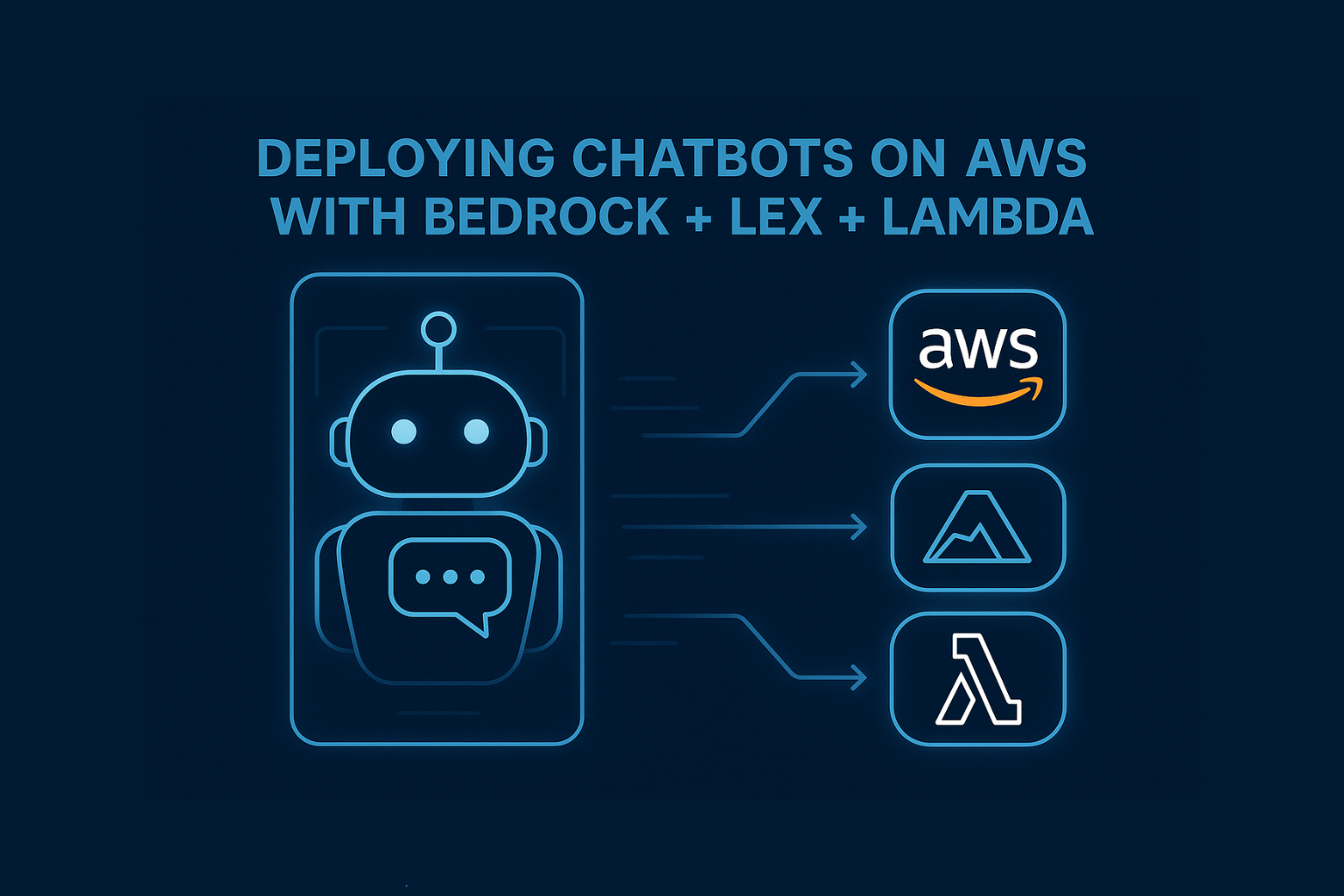

Step 1:

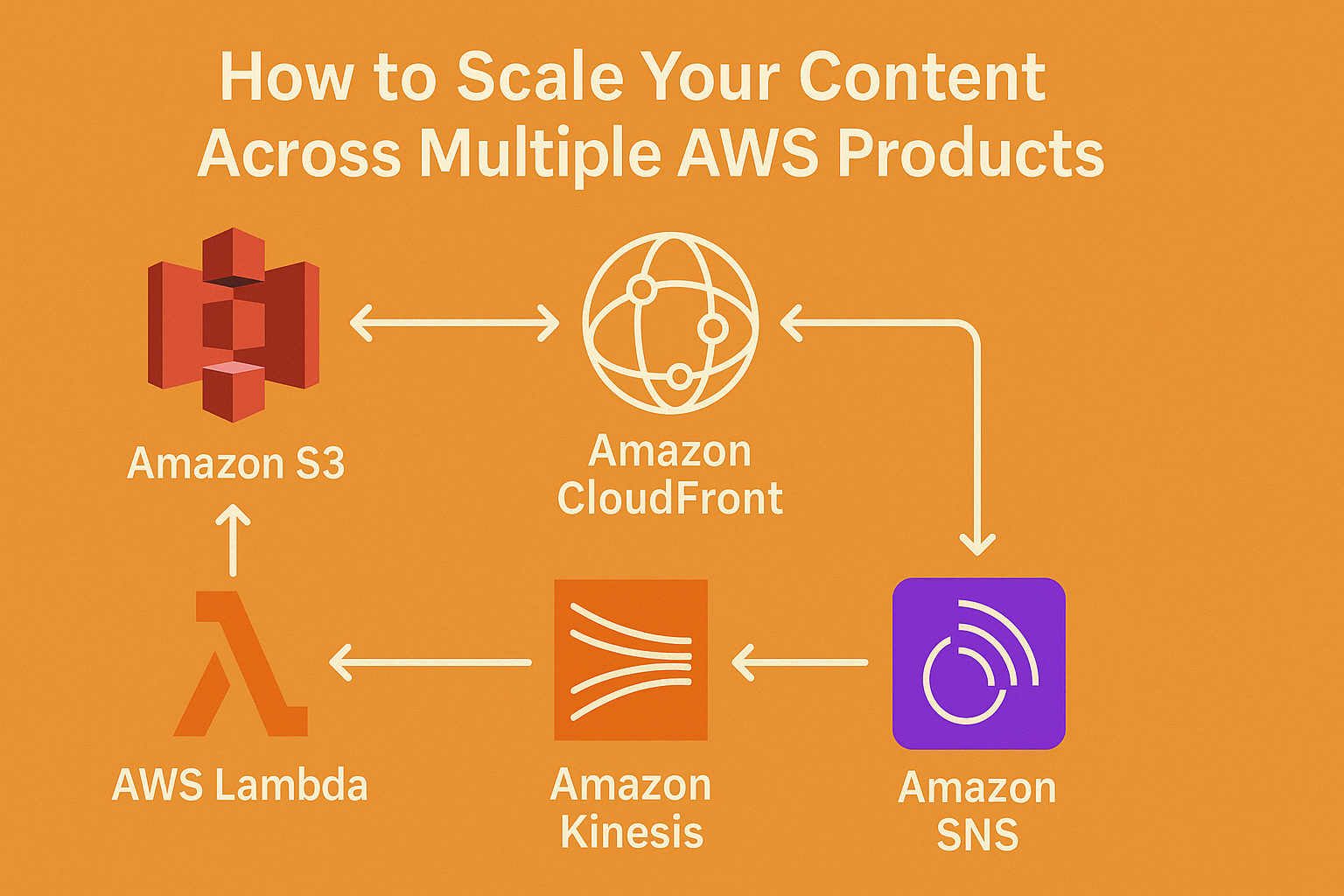

Define event sources in EventBridge: AWS services such as CodeCommit and CloudWatch, plus external tools such as GitHub or Jira through partner integrations or webhooks.
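For the AWS-native sources, each EventBridge rule boils down to an event pattern. Below are two sketches written as Python dicts: one for pushes to a CodeCommit branch and one for CloudWatch alarms entering the `ALARM` state. Restricting to the `main` branch is an assumption. The `matches` helper is a deliberately simplified local check (nested objects and exact-value lists only); EventBridge's real matcher supports far more operators, and its `TestEventPattern` API is the authoritative way to test a pattern.

```python
import json

# Fires when a branch in a CodeCommit repository is created or updated.
COMMIT_PATTERN = {
    "source": ["aws.codecommit"],
    "detail-type": ["CodeCommit Repository State Change"],
    "detail": {
        "event": ["referenceCreated", "referenceUpdated"],
        "referenceType": ["branch"],
        "referenceName": ["main"],  # assumption: only the main branch matters
    },
}

# Fires when a CloudWatch alarm transitions into the ALARM state.
ALARM_PATTERN = {
    "source": ["aws.cloudwatch"],
    "detail-type": ["CloudWatch Alarm State Change"],
    "detail": {"state": {"value": ["ALARM"]}},
}


def matches(pattern: dict, event: dict) -> bool:
    """Simplified subset of EventBridge matching: nested objects and exact-value lists."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            # Recurse into nested objects such as "detail".
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            return False
    return True


# The JSON string you would use as the rule's event pattern.
COMMIT_PATTERN_JSON = json.dumps(COMMIT_PATTERN)
```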

Step 2:

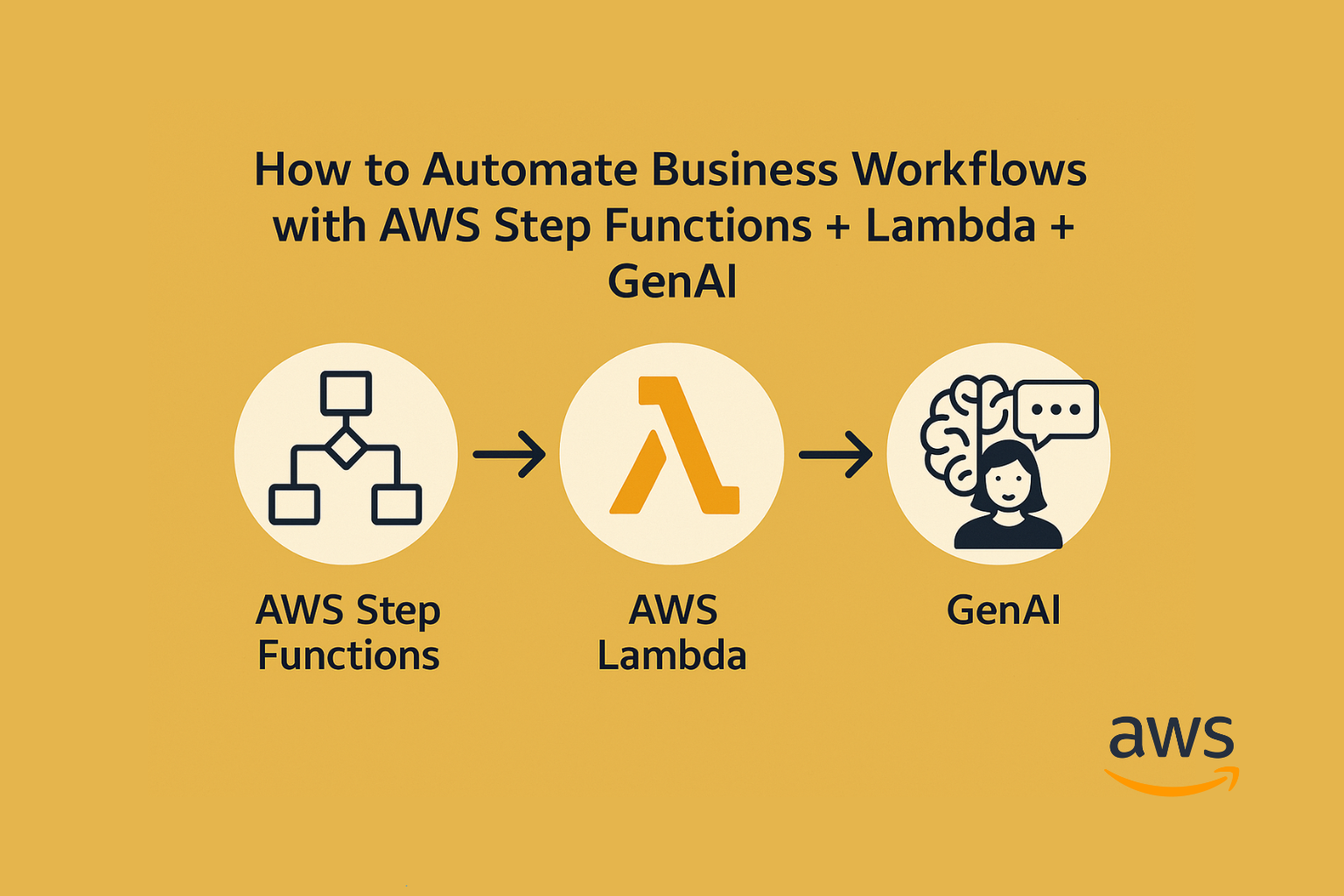

Create a Lambda function that:

- Ingests the event payload.

- Calls the AI model endpoint to score the change.

- Decides which pipeline path to run.
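A minimal sketch of that Lambda function, assuming Amazon Bedrock's Converse API as the model endpoint. The prompt, the route names in `ROUTES`, and the risk-to-route mapping are hypothetical. Fetching diffs and test history is left out, so only fields from the CodeCommit event are sent. The Bedrock client is passed in so the logic can be tested without AWS; in Lambda you would create it once with `boto3.client("bedrock-runtime")` and read the model ID from configuration.

```python
import json
import re

RISK_LEVELS = ("low", "medium", "high")

# Hypothetical mapping from risk level to one of the execution paths in Step 3.
ROUTES = {
    "low": "partial-test-deploy",
    "medium": "partial-test-deploy",
    "high": "full-pipeline",
}

PROMPT = (
    "You are assessing a code change before deployment. "
    "Answer with exactly one word: low, medium, or high.\n\n"
    "Change metadata:\n{metadata}"
)


def parse_risk(reply: str) -> str:
    """Return the first risk level found in the model's reply, defaulting to 'high'."""
    for word in re.findall(r"[a-z]+", reply.lower()):
        if word in RISK_LEVELS:
            return word
    return "high"


def score_change(bedrock_client, model_id: str, metadata: dict) -> str:
    """Ask the model for a risk level via the Bedrock Converse API."""
    response = bedrock_client.converse(
        modelId=model_id,
        messages=[{
            "role": "user",
            "content": [{"text": PROMPT.format(metadata=json.dumps(metadata, indent=2))}],
        }],
        inferenceConfig={"maxTokens": 10, "temperature": 0.0},
    )
    return parse_risk(response["output"]["message"]["content"][0]["text"])


def make_handler(bedrock_client, model_id: str):
    """Build a Lambda handler that ingests the event, scores it, and picks a route."""
    def handler(event, context):
        detail = event.get("detail", {})
        metadata = {key: detail.get(key) for key in ("repositoryName", "referenceName", "commitId")}
        risk = score_change(bedrock_client, model_id, metadata)
        return {"commitId": metadata["commitId"], "risk": risk, "route": ROUTES[risk]}
    return handler
```

Defaulting to `high` when the reply can't be parsed means a malformed model answer sends the change down the slowest, safest path rather than skipping tests.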

Step 3:

Set up multiple CodePipeline execution paths:

- Full pipeline

- Partial test + deploy

- Approval-first deployment
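One way to wire these paths, sketched under two assumptions: each path is its own pipeline (the names in `PIPELINES` are hypothetical), and they are V2 pipelines that declare `RISK_LEVEL` and `TRIGGER_COMMIT` as pipeline-level variables. The function calls CodePipeline's `StartPipelineExecution` through an injected client, in practice `boto3.client("codepipeline")`.

```python
# Hypothetical pipeline names, one per execution path.
PIPELINES = {
    "full-pipeline": "app-full-pipeline",
    "partial-test-deploy": "app-partial-test-deploy",
    "approval-first": "app-approval-first-deploy",
}


def start_path(codepipeline_client, route: str, commit_id: str, risk: str) -> str:
    """Start the pipeline for the chosen route and return its execution ID."""
    if route not in PIPELINES:
        raise ValueError(f"unknown route: {route!r}")
    response = codepipeline_client.start_pipeline_execution(
        name=PIPELINES[route],
        # Pipeline-level variables need a V2 pipeline that declares them.
        variables=[
            {"name": "RISK_LEVEL", "value": risk},
            {"name": "TRIGGER_COMMIT", "value": commit_id},
        ],
    )
    return response["pipelineExecutionId"]
```

Keeping one pipeline per path means each path's stages are defined explicitly in its own pipeline rather than hidden behind runtime logic, and an unknown route fails loudly instead of silently starting the wrong pipeline.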

Step 4:

Add AI-based log and metric analysis after each deploy, wired as CloudWatch → EventBridge → a Lambda function that triggers the rollback.
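The last hop could look like this minimal sketch. It assumes a V2 pipeline, since CodePipeline's `RollbackStage` API rolls a stage back to a previous successful execution. It also assumes the function knows the pipeline and stage names (`app-full-pipeline` and `Deploy` are hypothetical) and that the most recent successful execution is the deploy that caused the alarm, so it targets the one before it. Only the first page of `ListPipelineExecutions` results is checked.

```python
def handle_alarm(event: dict, codepipeline_client, pipeline: str, stage: str):
    """Roll `stage` back to the previous good execution when an alarm fires.

    Returns the target execution ID, or None if no rollback was started.
    """
    state = event.get("detail", {}).get("state", {}).get("value")
    if state != "ALARM":
        return None  # OK / INSUFFICIENT_DATA transitions need no action

    page = codepipeline_client.list_pipeline_executions(pipelineName=pipeline, maxResults=25)
    succeeded = sorted(
        (s for s in page.get("pipelineExecutionSummaries", []) if s.get("status") == "Succeeded"),
        key=lambda s: s["startTime"],
        reverse=True,  # newest first
    )
    if len(succeeded) < 2:
        return None  # nothing earlier to roll back to; escalate to a human instead

    # Assumption: succeeded[0] is the deploy that triggered the alarm.
    target = succeeded[1]["pipelineExecutionId"]
    codepipeline_client.rollback_stage(
        pipelineName=pipeline,
        stageName=stage,
        targetPipelineExecutionId=target,
    )
    return target
```

In Lambda you would wrap it as `def handler(event, context): return handle_alarm(event, boto3.client("codepipeline"), "app-full-pipeline", "Deploy")`. Returning `None` when there is no earlier good execution keeps the function from guessing; that case is better handed to the manual override path.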

Pro Tips

- Use Amazon Bedrock for managed AI inference; no model hosting is required.

- Store AI decisions in DynamoDB for auditing and fine-tuning logic.

- Start with rule-based thresholds, then let AI learn from historical outcomes.

- Keep a manual override path, especially for production deploys.
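Three of these tips fit in one small sketch: a rule-based scorer to start with, a manual override that always wins, and each decision written to DynamoDB for auditing. The path patterns and thresholds are illustrative guesses, not recommendations. The table name and its `commitId`/`decidedAt` key schema are hypothetical; the client is injected (in practice `boto3.client("dynamodb")`), and `put_item` takes DynamoDB's typed attribute format.

```python
from datetime import datetime, timezone
from fnmatch import fnmatch

# Illustrative rules; tune them against your own deployment history.
LOW_RISK_PATTERNS = ("*.md", "docs/*", "*.txt")
HIGH_RISK_PATTERNS = ("infra/*", "*.tf", "migrations/*", "*buildspec*.yml")
MAX_MEDIUM_LINES = 500          # larger diffs escalate to high risk
MAX_RECENT_FAILURE_RATE = 0.2   # share of recent runs that failed


def _matches_any(path, patterns):
    return any(fnmatch(path, pattern) for pattern in patterns)


def rule_based_risk(changed_files, lines_changed, recent_failure_rate, override=None):
    """Score a change with simple rules; a manual override always wins."""
    if override in ("low", "medium", "high"):
        return override
    if any(_matches_any(f, HIGH_RISK_PATTERNS) for f in changed_files):
        return "high"
    if changed_files and all(_matches_any(f, LOW_RISK_PATTERNS) for f in changed_files):
        return "low"
    if lines_changed > MAX_MEDIUM_LINES or recent_failure_rate > MAX_RECENT_FAILURE_RATE:
        return "high"
    return "medium"


def record_decision(dynamodb_client, table, commit_id, risk, source, decided_at=None):
    """Write one routing decision to DynamoDB and return the stored item."""
    decided_at = decided_at or datetime.now(timezone.utc)
    item = {
        "commitId": {"S": commit_id},
        "decidedAt": {"S": decided_at.isoformat()},
        "riskLevel": {"S": risk},
        "decisionSource": {"S": source},  # e.g. "rules", "model", "override"
    }
    dynamodb_client.put_item(TableName=table, Item=item)
    return item
```

Logging the decision source alongside the risk level lets you later compare rule-based, model, and override decisions against real deployment outcomes before handing more of the routing to the model.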

Conclusion

By combining AI with EventBridge and CodePipeline, you move beyond “automated” DevOps into adaptive DevOps, where your pipeline changes its behavior in real time based on the change, the risk, and the impact.

That means:

- Faster deployments that stay safe.

- Fewer late-night rollbacks.

- More time for engineers to focus on building instead of babysitting builds.