Introduction

In 2025, GenAI is no longer limited to just words on a screen.

From images to text, audio to documents, multi-modal models are now shaping how we interact with AI-powered applications.

So, where does AWS stand in this multi-modal future?

Let’s explore what’s possible right now on AWS when it comes to multi-modal GenAI and how to build with it.

What Are Multi-Modal Models?

Multi-modal models can accept or generate multiple types of data, such as:

- Text

- Images

- Audio

- Video

- PDFs or structured documents

The goal?

Enable richer, more natural input-output flows in AI apps.

Multi-Modal Options on AWS (2025)

| Capability | AWS Service / Integration |
|---|---|
| Text + PDF Understanding | Amazon Textract + Bedrock (RAG) |
| Text-to-Image Generation | Amazon Titan Image Generator (Preview), Stability.AI via SageMaker |
| Text + Image Q&A | Anthropic Claude 3 (via Bedrock, limited preview) |
| Visual Search / Image Embeddings | SageMaker + CLIP or ViT models |
| Speech-to-Text | Amazon Transcribe |
| Text-to-Speech | Amazon Polly |
| Multi-modal Fine-tuning | SageMaker Training Jobs with HuggingFace libraries |

AWS doesn’t have one multi-modal API, but you can combine services to build your own pipeline.
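To make the Text + Image Q&A row concrete, here is a minimal sketch of sending an image plus a question to a Claude 3 model through the Bedrock Converse API. It assumes your account has access to an image-capable model; the helper names `build_image_question` and `ask_about_image` are made up for this post, and the client is passed in so the code stays easy to test.

```python
from typing import Any

# Image formats accepted by the Converse API image content block.
SUPPORTED_FORMATS = {"png", "jpeg", "gif", "webp"}


def build_image_question(image_bytes: bytes, image_format: str, question: str) -> list:
    """Build a Converse-style `messages` list with one image and one question."""
    if image_format not in SUPPORTED_FORMATS:
        raise ValueError(f"unsupported image format: {image_format}")
    return [
        {
            "role": "user",
            "content": [
                {"image": {"format": image_format, "source": {"bytes": image_bytes}}},
                {"text": question},
            ],
        }
    ]


def ask_about_image(client: Any, model_id: str, image_bytes: bytes,
                    image_format: str, question: str) -> str:
    """Call `client.converse` and join the text parts of the reply."""
    response = client.converse(
        modelId=model_id,
        messages=build_image_question(image_bytes, image_format, question),
    )
    parts = response["output"]["message"]["content"]
    return "".join(part.get("text", "") for part in parts)
```

In a real application, `client` would typically be `boto3.client("bedrock-runtime")` and `model_id` something like `anthropic.claude-3-sonnet-20240229-v1:0`; check which model IDs are enabled in your Region before relying on either.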

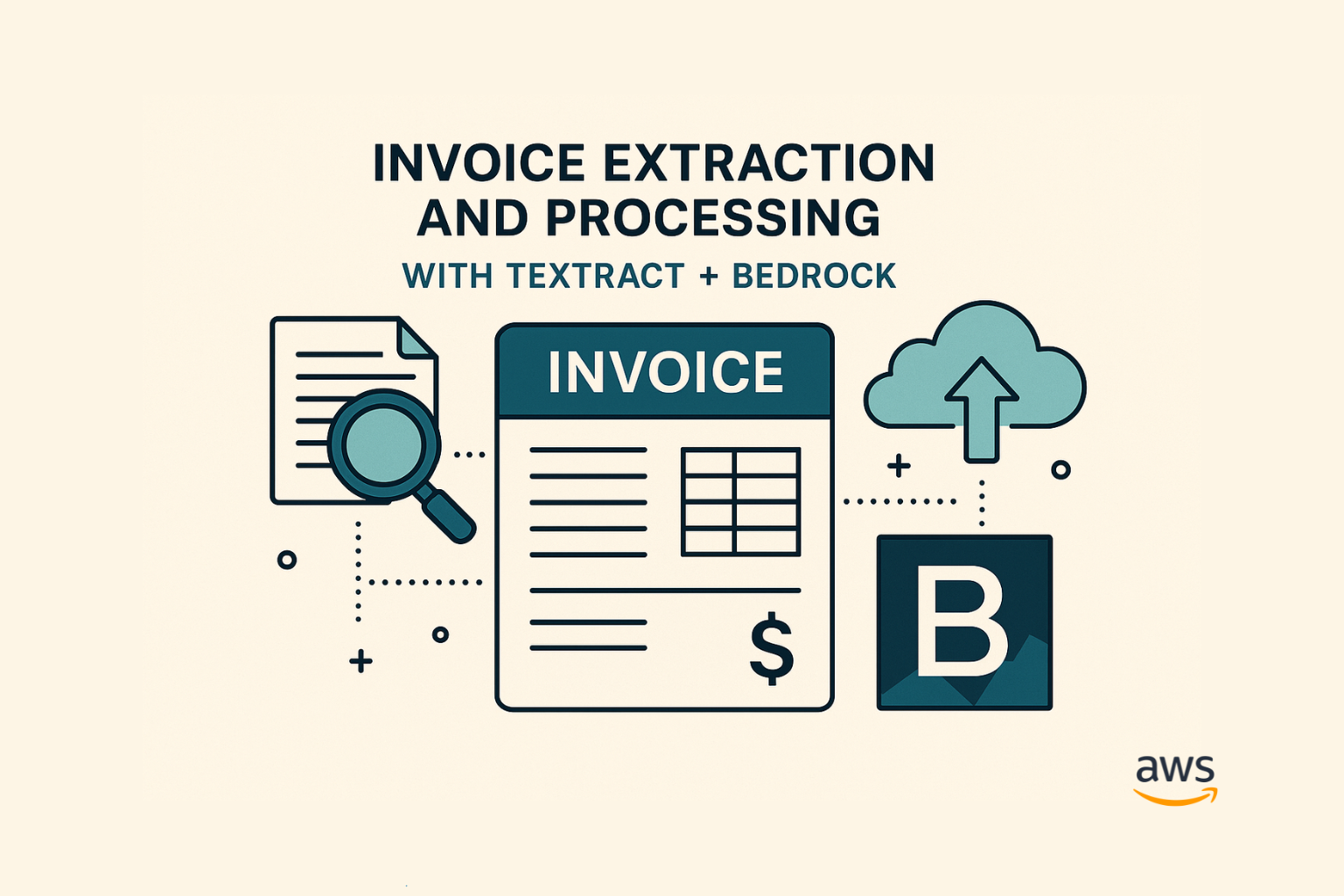

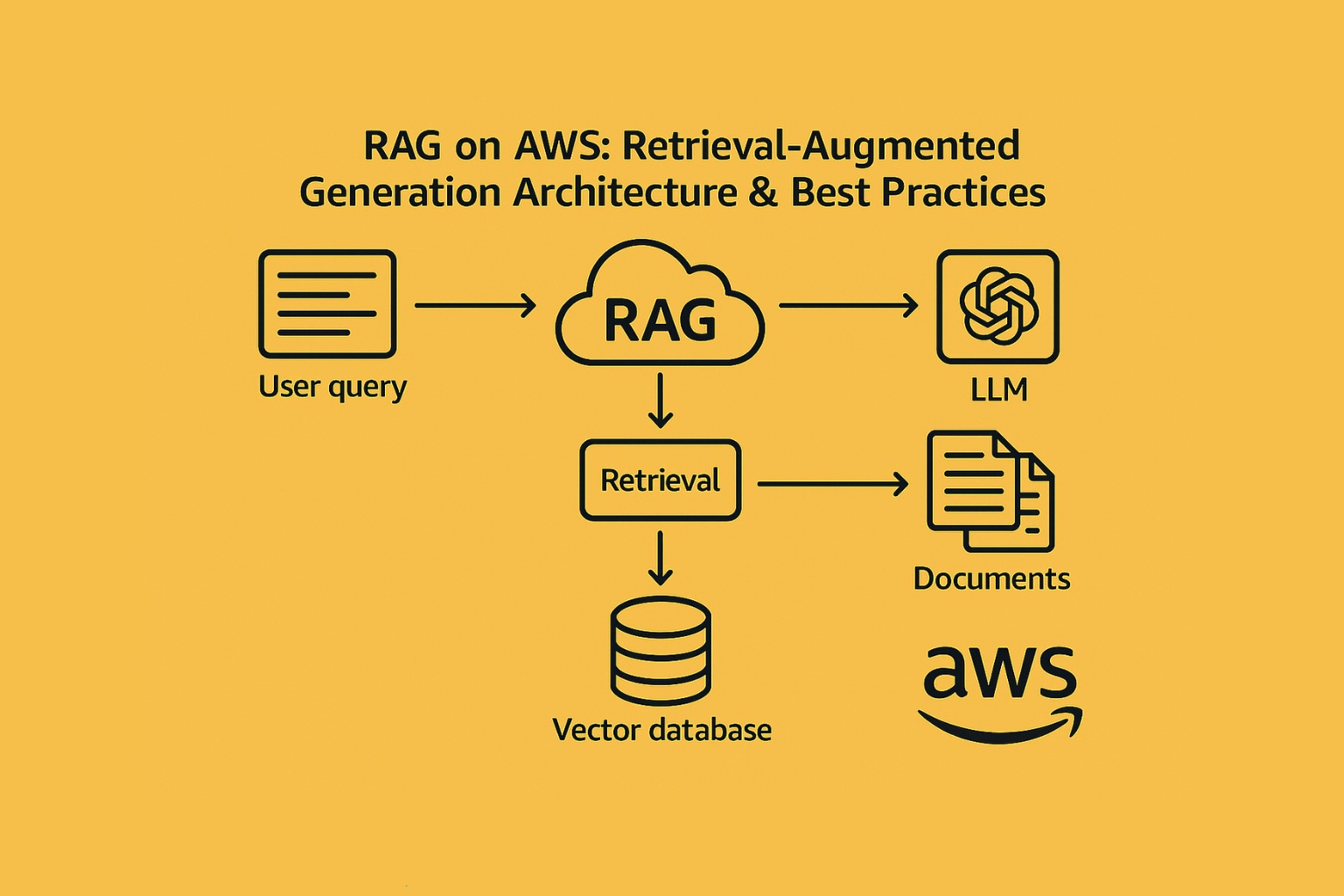

Example: Building a Document Q&A Assistant with Multi-Modal Input

Goal: Let users upload a scanned PDF and ask questions about it.

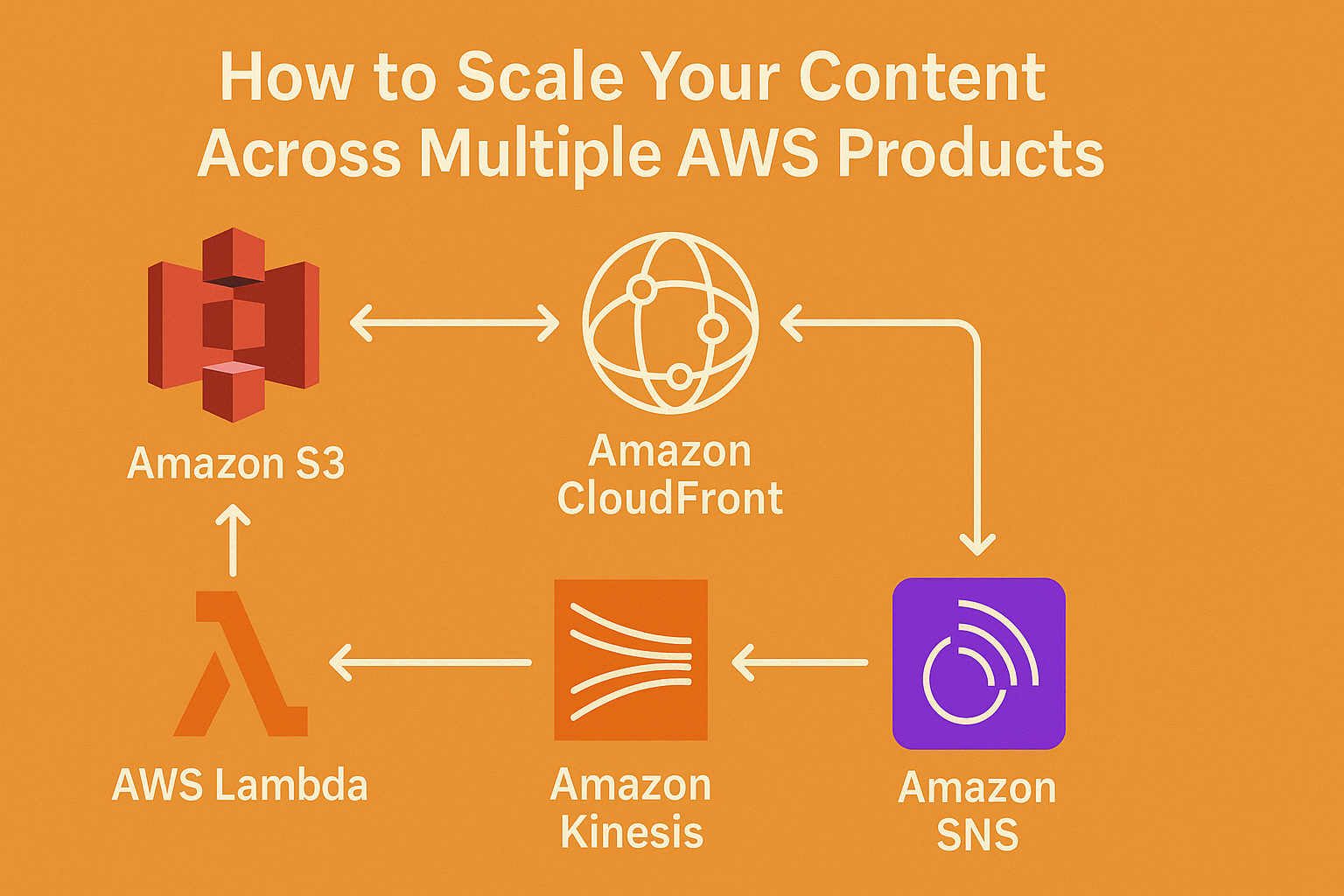

Architecture:

- User uploads scanned PDF → stored in S3

- Text extracted using Amazon Textract

- Text chunked + embedded using Titan Embeddings

- Chunks stored in OpenSearch

- User asks a question via UI → the question is embedded and matching chunks are retrieved from OpenSearch → Bedrock (Claude or Titan) answers using that context

- Response delivered in UI (or via Amazon Polly as voice)

- Flow: Image → Text → Vector → Response

- Entirely on AWS
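The steps above can be sketched in a few small functions. This is a rough outline rather than a production pipeline, and it makes several assumptions: the Textract response comes from a call such as `detect_document_text` (multi-page PDFs need the asynchronous Textract APIs instead), embeddings use the Titan text embeddings request shape (`inputText` in, `embedding` out), and an in-memory cosine ranking stands in for OpenSearch so the example stays self-contained. All function names are hypothetical.

```python
import json
import math
from typing import Any


def extract_lines(textract_response: dict) -> str:
    """Join the LINE blocks of a Textract response into plain text."""
    return "\n".join(
        block["Text"]
        for block in textract_response.get("Blocks", [])
        if block.get("BlockType") == "LINE"
    )


def chunk_text(text: str, max_words: int = 200, overlap: int = 20) -> list:
    """Split text into overlapping word windows."""
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be >= 0 and smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reached the end of the text
    return chunks


def embed(bedrock: Any, text: str, model_id: str = "amazon.titan-embed-text-v1") -> list:
    """Embed one string with a Titan embeddings model via `invoke_model`."""
    response = bedrock.invoke_model(
        modelId=model_id,
        body=json.dumps({"inputText": text}),
        contentType="application/json",
        accept="application/json",
    )
    return json.loads(response["body"].read())["embedding"]


def cosine(a: list, b: list) -> float:
    """Cosine similarity, returning 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_chunks(question_vec: list, indexed: list, k: int = 3) -> list:
    """Rank (chunk, vector) pairs by similarity; a stand-in for an OpenSearch k-NN query."""
    ranked = sorted(indexed, key=lambda item: cosine(question_vec, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


def answer(bedrock: Any, model_id: str, question: str, context_chunks: list) -> str:
    """Ask a Bedrock chat model to answer from the retrieved context only."""
    prompt = (
        "Answer the question using only the context below.\n\n"
        "Context:\n" + "\n---\n".join(context_chunks) + f"\n\nQuestion: {question}"
    )
    response = bedrock.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
    )
    return response["output"]["message"]["content"][0]["text"]
```

Here `bedrock` would usually be `boto3.client("bedrock-runtime")`. Swapping `top_chunks` for a real OpenSearch vector query is the main change needed once the document set no longer fits in memory.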

Other Multi-Modal Use Case Ideas

| Use Case | AWS Stack |

|---|---|

| AI Form-Filling Agent | Textract + Lambda + Bedrock |

| AI Product Designer | Titan Image Generator + SageMaker Studio |

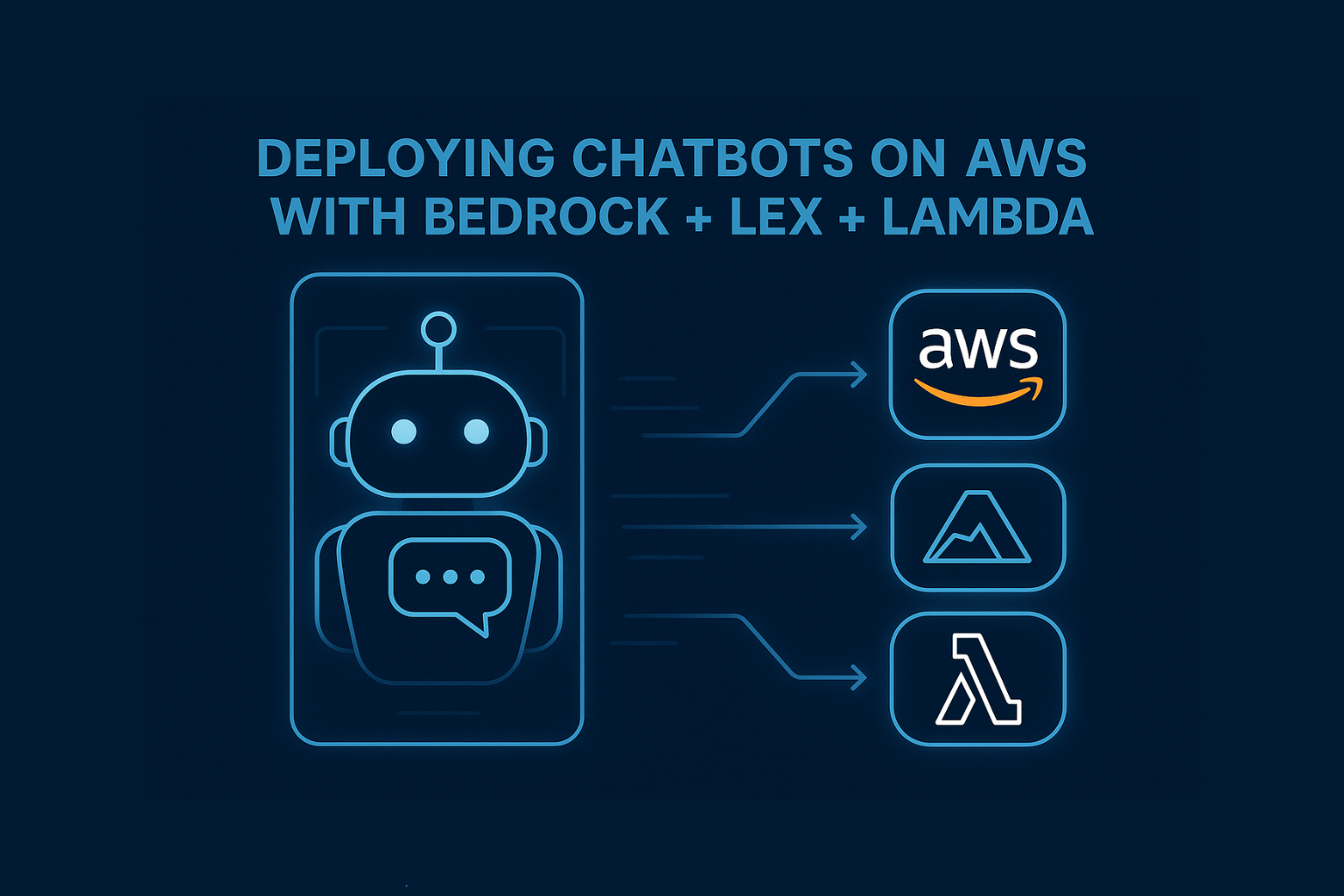

| Voice-Controlled FAQ Assistant | Transcribe + Lex + Bedrock |

| Visual Search Engine | SageMaker + ViT embeddings + OpenSearch |

| Contract Review Bot (PDF → Summary) | S3 + Textract + Bedrock + Guardrails |
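For voice-oriented ideas like the FAQ assistant, the last hop is turning a text answer into audio. The sketch below assumes Amazon Polly's `synthesize_speech` call with the `Joanna` voice and MP3 output. The sentence splitter and its `max_chars` default are a hypothetical safety margin to keep each request short, not an official limit.

```python
import re
from typing import Any


def split_for_speech(text: str, max_chars: int = 1500) -> list:
    """Group sentences into pieces of at most max_chars characters.

    A single sentence longer than max_chars is kept whole as its own piece.
    """
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    pieces, current = [], ""
    for sentence in sentences:
        if not sentence:
            continue
        candidate = f"{current} {sentence}".strip()
        if len(candidate) <= max_chars or not current:
            current = candidate
        else:
            pieces.append(current)
            current = sentence
    if current:
        pieces.append(current)
    return pieces


def speak(polly: Any, text: str, voice_id: str = "Joanna") -> list:
    """Return one MP3 byte string per piece of text."""
    audio = []
    for piece in split_for_speech(text):
        response = polly.synthesize_speech(
            Text=piece, OutputFormat="mp3", VoiceId=voice_id
        )
        audio.append(response["AudioStream"].read())
    return audio
```

`polly` would normally be `boto3.client("polly")`. Returning a list leaves it up to the caller whether to stream the pieces one by one or stitch them together.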

Current Limitations

- Claude 3’s image input support is limited in Bedrock preview

- Titan Image Generator is still in preview with basic capabilities

- AWS does not offer one-click video or audio processing LLMs yet

- Some multi-modal models (like Gemini or GPT-4V) are not natively available on AWS

But with SageMaker + HuggingFace, you can self-host open multi-modal models.

Tools to Explore

- Amazon Bedrock Agents – for orchestrating input/output chaining

- Amazon Textract (AnalyzeDocument API) – for tables, forms, and OCR

- HuggingFace CLIP models on SageMaker – for image-text alignment

- Amazon Rekognition – for object and scene detection in images

- AWS Step Functions – to sequence multi-modal flows
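As a sketch of how Step Functions could sequence one of these flows, the snippet below builds an Amazon States Language definition as a Python dictionary. It assumes each step is wrapped in its own Lambda function; the state names and function names (`extract-text-fn` and so on) are placeholders invented for this example.

```python
import json
from typing import Optional

# (state name, hypothetical Lambda function name) in execution order
STEPS = [
    ("ExtractText", "extract-text-fn"),
    ("EmbedChunks", "embed-chunks-fn"),
    ("AnswerQuestion", "answer-question-fn"),
    ("SynthesizeSpeech", "speak-answer-fn"),
]


def lambda_task(function_name: str, next_state: Optional[str] = None) -> dict:
    """Build a Task state that invokes a Lambda and passes its payload onward."""
    state = {
        "Type": "Task",
        "Resource": "arn:aws:states:::lambda:invoke",
        "Parameters": {"FunctionName": function_name, "Payload.$": "$"},
        "OutputPath": "$.Payload",
    }
    if next_state:
        state["Next"] = next_state
    else:
        state["End"] = True
    return state


def build_definition(steps: list = STEPS) -> dict:
    """Chain the steps into a linear state machine definition."""
    states = {}
    for index, (name, function_name) in enumerate(steps):
        following = steps[index + 1][0] if index + 1 < len(steps) else None
        states[name] = lambda_task(function_name, following)
    return {
        "Comment": "Multi-modal document Q&A flow (illustrative)",
        "StartAt": steps[0][0],
        "States": states,
    }


if __name__ == "__main__":
    print(json.dumps(build_definition(), indent=2))
```

The JSON string from `json.dumps(build_definition())` is the kind of value you would pass as the `definition` when creating a state machine. Retries, error handling, and branching are left out to keep the shape of the flow visible.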

Conclusion

AWS isn’t offering “GPT-4 Vision” yet, but you can build your own multi-modal systems by combining best-of-breed services.

The key isn’t one tool; it’s orchestration.

Use AWS services like Bedrock, Textract, Polly, OpenSearch, and SageMaker together to deliver intelligent, multi-sensory applications today.