Introduction

Building GenAI apps sounds expensive.

And sometimes it is, especially if you jump straight into fine-tuning LLMs or spinning up GPU clusters without a plan.

But here’s the good news: AWS offers several ways to run GenAI workloads cost-effectively if you know where to look.

In this post, we’ll share practical strategies to build, test, and deploy GenAI applications without burning your cloud budget.

The 3 Major Cost Drivers of GenAI on AWS

Before we talk savings, let’s understand where the money goes:

1. Model Inference Costs

- API calls to foundation models (e.g., Bedrock on-demand pricing per 1K tokens, billed separately for input and output tokens)

- Hosted endpoints (e.g., SageMaker real-time inference, billed per instance-hour whether or not traffic arrives)

2. Training & Fine-Tuning Costs

- GPU compute (ml.p3, ml.g5, etc.)

- Data preparation, versioning, monitoring

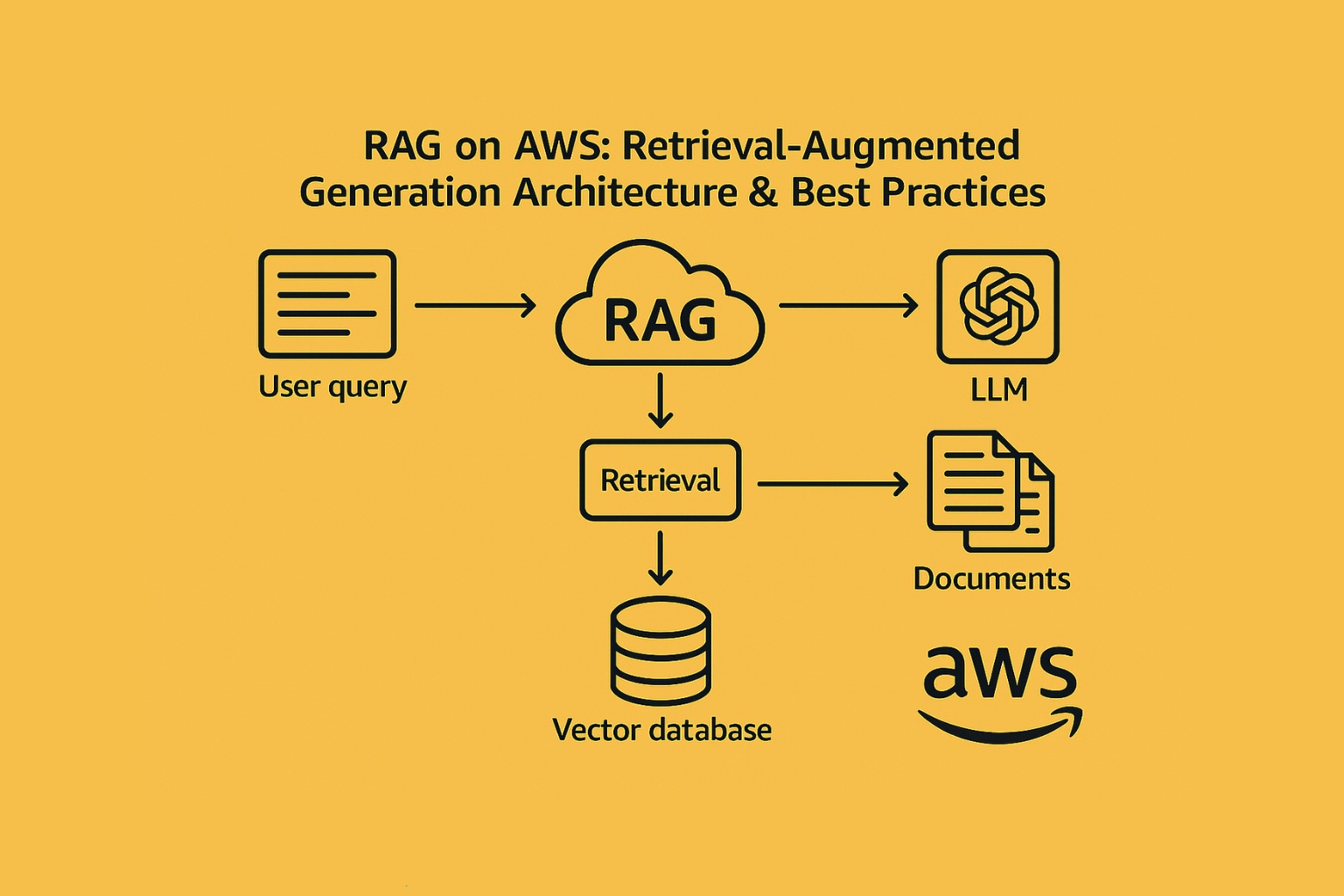

3. Data Storage & Retrieval

- Storing embeddings, vector indexes, logs (S3, OpenSearch, RDS)
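Embedding dimension drives vector storage directly. The sketch below is a rough estimate that assumes float32 vectors (4 bytes per dimension) and ignores index overhead such as HNSW graph structures in OpenSearch. The 10-million-chunk corpus is a hypothetical example; Titan Text Embeddings V2, for instance, can output 256, 512, or 1,024 dimensions.

```python
# Rough raw-storage estimate for float32 embedding vectors.
# Assumption: 4 bytes per dimension; index overhead (e.g., HNSW links) is NOT included.

BYTES_PER_FLOAT32 = 4


def raw_vector_storage_gib(num_vectors: int, dimensions: int) -> float:
    """Return the raw size of num_vectors float32 vectors, in GiB."""
    if num_vectors < 0 or dimensions <= 0:
        raise ValueError("num_vectors must be >= 0 and dimensions > 0")
    total_bytes = num_vectors * dimensions * BYTES_PER_FLOAT32
    return total_bytes / (1024 ** 3)


if __name__ == "__main__":
    # Hypothetical corpus of 10 million chunks at three candidate dimensions.
    for dims in (256, 512, 1024):
        size = raw_vector_storage_gib(10_000_000, dims)
        print(f"{dims:>5} dims: {size:.1f} GiB")
```

Storage scales linearly with dimensions, so halving the dimension count roughly halves the raw vector footprint (at some cost in retrieval quality, which is worth testing on your own data).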

7 Cost-Saving Strategies for GenAI on AWS

1. Use Bedrock Instead of Hosting Your Own Models

- With on-demand pricing, Bedrock is serverless: you pay only for the tokens you use

- Skip GPU provisioning, scaling, and monitoring

- Perfect for bursty inference patterns
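Here is a minimal sketch of a pay-per-use call using the Bedrock Runtime Converse API. It assumes boto3 is installed, credentials are configured, and the model you pass in is enabled in your account and region. The client is passed in as a parameter so the function can be tested without AWS; the model ID in the usage note is a placeholder.

```python
def ask_bedrock(client, model_id: str, prompt: str, max_tokens: int = 512) -> dict:
    """Send one user message via the Converse API; return reply text and token usage.

    `client` is a boto3 "bedrock-runtime" client, injected so it can be faked in tests.
    """
    response = client.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": max_tokens, "temperature": 0.2},
    )
    return {
        "text": response["output"]["message"]["content"][0]["text"],
        # On-demand pricing is based on these token counts.
        "input_tokens": response["usage"]["inputTokens"],
        "output_tokens": response["usage"]["outputTokens"],
    }
```

Usage: `ask_bedrock(boto3.client("bedrock-runtime"), "YOUR_MODEL_ID", "Summarize this ticket.")`. Logging the returned token counts per request gives you a direct view of what each feature costs.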

Example: For a chatbot handling ~200 queries/day, on-demand Bedrock calls typically cost far less than keeping a GPU instance running 24/7.
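To check this for your own workload, a back-of-the-envelope comparison is enough. Every price and token count below is a hypothetical placeholder, not a quoted AWS rate; substitute current Bedrock and SageMaker pricing for your model and region. The month is approximated as 30 days.

```python
# On-demand token billing vs. an always-on instance.
# All prices used below are HYPOTHETICAL placeholders.

DAYS_PER_MONTH = 30
HOURS_PER_DAY = 24


def cost_per_query(in_tokens: int, out_tokens: int,
                   price_in_per_1k: float, price_out_per_1k: float) -> float:
    """On-demand cost of one request, billed separately for input and output tokens."""
    return (in_tokens / 1000) * price_in_per_1k + (out_tokens / 1000) * price_out_per_1k


def monthly_token_cost(queries_per_day: float, per_query: float) -> float:
    """Token spend scales with traffic."""
    return queries_per_day * DAYS_PER_MONTH * per_query


def monthly_instance_cost(price_per_hour: float, instance_count: int = 1) -> float:
    """An always-on endpoint bills every hour, with or without traffic."""
    return price_per_hour * HOURS_PER_DAY * DAYS_PER_MONTH * instance_count


def break_even_queries_per_day(per_query: float, price_per_hour: float) -> float:
    """Daily volume at which on-demand spend equals one always-on instance."""
    return monthly_instance_cost(price_per_hour) / (DAYS_PER_MONTH * per_query)


if __name__ == "__main__":
    # Hypothetical: 500 input + 300 output tokens per query.
    q = cost_per_query(500, 300, price_in_per_1k=0.003, price_out_per_1k=0.015)
    print(f"On-demand, 200 queries/day: ${monthly_token_cost(200, q):,.2f}/month")
    print(f"Always-on instance at $1.50/h: ${monthly_instance_cost(1.50):,.2f}/month")
    print(f"Break-even: ~{break_even_queries_per_day(q, 1.50):,.0f} queries/day")
```

The exact numbers matter less than the shape: token spend grows with traffic, while an always-on instance costs the same at 2 queries a day or 2,000.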

2. Use Batch Inference Where You Can

- Replace SageMaker real-time endpoints with Batch Transform jobs for offline workloads

- Process thousands of inputs in one job; instances run only for the job's duration, so there is no idle endpoint to pay for

- Ideal for:

  - Document summarization

  - Dataset tagging

  - Nightly analytics
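A Batch Transform job reads inputs from S3 and writes results back to S3. The sketch below prepares a JSON Lines input file and builds the keyword arguments for boto3's `sagemaker.create_transform_job()`. It assumes an existing SageMaker model whose container accepts one JSON object per request; the job name, model name, S3 URIs, and the `inputs` field are hypothetical placeholders.

```python
import json


def write_jsonl(records, path):
    """Write one JSON object per line, the layout SplitType='Line' expects."""
    with open(path, "w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record) + "\n")


def batch_transform_request(job_name, model_name, input_s3_uri, output_s3_uri,
                            instance_type, instance_count=1):
    """Build kwargs for boto3's sagemaker.create_transform_job()."""
    return {
        "TransformJobName": job_name,
        "ModelName": model_name,  # an existing SageMaker model (placeholder name)
        # One record per request; MultiRecord packs several records per request
        # and only works if your container accepts batched payloads.
        "BatchStrategy": "SingleRecord",
        "TransformInput": {
            "DataSource": {"S3DataSource": {"S3DataType": "S3Prefix", "S3Uri": input_s3_uri}},
            "ContentType": "application/jsonlines",
            "SplitType": "Line",  # split the input file on newlines
        },
        # Reassemble results line by line, in input order.
        "TransformOutput": {"S3OutputPath": output_s3_uri, "AssembleWith": "Line"},
        "TransformResources": {"InstanceType": instance_type, "InstanceCount": instance_count},
    }
```

After uploading the file, you would pass the dict as `boto3.client("sagemaker").create_transform_job(**request)`. The instances shut down when the job finishes, which is where the savings over a 24/7 endpoint come from.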

3. Start with SageMaker JumpStart

- Test pre-trained models (Flan-T5, Falcon, Stable Diffusion)

- No manual GPU setup: deploy with a few clicks (the endpoint still bills while it runs)

- Feasibility first, fine-tuning later

JumpStart = try before you train.

4. Use Spot Instances for Fine-Tuning

- Up to 90% cheaper than on-demand

- Best for non-time-sensitive jobs like fine-tuning or batch embedding generation

- Use SageMaker Managed Spot Training with checkpointing so interrupted jobs resume instead of restarting from scratch
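Managed Spot Training is switched on with a few fields in the training job request. This sketch returns only the spot-related keyword arguments for boto3's `sagemaker.create_training_job()`; the rest of the request (image, role, data channels) is omitted. It assumes your training script saves checkpoints to `/opt/ml/checkpoints` and reloads them on start; the S3 URI and time limits are placeholders.

```python
def spot_training_settings(max_runtime_s: int, max_wait_s: int, checkpoint_s3_uri: str) -> dict:
    """Return the spot-related kwargs for boto3's sagemaker.create_training_job()."""
    # SageMaker requires the wait limit to be at least the runtime limit.
    if max_wait_s < max_runtime_s:
        raise ValueError("MaxWaitTimeInSeconds must be >= MaxRuntimeInSeconds")
    return {
        "EnableManagedSpotTraining": True,
        "StoppingCondition": {
            "MaxRuntimeInSeconds": max_runtime_s,  # cap on actual training time
            "MaxWaitTimeInSeconds": max_wait_s,    # training time plus time waiting for Spot capacity
        },
        # Files written to LocalPath are synced to S3, so an interrupted job can resume.
        "CheckpointConfig": {"S3Uri": checkpoint_s3_uri, "LocalPath": "/opt/ml/checkpoints"},
    }
```

Setting `max_wait_s` well above `max_runtime_s` gives the job room to wait out interruptions instead of failing.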

5. Use Titan Embeddings Instead of OpenAI for Vector Workflows

- AWS-native and cost-effective

- Typically cheaper per token than OpenAI’s text-embedding-ada-002

- Keeps your data within AWS

- Natively integrates with OpenSearch and S3
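Generating an embedding with Titan Text Embeddings V2 is a single `invoke_model` call. This sketch assumes the `amazon.titan-embed-text-v2:0` model is enabled in your region and that you pass in a boto3 `bedrock-runtime` client; the 512-dimension default is an illustrative choice, not a recommendation.

```python
import json

TITAN_V2_MODEL_ID = "amazon.titan-embed-text-v2:0"


def embed_text(client, text: str, dimensions: int = 512, normalize: bool = True) -> list:
    """Return one embedding vector from Titan Text Embeddings V2.

    `client` is a boto3 "bedrock-runtime" client, injected so it can be faked in tests.
    """
    body = json.dumps({"inputText": text, "dimensions": dimensions, "normalize": normalize})
    response = client.invoke_model(
        modelId=TITAN_V2_MODEL_ID,
        body=body,
        contentType="application/json",
        accept="application/json",
    )
    # The response body is a stream containing JSON with an "embedding" list.
    payload = json.loads(response["body"].read())
    return payload["embedding"]
```

Normalized vectors make cosine similarity equivalent to a dot product, which keeps the vector index configuration simple.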

6. Right-Size Your Infrastructure from Day One

- Don’t run a POC on a g5.12xlarge; start small (e.g., g5.xlarge)

- Enable auto-scaling on endpoints

- Monitor usage patterns with CloudWatch dashboards
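Endpoint auto-scaling is configured through Application Auto Scaling: register the endpoint variant as a scalable target, then attach a target-tracking policy. This sketch assumes a boto3 `application-autoscaling` client is passed in and that the variant uses the SageMaker SDK's default name `AllTraffic`; the endpoint name, capacity limits, target value, and cooldowns are placeholders to tune against your CloudWatch data.

```python
def enable_endpoint_autoscaling(client, endpoint_name: str, variant_name: str = "AllTraffic",
                                min_capacity: int = 1, max_capacity: int = 2,
                                invocations_per_instance: float = 50.0) -> str:
    """Attach target-tracking auto-scaling to a SageMaker endpoint variant."""
    resource_id = f"endpoint/{endpoint_name}/variant/{variant_name}"
    dimension = "sagemaker:variant:DesiredInstanceCount"
    # 1. Tell Application Auto Scaling which instance counts are allowed.
    client.register_scalable_target(
        ServiceNamespace="sagemaker",
        ResourceId=resource_id,
        ScalableDimension=dimension,
        MinCapacity=min_capacity,
        MaxCapacity=max_capacity,
    )
    # 2. Add or remove instances to hold invocations per instance near the target.
    client.put_scaling_policy(
        PolicyName=f"{endpoint_name}-invocations-target",
        ServiceNamespace="sagemaker",
        ResourceId=resource_id,
        ScalableDimension=dimension,
        PolicyType="TargetTrackingScaling",
        TargetTrackingScalingPolicyConfiguration={
            "TargetValue": invocations_per_instance,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "SageMakerVariantInvocationsPerInstance"
            },
            "ScaleInCooldown": 300,  # scale in slowly to avoid flapping
            "ScaleOutCooldown": 60,  # scale out quickly under load
        },
    )
    return resource_id
```

A low `max_capacity` doubles as a spending cap during a POC.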

7. Turn Off Idle Endpoints

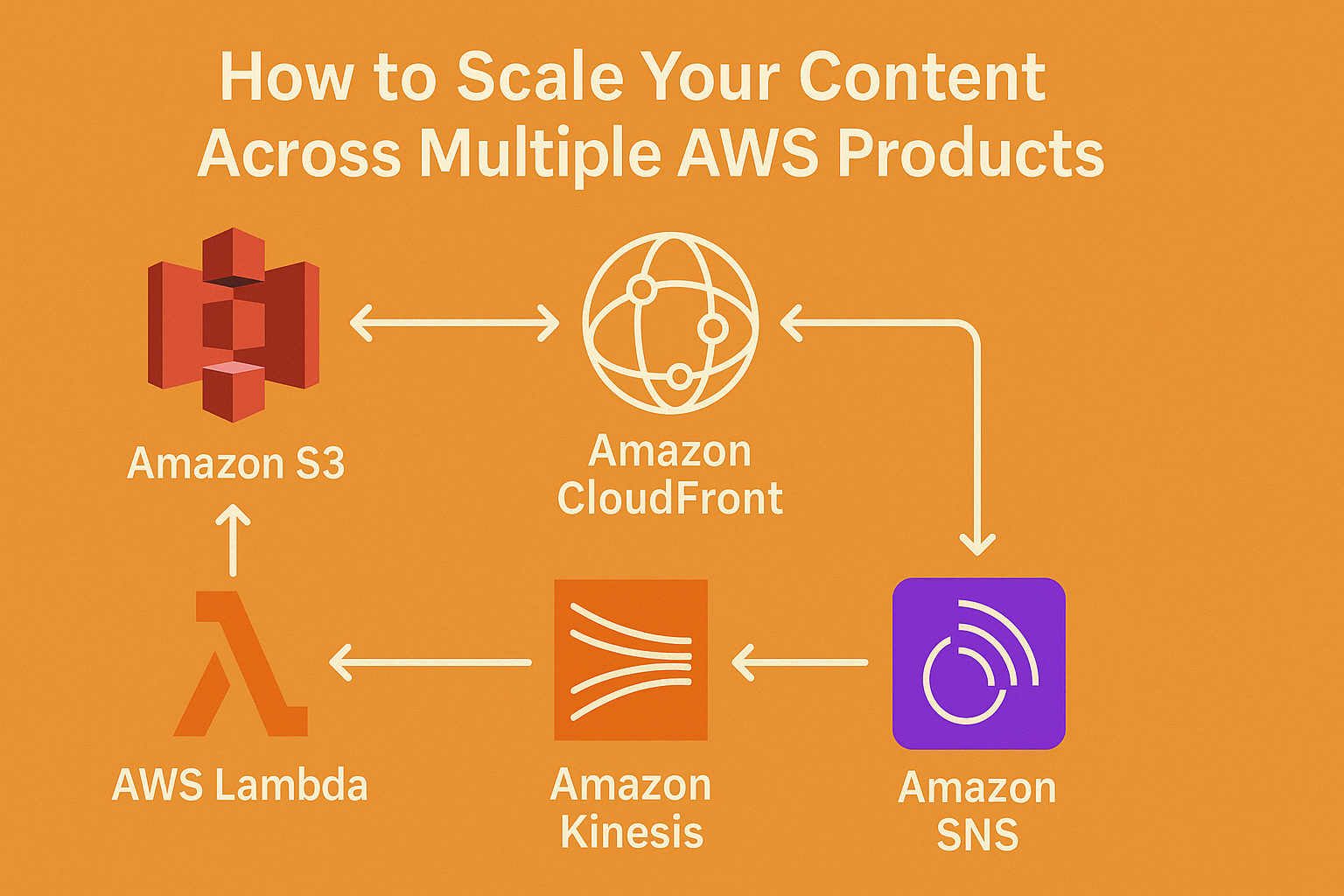

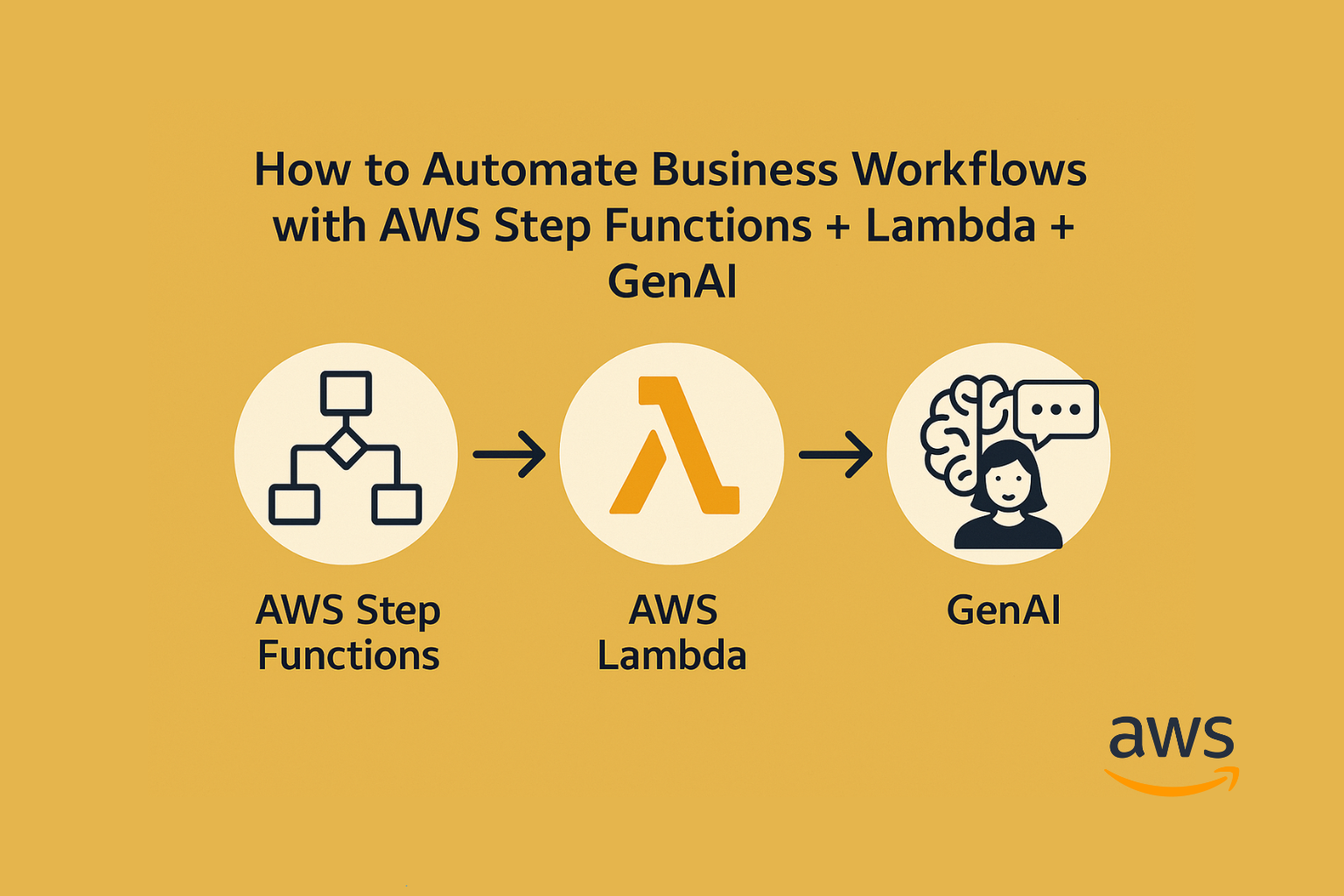

- Use Lambda + Bedrock API for on-demand queries, so nothing runs (or bills) between requests

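- For internal tools that need a SageMaker endpoint, schedule scripts to create it at the start of the workday and delete it afterward (endpoints can’t be paused, only deleted and recreated)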

- No point in paying for silence
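A scheduled start/stop script can be a small Lambda function triggered by two EventBridge schedules, one passing `{"action": "start"}` and one passing `{"action": "stop"}`. This sketch assumes the endpoint config is kept between runs so the endpoint can be recreated from it; the environment variable names and default values are hypothetical.

```python
import os

# Hypothetical names, read from the Lambda function's environment variables.
ENDPOINT_NAME = os.environ.get("ENDPOINT_NAME", "internal-tool-endpoint")
ENDPOINT_CONFIG_NAME = os.environ.get("ENDPOINT_CONFIG_NAME", "internal-tool-config")


def handler(event, context, client=None):
    """Create or delete a SageMaker endpoint based on event["action"]."""
    if client is None:
        import boto3  # imported lazily so the function can be tested with a fake client
        client = boto3.client("sagemaker")
    action = event.get("action")
    if action == "stop":
        # Deleting the endpoint stops instance billing; the endpoint config remains.
        client.delete_endpoint(EndpointName=ENDPOINT_NAME)
    elif action == "start":
        # Recreate the endpoint from the saved config.
        client.create_endpoint(EndpointName=ENDPOINT_NAME, EndpointConfigName=ENDPOINT_CONFIG_NAME)
    else:
        raise ValueError(f"unknown action: {action!r}")
    return {"action": action, "endpoint": ENDPOINT_NAME}
```

Endpoint creation takes a few minutes, so schedule the start a little before people need the tool.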

Bonus Cost-Saving Tips

- Use Cost Explorer to spot spending spikes and CloudTrail to trace which API calls caused them

- Use Cost Anomaly Detection to alert you to billing surprises

- Cache responses to common queries so repeat questions don’t trigger new (billed) model calls
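A response cache can be very simple. This sketch is an in-memory cache with a time-to-live, keyed by a hash of the model ID and a normalized prompt. `call_model` stands for any function that calls your model, for example a Bedrock wrapper. Lowercasing and collapsing whitespace is an illustrative normalization choice; it suits FAQ-style questions but could merge prompts where case matters.

```python
import hashlib
import time


class ResponseCache:
    """In-memory TTL cache for model responses, keyed by a normalized prompt."""

    def __init__(self, ttl_seconds: float = 3600.0, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store = {}  # key -> (expires_at, response)

    @staticmethod
    def _key(model_id: str, prompt: str) -> str:
        # Collapse whitespace and case so trivially different prompts share an entry.
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(f"{model_id}\n{normalized}".encode("utf-8")).hexdigest()

    def get_or_call(self, model_id: str, prompt: str, call_model):
        key = self._key(model_id, prompt)
        now = self.clock()
        hit = self._store.get(key)
        if hit is not None and hit[0] > now:
            return hit[1]  # cache hit: no API call, no token charges
        response = call_model(prompt)
        self._store[key] = (now + self.ttl, response)
        return response
```

An in-memory dict only lives as long as one process (or one warm Lambda instance); to share hits across instances, the same idea would sit on a shared store such as Amazon ElastiCache.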

Conclusion

GenAI on AWS doesn’t need to be an enterprise-only affair.

With smart architectural choices, startups and small teams can deploy and iterate on GenAI products for hundreds of dollars a month, not thousands.

It’s not about being cheap. It’s about being smart.

Want to see a GenAI architecture diagram or deployment pack tailored for AWS?

Contact us for more details.